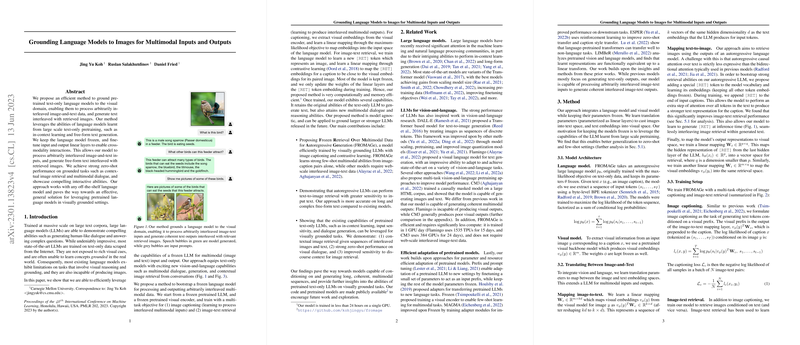

The paper "Grounding LLMs to Images for Multimodal Inputs and Outputs" (Koh et al., 2023) introduces Frozen Retrieval Over Multimodal Data for Autoregressive Generation (FROMAGe), a method for grounding pre-trained text-only LLMs to the visual domain. This enables the model to process arbitrarily interleaved image-and-text data and to generate text interleaved with retrieved images. The key idea is to leverage the existing capabilities of LLMs, such as in-context learning and free-form text generation, while adapting them to handle visual information.

The approach involves keeping the LLM frozen and fine-tuning input and output linear layers to facilitate cross-modality interactions. The model is trained with a multi-task objective:

- Image captioning: learning to process interleaved multimodal inputs.

- Image-text retrieval: learning to produce interleaved multimodal outputs.

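For image captioning, visual embeddings $v_\phi(y) \in \mathbb{R}^m$ are extracted using a pre-trained visual encoder. A linear mapping, $\mathbf{W}_c \in \mathbb{R}^{m \times kd}$, is learned to map these embeddings into the input space of the LLM via a maximum-likelihood objective. Here $m$ is the dimension of the visual embeddings, $k$ is the number of vectors each image is mapped to, and $d$ is the hidden dimensionality of the LLM.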
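The mapping described above can be illustrated with a minimal, framework-free sketch. It assumes the projected vector of length `k * d` is split into `k` consecutive `d`-dimensional vectors; the function name `map_visual_embedding`, the list-of-rows matrix layout, and the toy sizes are illustrative assumptions, not the authors' implementation.

```python
import random


def map_visual_embedding(v, W_c, k, d):
    """Project an m-dim visual embedding to k vectors of d dims each.

    v:   list of m floats (output of the visual encoder)
    W_c: m x (k*d) matrix stored as a list of m rows
    """
    m = len(v)
    assert len(W_c) == m and all(len(row) == k * d for row in W_c)
    # flat[j] = sum_i v[i] * W_c[i][j], i.e. the row vector v^T W_c
    flat = [sum(v[i] * W_c[i][j] for i in range(m)) for j in range(k * d)]
    # Split the length-(k*d) vector into k consecutive d-dim vectors.
    return [flat[t * d:(t + 1) * d] for t in range(k)]


# Toy sizes chosen only for illustration.
m, k, d = 4, 2, 3
rng = random.Random(0)
v = [rng.gauss(0, 1) for _ in range(m)]
W_c = [[rng.gauss(0, 1) for _ in range(k * d)] for _ in range(m)]
visual_tokens = map_visual_embedding(v, W_c, k, d)
print(len(visual_tokens), len(visual_tokens[0]))  # 2 3
```

In a real system the resulting `k` vectors would be placed in the LLM's input sequence alongside the text token embeddings, and only the entries of `W_c` would receive gradients from the captioning loss.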

For image-text retrieval, the LLM learns a new [RET] token representing an image. Another linear mapping, $\mathbf{W}_t$, is trained using contrastive learning to map the [RET] embedding for a caption close to the visual embedding of its paired image. The visual embeddings are mapped into the same $q$-dimensional retrieval space using the linear mapping $\mathbf{W}_i$. Here $h_\theta(x)$ is the hidden representation of the [RET] token from the last hidden layer of the LLM, and $q$ is the retrieval dimension.

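The normalized cosine similarity for the image and text embeddings is computed as:

$\text{sim}(x, y) = \frac{(h_\theta(x)^\top \mathbf{W}_t)(v_\phi(y)^\top \mathbf{W}_i)^\top}{\lVert h_\theta(x)^\top \mathbf{W}_t \rVert \, \lVert v_\phi(y)^\top \mathbf{W}_i \rVert}$

Where:

- $x$: caption

- $y$: paired image

- $h_\theta(x)$: output of the last hidden layer of the LLM for the [RET] token

- $v_\phi(y)$: output of the visual encoder for the image

- $\mathbf{W}_t$: linear mapping applied to the hidden representation of [RET] from the last hidden layer of the LLM

- $\mathbf{W}_i$: linear mapping applied to the visual embeddings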
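As a rough illustration of the similarity computation, the sketch below projects a [RET] hidden state and a visual embedding into a shared space and takes their cosine similarity, then uses that score to pick the best candidate image. The helper names (`project`, `sim`, `retrieve`), the list-of-rows matrix layout, and the toy matrices are assumptions made for this example; non-zero projected vectors are assumed.

```python
import math


def project(vec, W):
    """Return vec^T W for a length-n vector and an n x q matrix (list of rows)."""
    q = len(W[0])
    return [sum(vec[i] * W[i][j] for i in range(len(vec))) for j in range(q)]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def sim(h_ret, v_img, W_t, W_i):
    """Similarity between a [RET] hidden state and a visual embedding."""
    return cosine_similarity(project(h_ret, W_t), project(v_img, W_i))


def retrieve(h_ret, image_embeddings, W_t, W_i):
    """Index of the candidate image with the highest similarity score."""
    scores = [sim(h_ret, v, W_t, W_i) for v in image_embeddings]
    return max(range(len(scores)), key=scores.__getitem__)


# Toy example: hidden size 3, visual size 2, retrieval size 2.
W_t = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
W_i = [[1.0, 0.0], [0.0, 1.0]]
h_ret = [1.0, 0.0, 5.0]                            # projects to [1, 0]
candidates = [[0.0, 1.0], [2.0, 0.0], [1.0, 1.0]]
print(retrieve(h_ret, candidates, W_t, W_i))       # 1
```

Because the score is normalized, the second candidate wins even though its projected vector has a different length from the query's: only direction matters.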

The InfoNCE loss is minimized for text-to-image (t2i) and image-to-text (i2t) retrieval over a batch of $N$ text-image pairs $(x_i, y_i)$. The loss functions are:

$\mathcal{L}_{\text{t2i}} = -\frac{1}{N} \sum_{i=1}^N \left( \log \frac{\exp(\text{sim}(x_i, y_i) / \tau)}{ \sum_{j=1}^N \exp(\text{sim}(x_i, y_j) / \tau )} \right)$

$\mathcal{L}_{\text{i2t}} = -\frac{1}{N} \sum_{i=1}^N \left( \log \frac{\exp(\text{sim}(y_i, x_i) / \tau)}{ \sum_{j=1}^N \exp(\text{sim}(y_i, x_j) / \tau )} \right)$

Where: $\tau$: learnable temperature parameter.
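The two retrieval losses can be sketched directly from the formulas above. This is a minimal illustration, assuming the pairwise similarities have already been computed into an `N x N` matrix whose entry `[i][j]` is the similarity between caption `i` and image `j`. The function names are hypothetical, and the temperature is passed as a fixed number here even though it is learnable in the paper.

```python
import math


def info_nce(sim_matrix, tau):
    """Mean over rows i of -log softmax(sim_matrix[i] / tau)[i]."""
    n = len(sim_matrix)
    total = 0.0
    for i, row in enumerate(sim_matrix):
        logits = [s / tau for s in row]
        # Log-sum-exp with the max subtracted for numerical stability.
        mx = max(logits)
        log_denominator = mx + math.log(sum(math.exp(l - mx) for l in logits))
        total += log_denominator - logits[i]
    return total / n


def retrieval_losses(sim_matrix, tau):
    """Return (t2i, i2t) losses; sim_matrix[i][j] = sim(caption i, image j)."""
    t2i = info_nce(sim_matrix, tau)
    # Cosine similarity is symmetric, so the image-to-text scores are
    # simply the transpose of the text-to-image scores.
    transposed = [list(col) for col in zip(*sim_matrix)]
    i2t = info_nce(transposed, tau)
    return t2i, i2t


# Two perfectly matched pairs with orthogonal negatives.
S = [[1.0, 0.0], [0.0, 1.0]]
t2i, i2t = retrieval_losses(S, tau=1.0)
print(round(t2i, 4), round(i2t, 4))  # 0.3133 0.3133
```

Lowering `tau` sharpens the softmax: with the same matrix and `tau=0.1`, both losses fall close to zero, while a matrix of identical scores gives `log(N)` regardless of temperature.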

The final training loss is a weighted sum of the captioning loss and the retrieval losses:

$\mathcal{L} = \lambda_c \mathcal{L}_{\text{c}} + \lambda_r (\mathcal{L}_{\text{t2i}} + \mathcal{L}_{\text{i2t}})$

Where: $\lambda_c$: captioning loss weight; $\lambda_r$: retrieval loss weight.
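A small worked example may make the weighting concrete. The function name and the numeric values below are made up for illustration, and the default weights of 1.0 are placeholders rather than values taken from the paper.

```python
def total_loss(loss_c, loss_t2i, loss_i2t, lambda_c=1.0, lambda_r=1.0):
    """Weighted sum of the captioning loss and the two retrieval losses."""
    return lambda_c * loss_c + lambda_r * (loss_t2i + loss_i2t)


# 1.0 * 2.0 + 2.0 * (0.5 + 0.25) = 3.5
print(total_loss(2.0, 0.5, 0.25, lambda_c=1.0, lambda_r=2.0))  # 3.5
```

Note that a single retrieval weight scales both retrieval directions together, so text-to-image and image-to-text always contribute with equal weight relative to each other.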

During training, only the linear mappings ($\mathbf{W}_c$, $\mathbf{W}_t$, and $\mathbf{W}_i$) and the [RET] embedding vector are updated.

The paper evaluates FROMAGe on tasks such as contextual image retrieval and visual dialogue, demonstrating strong zero-shot performance. Key findings include:

- Autoregressive LLMs can perform text-to-image retrieval with greater sensitivity to input text compared to existing models.

- The existing capabilities of pre-trained text-only LLMs can be leveraged for visually grounded tasks.

Experiments on the Visual Storytelling (VIST) dataset [huang2016visual] show that FROMAGe outperforms CLIP [radford2021learning] in contextual image retrieval, especially when provided with longer, temporally dependent sentences and interleaved image-and-text context. On Visual Dialog (VisDial) [das2017visual], FROMAGe achieves competitive results in zero-shot text answer selection and significantly outperforms prior work in text-to-image retrieval. Ablation studies validate the importance of freezing the LLM and using a dedicated retrieval token. The paper also presents results showing a positive correlation between model size and performance.