Overview of "Large Language Models Encode Clinical Knowledge"

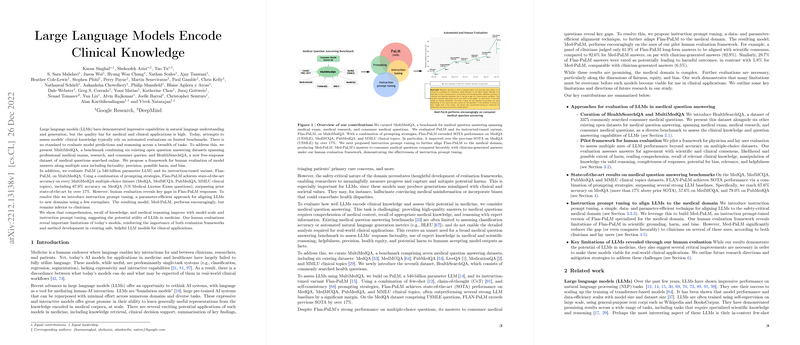

The paper "Large Language Models Encode Clinical Knowledge" addresses how to assess and adapt LLMs for clinical applications. It presents MultiMedQA, a benchmark of seven datasets designed to evaluate the clinical knowledge of LLMs: MedQA (USMLE), MedMCQA (AIIMS/NEET), PubMedQA, MMLU clinical topics, LiveQA, MedicationQA, and the newly introduced HealthSearchQA.

MultiMedQA Benchmark

MultiMedQA is a benchmark that combines six existing medical question-answering datasets and one new dataset, HealthSearchQA. The paper emphasizes the benchmark's utility in evaluating model predictions across a range of medical tasks, including professional medical exams, medical research queries, and consumer health questions. HealthSearchQA, curated from commonly searched health queries, adds a consumer-focused dimension to this comprehensive benchmark.
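The composition described above can be written down as a small data structure. This is a minimal sketch, not code from the paper: the `Dataset` class, its field names, and the `names_by_format` helper are hypothetical, and the split into multiple-choice and long-form datasets reflects one reading of how the paper groups them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    """One MultiMedQA component (field names are illustrative assumptions)."""
    name: str
    answer_format: str  # "multiple_choice" or "long_form"
    is_new: bool = False  # True only for the dataset introduced by the paper


# Six existing datasets plus the newly introduced HealthSearchQA.
MULTIMEDQA = [
    Dataset("MedQA (USMLE)", "multiple_choice"),
    Dataset("MedMCQA (AIIMS/NEET)", "multiple_choice"),
    Dataset("PubMedQA", "multiple_choice"),
    Dataset("MMLU clinical topics", "multiple_choice"),
    Dataset("LiveQA", "long_form"),
    Dataset("MedicationQA", "long_form"),
    Dataset("HealthSearchQA", "long_form", is_new=True),
]


def names_by_format(datasets, answer_format):
    """Return the names of all datasets with the given answer format."""
    return [d.name for d in datasets if d.answer_format == answer_format]
```

Grouping by answer format matters because the two groups are scored differently: multiple-choice answers can be checked automatically, while long-form answers need human raters.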

Evaluation of PaLM and Flan-PaLM

The authors evaluated PaLM, a 540-billion-parameter LLM, and its instruction-tuned variant, Flan-PaLM, using MultiMedQA. The results showed that Flan-PaLM achieved state-of-the-art (SOTA) accuracy on the benchmark's multiple-choice datasets, surpassing previous models. For instance, Flan-PaLM attained 67.6% accuracy on the MedQA dataset, exceeding the prior SOTA by more than 17 percentage points.
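Multiple-choice accuracy, and the gap between two accuracies, can be computed as follows. This is a minimal sketch with made-up toy answers; the function names are hypothetical and the numbers are not results from the paper.

```python
def accuracy(predictions, gold):
    """Fraction of questions where the predicted option matches the gold option."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)


def percentage_point_gap(new_accuracy, old_accuracy):
    """Difference between two accuracies, expressed in percentage points."""
    return (new_accuracy - old_accuracy) * 100


# Toy example: four questions, three answered correctly.
predictions = ["A", "C", "B", "D"]
gold = ["A", "C", "D", "D"]
print(accuracy(predictions, gold))  # 0.75
```

Reporting the gap in percentage points (an absolute difference) avoids confusion with a relative improvement, which would divide by the older accuracy.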

Human Evaluation Framework

To complement automated evaluations, the authors proposed a human evaluation framework that assesses answers along multiple axes, including factuality, precision, potential harm, and bias. This framework was crucial in uncovering key gaps in the LLM's responses, particularly in Flan-PaLM's long-form answers to consumer medical questions.
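Per-axis human ratings of this kind are typically summarized as the share of answers that raters flagged on each axis. The sketch below assumes a simplified format in which each rating is an `(axis, flagged)` pair; that format, the function name, and the toy data are hypothetical and are not the paper's rating schema.

```python
from collections import defaultdict


def flagged_rate_by_axis(ratings):
    """Return, for each axis, the fraction of ratings where a rater flagged a problem."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for axis, is_flagged in ratings:
        totals[axis] += 1
        flagged[axis] += int(is_flagged)
    return {axis: flagged[axis] / totals[axis] for axis in totals}


# Toy ratings for two of the axes named above (invented values).
toy_ratings = [
    ("factuality", True),
    ("factuality", False),
    ("potential harm", False),
    ("potential harm", False),
]
print(flagged_rate_by_axis(toy_ratings))
```

Keeping the axes separate, rather than collapsing them into one score, is what lets an evaluation show that a model is accurate on exams yet still weak on, say, potential harm.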

Instruction Prompt Tuning

To address these gaps, the paper introduces instruction prompt tuning, a parameter- and data-efficient method for aligning an LLM to a specific domain using a small number of exemplars. The resulting model, Med-PaLM, underwent extensive human evaluation and demonstrated alignment improvements, with answers that compared far more favorably with clinician-generated responses, although the paper notes that it remains inferior to clinicians. Notably, Med-PaLM's responses aligned with scientific consensus 92.6% of the time and were rated as potentially harmful much less often than Flan-PaLM's.
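The general soft-prompt idea behind prompt tuning can be sketched in a few lines: a small matrix of learned vectors is prepended to the embedded text prompt, and only those vectors are updated while the model's own weights stay frozen. The code below is a toy illustration under that assumption, not the paper's implementation; all function names are hypothetical, and the gradients are passed in rather than computed, since computing them would require backpropagation through a real model.

```python
import random


def init_soft_prompt(num_vectors, dim, seed=0):
    """Create a small matrix of trainable prompt vectors (the only trainable parameters)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(num_vectors)]


def build_model_input(soft_prompt, token_embeddings):
    """Prepend the learned vectors to the embeddings of the text prompt."""
    return soft_prompt + token_embeddings


def sgd_step(soft_prompt, grads, learning_rate):
    """Update only the soft prompt; the language model's weights are never touched."""
    return [
        [w - learning_rate * g for w, g in zip(row, grad_row)]
        for row, grad_row in zip(soft_prompt, grads)
    ]


# Toy usage: 2 soft-prompt vectors in front of 3 token embeddings of width 4.
soft_prompt = init_soft_prompt(num_vectors=2, dim=4)
token_embeddings = [[0.0] * 4 for _ in range(3)]
model_input = build_model_input(soft_prompt, token_embeddings)
print(len(model_input))  # 5
```

Because only the prompt vectors are trained, the number of learned parameters is tiny compared with the model itself, which is what makes this family of methods practical when only a few expert-written exemplars are available.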

Key Findings

- State-of-the-art Performance: Flan-PaLM achieved SOTA results across several datasets, including 67.6% accuracy on MedQA.

- Instruction Prompt Tuning Effectiveness: This method improved model alignment with the medical domain, as evidenced by Med-PaLM's answers agreeing with scientific consensus 92.6% of the time in human evaluation.

- Human Evaluation Insights: The evaluation framework highlighted critical areas for improvement, emphasizing the need for both robust evaluation tools and cautious application in clinical contexts.

Implications and Future Developments

The findings suggest a promising trajectory for LLMs in clinical applications, indicating potential utility in tasks ranging from clinical decision support to patient education. However, the paper also underscores the necessity for ongoing evaluation frameworks and alignment techniques to ensure the safety and reliability of these models in practice. Future research could explore enhancements in fairness and bias mitigation, as well as multilingual support to expand applicability across diverse populations.

Conclusion

The paper represents a significant step forward in understanding and improving LLMs for clinical knowledge applications. While current models such as Med-PaLM show considerable potential, the path to real-world clinical implementation will require continued progress on accuracy, safety, and ethics. The paper lays a foundation for that work, highlighting both the opportunities and the challenges that lie ahead.