Exploring AltCLIP: Enhancing CLIP's Language Encoder for Multilingual Multimodal Applications

This paper investigates how to extend CLIP's language encoder so that the model supports multiple languages. The authors, Chen et al., propose AltCLIP, an approach that keeps the strengths of OpenAI's original CLIP model, in particular its image encoder and its text-image alignment, while extending it to handle languages beyond English.

Methodology

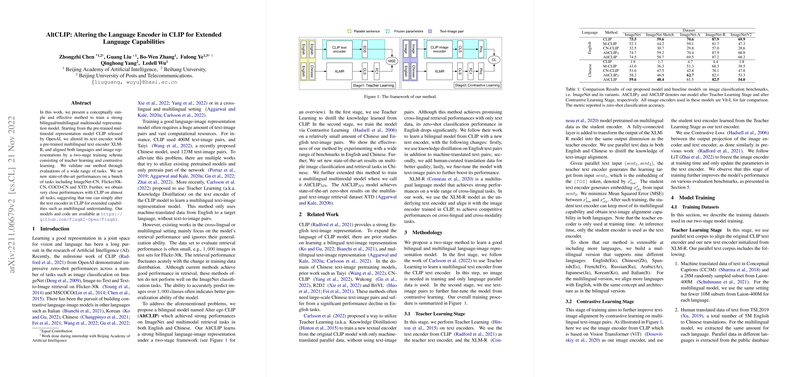

The authors replace CLIP's text encoder with XLM-R, a pretrained multilingual text encoder, while keeping CLIP's image encoder. The goal is to give the model bilingual and multilingual capabilities without sacrificing the quality of its text-image alignment. To achieve this, the authors train the new text encoder in two stages: Teacher Learning followed by Contrastive Learning.
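Swapping the text encoder only works if the new encoder's outputs live in the same embedding space as CLIP's unchanged image encoder. The sketch below illustrates that constraint with a dual encoder scored by cosine similarity, where a linear projection head sits on top of a stand-in multilingual encoder. Everything here is hypothetical: `LinearProjection`, `DualEncoder`, and `toy_multilingual_encoder` are illustrative names, the tiny dimensions are placeholders (a real setup might, for example, map a 1024-dimensional XLM-R large hidden state to the 768-dimensional embedding of CLIP ViT-L/14), and the projection is randomly initialized rather than trained.

```python
import math
import random
from typing import Callable, List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length; CLIP-style models compare normalized embeddings."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


class LinearProjection:
    """Hypothetical linear head mapping a text encoder's hidden state
    (e.g. an XLM-R [CLS] vector) to the dimension of CLIP's embedding space."""

    def __init__(self, in_dim: int, out_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)  # fixed seed so the toy example is reproducible
        scale = 1.0 / math.sqrt(in_dim)
        self.weight = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(out_dim)]
        self.bias = [0.0] * out_dim

    def __call__(self, hidden: Vector) -> Vector:
        return [sum(w * h for w, h in zip(row, hidden)) + b
                for row, b in zip(self.weight, self.bias)]


class DualEncoder:
    """Image tower and text tower scored by cosine similarity.
    Swapping the text tower leaves the image tower untouched."""

    def __init__(self, encode_image: Callable[[Vector], Vector],
                 encode_text: Callable[[str], Vector]) -> None:
        self.encode_image = encode_image
        self.encode_text = encode_text

    def similarity(self, image: Vector, text: str) -> float:
        img = l2_normalize(self.encode_image(image))
        txt = l2_normalize(self.encode_text(text))
        return sum(a * b for a, b in zip(img, txt))


def toy_multilingual_encoder(text: str) -> Vector:
    """Hypothetical stand-in for XLM-R (NOT a real encoder):
    a 4-d 'hidden state' built from character codes."""
    hidden = [0.0] * 4
    for i, ch in enumerate(text):
        hidden[i % 4] += ord(ch) / 1000.0
    return hidden


projection = LinearProjection(in_dim=4, out_dim=3)
model = DualEncoder(
    encode_image=lambda pixels: pixels,  # pretend the input is already a 3-d CLIP image embedding
    encode_text=lambda t: projection(toy_multilingual_encoder(t)),
)
score_en = model.similarity([0.2, 0.5, 0.1], "a photo of a cat")
score_zh = model.similarity([0.2, 0.5, 0.1], "一张猫的照片")
```

Until the projection and the encoder beneath it are trained, these scores carry no meaning; making the new text tower agree with the image tower is the job of the Teacher Learning and Contrastive Learning stages.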

- Teacher Learning Stage: This stage uses knowledge distillation, with CLIP's original English text encoder as the teacher and the multilingual XLM-R encoder as the student. Because the teacher's text embeddings are already aligned with CLIP's image embeddings, a student that learns to reproduce them inherits much of that text-image alignment; this stage therefore needs only text, not large text-image datasets. It uses both machine-translated and human-curated parallel text, so that sentences in other languages are mapped close to the embeddings of their English counterparts.

- Contrastive Learning Stage: To strengthen the text-image alignment further, the authors fine-tune the model with contrastive learning on a dataset of text-image pairs, pulling matching texts and images together in the embedding space and pushing mismatched ones apart. This stage improves AltCLIP's performance on vision-language tasks across multiple languages.
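The two stages can be summarized by their training objectives. The sketch below is a minimal, dependency-free illustration under stated assumptions: the Teacher Learning loss is written as a mean-squared error that pulls the student's (XLM-R plus projection) embeddings of an English sentence and of its translation toward the frozen teacher's embedding of the English sentence, and the Contrastive Learning loss is the symmetric InfoNCE objective used by CLIP. The function names are hypothetical, and this summary does not specify AltCLIP's exact loss formulation or which parameters are frozen in each stage. The default `temperature=0.07` is CLIP's initial value; CLIP learns it during training.

```python
import math
from typing import List, Sequence

Vector = List[float]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def teacher_learning_loss(teacher_en: Vector, student_en: Vector,
                          student_translated: Vector) -> float:
    """Stage 1 (distillation; the MSE form is an assumption): the frozen teacher
    sees only the English sentence, the student embeds both the English sentence
    and its translation, and both outputs are pulled toward the teacher's embedding."""
    return mse(student_en, teacher_en) + mse(student_translated, teacher_en)


def _normalize(v: Vector) -> Vector:
    """Unit-normalize a non-zero vector."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _log_softmax(row: Sequence[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum for x in row]


def contrastive_loss(image_embs: Sequence[Vector], text_embs: Sequence[Vector],
                     temperature: float = 0.07) -> float:
    """Stage 2: symmetric InfoNCE loss as in CLIP. image_embs[i] and text_embs[i]
    form a matching pair; every other pairing in the batch acts as a negative."""
    imgs = [_normalize(v) for v in image_embs]
    txts = [_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
              for i in range(n)]
    # Image-to-text direction: softmax over each row, the target is the diagonal entry.
    loss_i2t = -sum(_log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image direction: softmax over each column, the target is the diagonal entry.
    loss_t2i = -sum(_log_softmax([logits[i][j] for i in range(n)])[j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


# Toy usage with 2-d embeddings (hypothetical values).
stage1 = teacher_learning_loss([1.0, 0.0], [0.0, 0.0], [1.0, 0.0])  # 0.5: only the English output is off
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
shuffled = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

In the first call, the student's English output misses the teacher's embedding by 1 in one of two dimensions, giving an MSE of 0.5 while the translated output matches exactly. In the contrastive calls, matched pairs give a near-zero loss, while swapping the captions drives the loss up to roughly 1/0.07.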

Experimental Evaluation

The authors evaluated AltCLIP on widely used datasets, including ImageNet and MSCOCO, in both English and Chinese. AltCLIP performs close to the original CLIP model on English tasks and sets new state-of-the-art results in Chinese zero-shot image classification and retrieval.
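Zero-shot classification and retrieval both reduce to ranking by similarity in the shared embedding space. The sketch below is a minimal illustration, assuming precomputed embeddings: zero-shot classification picks the class whose prompt embedding is closest to the image embedding, and retrieval is scored with Recall@K, a metric commonly reported for retrieval benchmarks. The Chinese prompt template, the toy embeddings, and the function names are hypothetical and are not AltCLIP's actual evaluation code.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(image_emb: Vector, class_names: Sequence[str],
                       encode_text: Callable[[str], Vector],
                       template: str = "a photo of a {}.") -> str:
    """Return the class whose prompt embedding is most similar to the image embedding.
    No classifier is trained: the class names, wrapped in a prompt, act as the classifier."""
    scores = {name: cosine(image_emb, encode_text(template.format(name))) for name in class_names}
    return max(scores, key=scores.get)


def recall_at_k(query_embs: Sequence[Vector], gallery_embs: Sequence[Vector], k: int) -> float:
    """Fraction of queries whose matching gallery item (same index) ranks in the top k."""
    hits = 0
    for i, query in enumerate(query_embs):
        ranked = sorted(range(len(gallery_embs)),
                        key=lambda j: cosine(query, gallery_embs[j]), reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(query_embs)


# Hypothetical text "encoder": a lookup table of precomputed prompt embeddings.
prompt_embs = {
    "一张猫的照片。": [0.9, 0.1, 0.0],  # "a photo of a cat."
    "一张狗的照片。": [0.1, 0.9, 0.0],  # "a photo of a dog."
}
label = zero_shot_classify([0.8, 0.2, 0.1], ["猫", "狗"], prompt_embs.__getitem__,
                           template="一张{}的照片。")

queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
gallery = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
r1 = recall_at_k(queries, gallery, k=1)  # 2/3: the third query's match ranks last
r3 = recall_at_k(queries, gallery, k=3)  # 1.0 with a gallery of three items
```

Because the prompts are ordinary text, evaluating in another language only requires changing the template and class names, which is exactly what a multilingual text encoder makes possible.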

- In comparisons with other multilingual or Chinese CLIP variants such as M-CLIP and CN-CLIP, AltCLIP came out ahead, indicating stronger multilingual representations.

- The paper highlights that AltCLIP outperformed models trained on much larger datasets while using only 36 million text samples and 2 million text-image pairs.

Implications and Future Directions

The implications of AltCLIP are significant: it extends CLIP's utility to multilingual settings at modest cost. The method offers a scalable way to add languages to text-image representation models such as CLIP, and it is an economical alternative to training such models from scratch, which typically demands large amounts of data and compute.

On the theoretical side, the results suggest that zero-shot capabilities can be extended to new languages without degrading visual understanding. On the practical side, AltCLIP could be built upon to improve machine translation models, language understanding systems, and image search engines in various languages.

Future directions include applying a similar approach to other components of CLIP, such as the image encoder, which could improve performance across diverse datasets, and reducing the dependence on machine-translated training data. Investigating cultural biases introduced through multilingual encoders could also inform efforts to improve model fairness and performance.

In conclusion, AltCLIP is a promising step forward for multilingual multimodal models. It shows that CLIP's language coverage can be extended by swapping in a multilingual text encoder and aligning it through distillation and contrastive fine-tuning, with comparatively little training data.