CLIPPO: Image-and-Language Understanding from Pixels Only

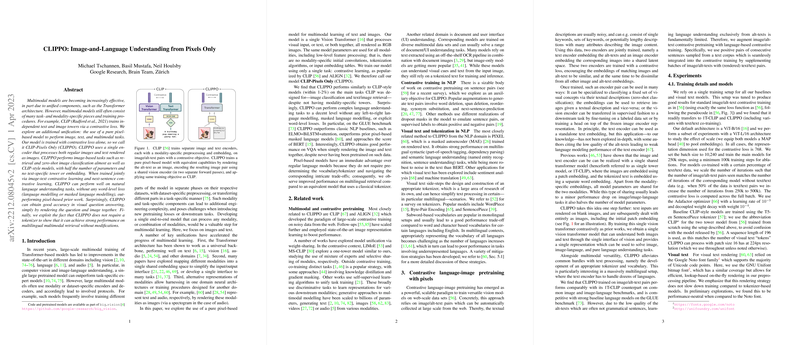

The paper "CLIPPO: Image-and-Language Understanding from Pixels Only" introduces a novel approach to multimodal learning by leveraging a pure pixel-based model, termed CLIPPO, to process images and text alike. This approach seeks to unify the treatment of different modalities within a single model architecture, diverging from traditional multimodal models that typically involve distinct text and image processing pathways.

Key Contributions

- Unified Encoder: Unlike previous multimodal models such as CLIP, which use modality-specific towers, CLIPPO employs a single Vision Transformer encoder for both images and rendered text. This unification reduces parameter count substantially while maintaining competitive performance.

- Contrastive Loss Paradigm: The training of CLIPPO relies exclusively on contrastive learning, drawing inspiration from the CLIP and ALIGN frameworks. The model processes text by rendering it as an image, thereby allowing the model to handle both images and text without requiring text-specific embeddings or tokenization.

- Performance Evaluation: CLIPPO realizes a comparable performance with traditional CLIP-style models on key tasks such as image classification and retrieval, with minimal parameter use. The simplicity of using a single encoder for both input types contributes to its efficiency and practical application.

- Language Understanding: By employing contrastive language-image pretraining only, CLIPPO achieves noteworthy performance on natural language understanding benchmarks like GLUE without specific word-level losses, surpassing several classical NLP baselines.

- Multilingual Capabilities: The absence of a tokenizer allows CLIPPO to excel in multilingual scenarios, exhibiting robust performance across various languages without any adaptation or prespecified vocabulary constraints.

Experimental Analysis

The paper provides a comprehensive set of experiments validating CLIPPO's approach. Key performance metrics include:

- ImageNet Classification: CLIPPO achieves nearly equivalent classification accuracy to models with separate text and image towers.

- VQA (Visual Question Answering): When integrated directly into the image, question rendering allows CLIPPO to achieve competitive scores.

- GLUE Benchmark: Particularly when co-trained with language-based contrastive tasks, CLIPPO approaches the performance levels of models that utilize extensive linguistic pretraining.

- Crossmodal Retrieval: The model demonstrates high retrieval accuracy across multiple languages, underscoring its capacity to operate without the constraints of traditional tokenization.

Implications and Future Directions

The findings from this research signify an important step towards simplifying multimodal model architectures by integrating textual inputs into the visual domain. This not only reduces complexity and parameter count but also simplifies preprocessing pipelines and expands multilingual handling capabilities. The indicated results inspire further exploration into purely pixel-based approaches for other modality domains such as audio and beyond.

Future developments could harness this framework's potential for language generation and expand its application to more complex multimodal tasks. Addressing the trade-offs between language-only and image-driven tasks through refined co-training strategies represents another promising future challenge. Moreover, extending CLIPPO for more diverse visual text scenarios, including noise robustness and document processing, could significantly broaden its application base.

In conclusion, CLIPPO presents a streamlined, efficient approach to multimodal learning, simplifying traditional architectures into a unified framework without sacrificing performance across both image and text tasks. This innovative step holds promise for broader applications in AI, encouraging ongoing discourse and development within the research community.