An Expert Overview of "Towards a Unified Multi-Dimensional Evaluator for Text Generation"

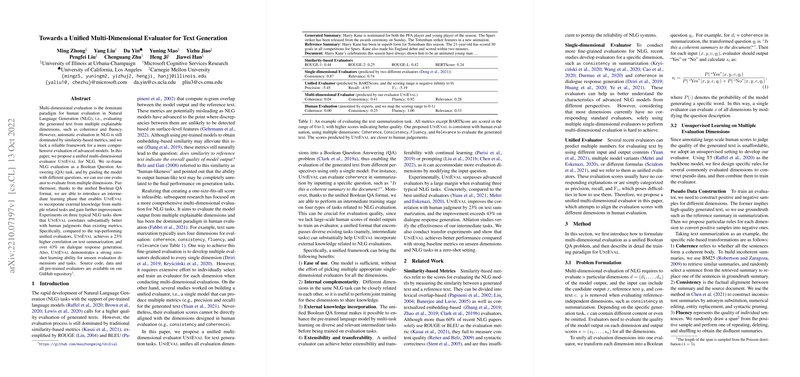

The paper "Towards a Unified Multi-Dimensional Evaluator for Text Generation" introduces UniEval, an evaluator for Natural Language Generation (NLG) systems. Traditional metrics such as ROUGE and BLEU measure similarity to a reference text and can overlook other quality dimensions such as coherence, consistency, fluency, and relevance. To address this limitation, the authors reframe NLG evaluation as a Boolean Question Answering (QA) problem, which lets a single model score text along multiple dimensions.

Technical Approach

UniEval builds on a pre-trained language model and casts evaluation as a Boolean QA task. Each quality dimension is expressed as its own question, so the same model can score different dimensions. For example, coherence is assessed by asking "Is this a coherent summary?", and the score is derived from the probability the model assigns to answering "Yes" rather than "No". The model also undergoes intermediate learning on tasks related to NLG evaluation, which improves its robustness and lets it incorporate external knowledge.
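The scoring step can be illustrated with a minimal sketch. It assumes the score is the probability of "Yes" normalized over the two answers "Yes" and "No", and that the two logits have already been obtained from some model (the model call is not shown). The function names `build_question` and `boolean_qa_score` are hypothetical and do not come from the paper's code.

```python
import math


def build_question(dimension: str, output_kind: str = "summary") -> str:
    """Phrase one quality dimension as a yes/no question (hypothetical helper)."""
    article = "an" if dimension[0].lower() in "aeiou" else "a"
    return f"Is this {article} {dimension} {output_kind}?"


def boolean_qa_score(logit_yes: float, logit_no: float) -> float:
    """Return P(Yes) / (P(Yes) + P(No)) from the logits of the two answers.

    Assumption: the evaluator exposes a logit for "Yes" and one for "No".
    Normalizing over just these two answers equals a two-way softmax.
    """
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(logit_yes, logit_no)
    e_yes = math.exp(logit_yes - m)
    e_no = math.exp(logit_no - m)
    return e_yes / (e_yes + e_no)


if __name__ == "__main__":
    for dim in ("coherent", "fluent"):
        # The logits below are placeholders, not real model outputs.
        print(build_question(dim), round(boolean_qa_score(1.2, -0.3), 3))
```

Because only the question changes between dimensions, adding a dimension in this sketch means adding a string, not a new model.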

Training is unsupervised: it relies on pseudo data generated through rule-based transformations, not on human-annotated scores. Ground-truth texts serve as positive samples, and negative samples are derived from them with dimension-specific transformations, such as replacing a sentence to break coherence or substituting antonyms to break consistency.
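A rough sketch of this kind of pseudo-data construction follows. It is an illustration under stated assumptions, not the authors' pipeline: the antonym table, the naive period-based sentence splitter, and all function names are hypothetical, and the input summary is assumed to be non-empty.

```python
import random

# Tiny illustrative antonym table (an assumption; a real system would use
# a lexical resource).
ANTONYMS = {"rose": "fell", "won": "lost", "increase": "decrease", "before": "after"}


def split_sentences(text):
    """Naive splitter on periods; enough for a sketch."""
    return [s.strip() for s in text.split(".") if s.strip()]


def corrupt_coherence(summary, distractor, rng):
    """Replace one randomly chosen sentence with an unrelated sentence."""
    sents = split_sentences(summary)
    sents[rng.randrange(len(sents))] = distractor.strip().rstrip(".")
    return ". ".join(sents) + "."


def corrupt_consistency(summary):
    """Swap the first word found in the antonym table; None if no word matches."""
    words = summary.split()
    for i, word in enumerate(words):
        core = word.strip(".,;:!?")
        if core.lower() in ANTONYMS:
            words[i] = word.replace(core, ANTONYMS[core.lower()])
            return " ".join(words)
    return None


def make_examples(summary, distractor, rng):
    """Build (dimension, text, answer) triples: originals are 'Yes', corrupted are 'No'."""
    examples = [("coherence", summary, "Yes"), ("consistency", summary, "Yes")]
    examples.append(("coherence", corrupt_coherence(summary, distractor, rng), "No"))
    flipped = corrupt_consistency(summary)
    if flipped is not None:
        examples.append(("consistency", flipped, "No"))
    return examples


if __name__ == "__main__":
    rng = random.Random(0)
    for row in make_examples("Sales rose in March. The company hired staff.",
                             "The weather was mild.", rng):
        print(row)
```

Each triple maps directly onto a Boolean QA training instance: the dimension selects the question, the text is the input, and "Yes" or "No" is the target answer.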

Empirical Findings

Extensive experiments on summarization and dialogue response generation show that UniEval correlates better with human judgments than existing metrics. Compared with the best-performing existing unified evaluators, it achieves a 23% higher correlation with human judgments on text summarization and over 43% higher on dialogue response generation. UniEval also shows strong zero-shot ability: it can be applied to unseen evaluation dimensions and tasks without additional training.
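To make "correlation with human judgments" concrete, the sketch below computes a Spearman rank correlation between metric scores and human ratings, plus the relative gain of one correlation over another. Spearman is used here as a common choice for this kind of meta-evaluation; the function names are hypothetical, and no numbers from the paper are reproduced.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of items tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; assumes neither input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(metric_scores, human_scores):
    """Spearman correlation = Pearson correlation of the rank vectors."""
    return pearson(average_ranks(metric_scores), average_ranks(human_scores))


def relative_gain(new_corr, old_corr):
    """Relative improvement of one correlation over another, e.g. 0.23 for 23%."""
    return (new_corr - old_corr) / old_corr
```

A statement such as "23% higher correlation" corresponds to `relative_gain` returning about 0.23 for the two evaluators' correlations on the same benchmark.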

Theoretical and Practical Implications

UniEval represents both a theoretical and a practical advance in NLG evaluation. Theoretically, it reinforces the view that multi-dimensional evaluation is needed to capture the quality of generated text, and that surface-level similarity metrics do not suffice for judging the output of sophisticated language models. Practically, it offers a unified, extensible framework that can adapt to new NLG tasks and dimensions, potentially reducing the need to develop task-specific evaluators.

Future Prospects in AI Development

UniEval’s approach points to a promising direction for AI research, one that emphasizes adaptability and multi-faceted evaluation of AI-generated content. Future work could refine the transformation rules and add further dimensions to the unified evaluator. Extending the approach to other languages and to more complex NLG tasks would also broaden its applicability.

This paper contributes a robust framework to the NLG evaluation landscape, advocating for comprehensive evaluation beyond traditional metrics, a direction that holds significant promise for advancing AI's capabilities and trustworthiness in language generation tasks.