kNN-Prompt: Nearest Neighbor Zero-Shot Inference

The paper "kNN-Prompt: Nearest Neighbor Zero-Shot Inference" explores how retrieval augmentation can improve zero-shot inference in language models (LMs). Retrieval-augmented LMs draw on a non-parametric memory, which has produced significant improvements in perplexity-based evaluations. This paper extends that line of work to zero-shot and few-shot end-task evaluation, building on the k-nearest neighbor language model (kNN-LM).
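As background, a kNN-LM is usually described as interpolating the base LM's next-token distribution with a distribution built from retrieved neighbors. The following is a minimal sketch of that interpolation, not the paper's implementation: the function names, the temperature, and the interpolation weight `lam` are illustrative assumptions, and distributions are plain dictionaries rather than tensors.

```python
import math
from collections import defaultdict


def knn_distribution(neighbors, temperature=1.0):
    """Turn retrieved (distance, next_token) pairs into a sparse distribution.

    Weights are a softmax over negative distances; neighbors that share a
    token have their weights summed. All names here are hypothetical.
    """
    if not neighbors:
        return {}
    # Subtract the smallest distance before exponentiating, for stability.
    d_min = min(d for d, _ in neighbors)
    weights = [(math.exp(-(d - d_min) / temperature), tok) for d, tok in neighbors]
    total = sum(w for w, _ in weights)
    probs = defaultdict(float)
    for w, tok in weights:
        probs[tok] += w / total
    return dict(probs)


def interpolate(p_lm, p_knn, lam=0.3):
    """Mix the two distributions: lam * p_knn + (1 - lam) * p_lm."""
    vocab = set(p_lm) | set(p_knn)
    return {t: lam * p_knn.get(t, 0.0) + (1.0 - lam) * p_lm.get(t, 0.0) for t in vocab}


# Toy usage with made-up numbers.
p_lm = {"great": 0.5, "awful": 0.2, "fine": 0.3}
p_knn = knn_distribution([(1.0, "great"), (1.0, "great"), (1.0, "awful")])
mixed = interpolate(p_lm, p_knn, lam=0.3)
```

Because the neighbor distribution only covers tokens that were actually retrieved, it is sparse: any token absent from the neighbors receives probability from the base LM alone.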

Key Contributions

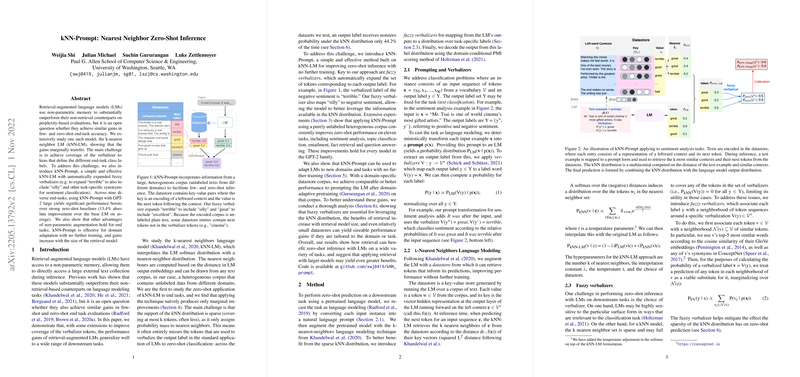

The primary challenge addressed in this work is achieving comprehensive coverage of the verbalizer tokens that define end-task class labels. The paper introduces kNN-Prompt, an extension of the kNN-LM that integrates fuzzy verbalizers, which automatically expand the set of tokens corresponding to each output label. For example, in sentiment classification the verbalizer "terrible" is expanded to also include "silly" and other task-specific synonyms. Applied to GPT-2 large, the method yields an average performance boost of 13.4% over strong zero-shot baselines across a diverse set of nine tasks.
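The idea of a fuzzy verbalizer can be sketched as follows. This is an illustration under stated assumptions rather than the paper's procedure: here the expansion uses cosine similarity over a toy embedding table, and the helper names, the `top_n` parameter, and all numbers are hypothetical. Each label is scored by summing the next-token probability mass over its expanded token set.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def expand_verbalizer(seed, embeddings, top_n=2):
    """Return the seed token plus its top_n most similar tokens (assumed rule)."""
    ranked = sorted(
        (tok for tok in embeddings if tok != seed),
        key=lambda tok: cosine(embeddings[seed], embeddings[tok]),
        reverse=True,
    )
    return {seed, *ranked[:top_n]}


def score_labels(p_next, fuzzy_verbalizers):
    """Sum next-token probability over each label's token set, then normalize."""
    mass = {
        label: sum(p_next.get(tok, 0.0) for tok in tokens)
        for label, tokens in fuzzy_verbalizers.items()
    }
    total = sum(mass.values())
    return {label: (m / total if total else 0.0) for label, m in mass.items()}


# Toy embedding table and next-token distribution (made-up values).
embeddings = {
    "terrible": [1.0, 0.0],
    "awful": [0.95, 0.05],
    "silly": [0.9, 0.1],
    "great": [0.0, 1.0],
    "good": [0.1, 0.9],
}
verbalizers = {
    "negative": expand_verbalizer("terrible", embeddings),
    "positive": expand_verbalizer("great", embeddings, top_n=1),
}
p_next = {"silly": 0.2, "great": 0.1, "terrible": 0.05, "the": 0.65}
scores = score_labels(p_next, verbalizers)
```

In this toy case most of the relevant probability sits on "silly" rather than on the seed token "terrible", so a single-token verbalizer would miss it while the expanded set captures it.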

Performance and Findings

- Improved Performance: kNN-Prompt was evaluated on a range of tasks including sentiment analysis, topic classification, and entailment. It improved significantly over both the base LM and the standard kNN-LM, indicating that the approach holds up across task types and domains.

- Fuzzy Verbalizers: The kNN distribution is sparse, placing probability only on tokens that appear among the retrieved neighbors, so a single verbalizer token per label often receives little or no support. Fuzzy verbalizers bridge this gap by mapping a broader set of tokens to each output label. This increases the support for output labels within kNN retrieval and also reduces sensitivity to the particular verbalizer chosen.

- Zero-Shot and Few-Shot Adaptability: The kNN-Prompt model is adaptable for both zero-shot and few-shot scenarios, providing consistent improvements without additional training. The paper also demonstrates the model's proficiency in domain adaptation by effectively utilizing domain-specific datastores.

- Scalability: Performance gains were shown to scale with the size of the retrieval model. Larger retrieval models lead to better results, albeit with increased memory and computational demands.
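To make the datastore and its cost concrete, the sketch below builds a tiny key-value store and queries it by exhaustive search. It is only an illustration: the vectors stand in for LM context representations, the function names are hypothetical, and a datastore of realistic size would rely on an approximate nearest-neighbor index instead of a linear scan.

```python
def build_datastore(examples):
    """Store one (key, value) pair per token position.

    examples: iterable of (context_vector, next_token) pairs. In practice the
    vectors would come from the LM's hidden states over a corpus, which is
    where a domain-specific corpus would be swapped in.
    """
    keys, values = [], []
    for vector, token in examples:
        keys.append(list(vector))
        values.append(token)
    return keys, values


def retrieve(query, keys, values, k=2):
    """Return the k nearest (squared L2 distance, next_token) pairs.

    Brute force: cost grows linearly with the number of stored keys.
    """
    scored = [
        (sum((q - x) ** 2 for q, x in zip(query, key)), token)
        for key, token in zip(keys, values)
    ]
    scored.sort(key=lambda pair: pair[0])
    return scored[:k]


# Toy usage with made-up vectors.
keys, values = build_datastore(
    [([0.0, 0.0], "good"), ([1.0, 1.0], "bad"), ([0.1, 0.0], "great")]
)
neighbors = retrieve([0.0, 0.1], keys, values, k=2)
```

Both memory and lookup time in this sketch scale with the number of stored entries, which is the trade-off the scalability point describes.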

Implications and Future Directions

The implications of this work lie in the potential of retrieval-augmented LMs to serve broader applications in AI by enhancing task-specific inference without extensive task-specific training data. The findings suggest that such models can be effectively adapted using domain-specific data, providing practical solutions for real-world applications where specific training data might be limited or costly to obtain.

Future research could investigate optimizing datastore sizes and efficiency in retrieval processes, perhaps through compression or more efficient indexing mechanisms. Additionally, the methodology could be extended to explore varying levels of context abstraction and retrieval at coarser granularities like sentences or paragraphs, which might enhance reasoning capabilities.

In conclusion, kNN-Prompt advances non-parametric language model augmentation: it improves zero-shot performance across a varied set of tasks without additional training, at the cost of the extra memory and computation that retrieval requires.