Pretraining Autoregressive LLMs with Retrieval: A Comprehensive Study

The paper "Shall We Pretrain Autoregressive LLMs with Retrieval? A Comprehensive Study" examines how integrating retrieval into pretraining affects large autoregressive LLMs. It compares the retrieval-augmented Retro model against standard GPT models and evaluates the benefit of adding retrieval at different stages of LLM development.

Key Contributions

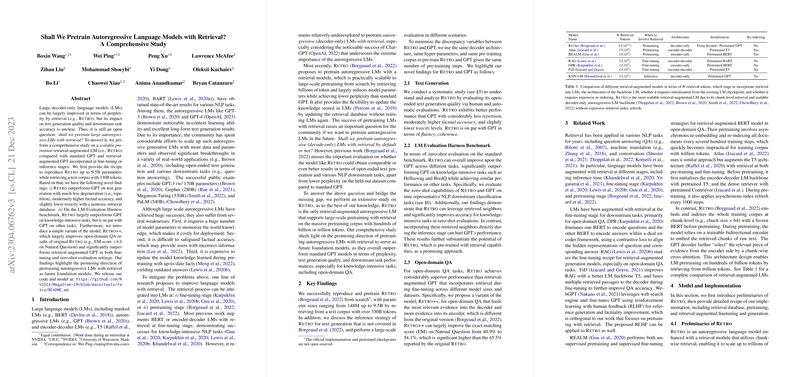

- Reproduction of Retro Models: The research reproduces the Retro model and scales it up to 9.5 billion parameters, retrieving from a text corpus of 330 billion tokens. The resulting models are evaluated comprehensively against standard GPT models.

- Evaluation of Text Generation: Retro is shown to outperform GPT on open-ended text generation tasks, particularly by reducing text degeneration, enhancing factual accuracy, and slightly decreasing toxicity. This suggests that the retrieval component effectively supplements the model's knowledge base.

- Performance on Downstream Tasks: The paper conducts evaluations on various benchmarks, notably demonstrating that Retro excels in knowledge-intensive tasks. For example, significant improvements were observed in tasks like open-domain QA.

- Retro++ Variant: The paper introduces a variant named Retro++, which markedly improves performance on open-domain question answering benchmarks. The modification makes better use of the most relevant retrieved evidence, improving accuracy and generation quality.
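
The retrieval step behind these contributions can be illustrated with a toy sketch. It assumes a Retro-style setup in which the input is split into fixed-size chunks and each chunk retrieves its nearest neighbors from a database. The bag-of-words `embed` function, the three-token chunk size, and the tiny `database` are illustrative assumptions, not the paper's encoder, chunk size, or corpus.

```python
import math
from collections import Counter


def embed(tokens):
    """Toy bag-of-words embedding (assumption: stands in for a real encoder)."""
    return Counter(tokens)


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def split_into_chunks(tokens, chunk_size):
    """Split a token list into consecutive chunks; the last one may be shorter."""
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


def retrieve_neighbors(query_chunk, database_chunks, k):
    """Return the k database chunks most similar to the query chunk."""
    query_vec = embed(query_chunk)
    scored = [
        (cosine(query_vec, embed(chunk)), idx)
        for idx, chunk in enumerate(database_chunks)
    ]
    # Highest similarity first; ties go to the earlier database entry.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [database_chunks[idx] for _, idx in scored[:k]]


# Hypothetical miniature retrieval database.
database = [
    "the eiffel tower is in paris".split(),
    "retrieval augments language models".split(),
    "paris is the capital of france".split(),
]

prompt = "where is the eiffel tower located".split()
for chunk in split_into_chunks(prompt, chunk_size=3):
    print(chunk, "->", retrieve_neighbors(chunk, database, k=1))
```

In a real system the brute-force scan would be replaced by an approximate nearest-neighbor index, since scoring every chunk of a 330-billion-token corpus per query is impractical.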

Numerical Results and Implications

- Perplexity Reduction: Retro achieves lower perplexity than standard GPT across various model sizes, indicating that retrieval augmentation improves language-modeling quality.

- Downstream Task Performance: A marked improvement in accuracy on knowledge-intensive benchmarks was observed, highlighting the utility of retrieval-enhanced LMs in tasks that demand access to vast, explicit knowledge.
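
For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood a model assigns to each token, so lower is better. The sketch below computes it from per-token log-probabilities. The probability values are made up purely for illustration and are not results from the paper.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(-(1/N) * sum of natural-log token probabilities)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_negative_log_likelihood = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_negative_log_likelihood)


# Illustrative (invented) probabilities assigned to the same four tokens.
baseline_log_probs = [math.log(p) for p in (0.25, 0.25, 0.25, 0.25)]
retrieval_log_probs = [math.log(p) for p in (0.5, 0.5, 0.5, 0.5)]

print("baseline perplexity:", perplexity(baseline_log_probs))
print("retrieval perplexity:", perplexity(retrieval_log_probs))
```

A model that assigns higher probability to the correct next tokens, for instance because retrieved text contains them, ends up with a lower perplexity on the same data.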

Theoretical and Practical Implications

The findings suggest that pretraining autoregressive LLMs with retrieval could set a new standard for future foundation models. This approach reduces the need for ever-larger parameter counts by offloading some knowledge storage to an external database, and it also provides a way to update models with fresh information without extensive retraining.

- Theoretical Impact: The integration of retrieval mechanisms into LLMs suggests a shift in how knowledge is managed within LMs, balancing between internalized knowledge and external retrieval.

- Practical Impact: This method has potential applications in real-world scenarios where factual accuracy and information update frequency are crucial, such as in legal, medical, and educational domains.
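
The idea of refreshing knowledge without retraining can be sketched as follows: the model stays frozen while passages are added to an external store, and later queries pick up the new evidence. `PassageStore`, its word-overlap scoring, and `build_prompt` are hypothetical stand-ins for a real retrieval index and model input pipeline.

```python
class PassageStore:
    """Hypothetical external datastore; a stand-in for a real retrieval index."""

    def __init__(self):
        self.passages = []

    def add(self, passage):
        """Adding knowledge is an index update, not a training step."""
        self.passages.append(passage)

    def search(self, query, k=1):
        """Return up to k passages that share at least one word with the query."""
        query_terms = set(query.lower().split())
        scored = []
        for position, passage in enumerate(self.passages):
            overlap = len(query_terms & set(passage.lower().split()))
            if overlap > 0:
                scored.append((-overlap, position, passage))
        scored.sort()  # most overlap first, then insertion order
        return [passage for _, _, passage in scored[:k]]


def build_prompt(query, store):
    """Assemble the input a frozen model would see for this query."""
    evidence = store.search(query, k=1)
    context = evidence[0] if evidence else "(no evidence found)"
    return f"Context: {context}\nQuestion: {query}\nAnswer:"


store = PassageStore()
store.add("retro retrieves chunks from a text corpus")
query = "which variant improves open-domain question answering"

print(build_prompt(query, store))  # no matching evidence yet

# New information arrives: only the datastore changes.
store.add("retro++ is a variant that improves open-domain question answering")
print(build_prompt(query, store))  # the prompt now carries the new passage
```

The design choice this illustrates is the separation of parameters from knowledge: the same frozen model produces different, better-grounded inputs as soon as the store is updated.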

Speculation on Future Developments

Looking forward, this research opens avenues to test even larger-scale retrieval-augmented models, exploring how dynamic retrieval updates during generation can further enhance model performance. It suggests the possibility of real-time retrieval augmentation, which could make LLMs more adaptive and contextually aware.

Overall, this paper provides a compelling case for the incorporation of retrieval mechanisms in the pretraining of autoregressive LLMs, illustrating both their current utility and potential for future advancements. The adaptability and resource efficiency offered by such models make them attractive candidates for a wide range of applications, signaling a promising direction for ongoing research in the field of AI and NLP.