- The paper introduces scene controllability by integrating segmentation maps via a modified VQ-VAE to achieve more coherent image generation.

- The method employs specialized loss functions and a transformer with classifier-free guidance to enhance image fidelity and text alignment.

- State-of-the-art results are demonstrated through high-resolution outputs and improved handling of out-of-distribution prompts, setting a new benchmark.

Make-A-Scene: Scene-Based Text-to-Image Generation with Human Priors

Introduction

The paper "Make-A-Scene: Scene-Based Text-to-Image Generation with Human Priors" introduces a novel approach to text-to-image conversion by incorporating scene controllability and domain-specific enhancements. Unlike traditional models that rely solely on text inputs, this method integrates a scene-based control mechanism, which allows for a more structured and coherent generation process, addressing the limitations of existing methods in terms of controllability, human perceptual alignment, and quality.

Methodology

The core of the proposed method lies in augmenting an autoregressive transformer with additional scene tokens derived from segmentation maps. The model encodes these maps using a modified VQ-VAE, dubbed VQ-SEG, enabling implicit conditioning on scene layouts during image generation. By adopting this architecture, the authors introduce a versatile control feature that encompasses:

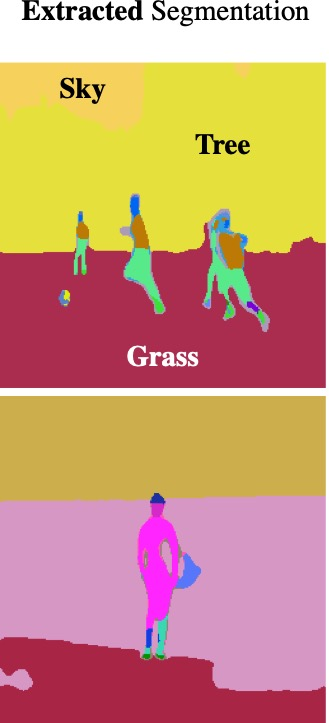

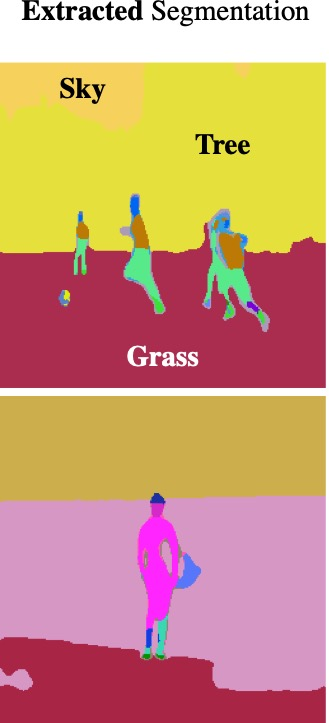

- Scene Editing and Anchoring: Users can edit the scene by manipulating segmentation maps, thereby inducing specific alterations in the generated output (Figure 1).

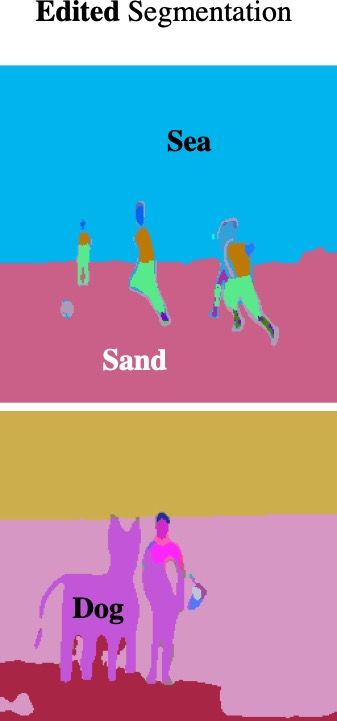

- Overcoming Out-of-Distribution Challenges: Scene sketches help tackle unusual object and scenario generation, previously unattainable by text inputs alone (Figure 2).

- Story Illustration: The method proves effective in illustrating narratives, a capability demonstrated through a storytelling video.

Figure 2: Overcoming out-of-distribution text prompts with scene control. By introducing simple scene sketches (bottom right) as additional inputs, our method is able to overcome unusual objects and scenarios presented as failure cases in previous methods.

Enhancements and Loss Functions

To enhance image fidelity and coherence, particularly in critical regions such as faces, the authors propose several targeted loss functions:

- Face and Object Awareness: Specialized losses like face-aware feature-matching are applied, utilizing pre-trained networks to emphasize regions of human interest, improving the perceptual quality of generated faces and salient objects.

- Object-Aware Vector Quantization: This approach extends the perceptual emphasis to objects, leveraging VGG-based feature-matching for enhanced object representation in the generated images.

The transformer model used in this work benefits from classifier-free guidance, a technique allowing seamless switching between conditional and unconditional sampling streams. This method combines the outputs to refine token predictions, leading to improved text alignment and image quality without the need for post-generation filtering.

Figure 1: Generating images through edited scenes. For an input text (a) and the segmentations extracted from an input image (b), we can re-generate the image (c) or edit the segmentations (d) by replacing classes (top) or adding classes (bottom), generating images with new context or content (e).

Experimental Evaluation

The model achieves state-of-the-art results across various metrics, including FID and human evaluations. A comprehensive ablation paper highlights the incremental benefits of each component, verifying their contributions to the overall performance.

- Quality and Resolution: The proposed model surpasses previous methods by generating high-resolution images at 512×512 pixels, a significant improvement over the common 256×256 resolution boundary.

- Human Preferences: Subjective evaluations consistently favor this method over alternatives like DALL-E and CogView, particularly in terms of image quality, realism, and text alignment.

Conclusion

This research extends the capabilities of text-to-image generation by introducing scene-based control mechanisms and perceptually-aligned enhancements, paving the way for more interactive and practical applications such as storytelling. The integration of domain-specific knowledge, especially regarding human perceptual biases, significantly elevates the model’s output quality, establishing a new benchmark in the field. Future work could explore further practical applications and potential improvements in token space representation, potentially broadening the scope and adaptability of this approach.