Conditional Prompt Learning for Vision-Language Models: An Overview

The paper "Conditional Prompt Learning for Vision-Language Models" by Zhou et al. presents a method for adapting pre-trained vision-language models to downstream tasks. Its primary contribution is Conditional Context Optimization (CoCoOp), an improvement over the earlier Context Optimization (CoOp) method. This essay analyzes the paper's methodology, results, and broader implications for the field.

Background and Motivation

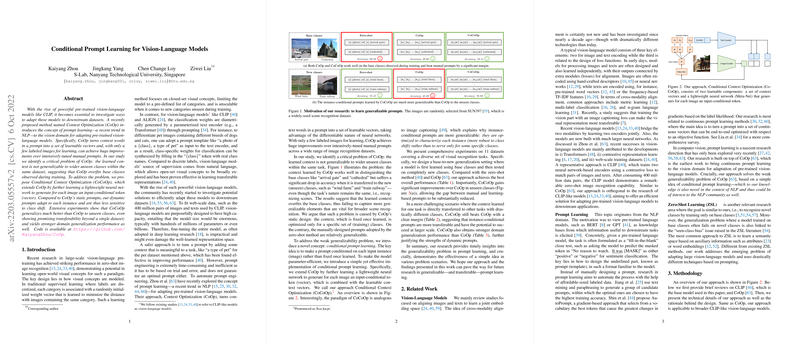

Vision-language models like CLIP and ALIGN have shown remarkable zero-shot performance by training on large datasets of image-text pairs. These models employ a text encoder to generate class-specific vectors from manually designed prompts, such as "a photo of a [class]". While effective, manual prompt engineering is labor-intensive and often suboptimal. CoOp was proposed to automate this process by turning the prompt's context words into learnable vectors. However, CoOp overfits to the base classes seen during training, which limits its generalizability to unseen classes within the same dataset.

Methodology

To address CoOp's limitations, the authors introduce CoCoOp, a novel approach that generates instance-conditional prompts for each input image. CoCoOp incorporates a lightweight neural network referred to as the Meta-Net, which generates an input-conditional token. This token is then combined with learnable context vectors to form the final prompt. This dynamic nature allows CoCoOp to adapt more effectively to class shifts, enhancing its generalizability.
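The conditioning step described above can be illustrated with a small sketch. It is not the authors' implementation: the two-layer bottleneck used for the Meta-Net, the toy dimensions, and the helper names (`meta_net`, `conditional_context`, `init_linear`) are assumptions chosen for illustration, and plain Python lists stand in for tensors.

```python
import random

def linear(x, weight, bias):
    """Apply y = W x + b to one vector x (weight is a list of rows)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def init_linear(rng, in_dim, out_dim, scale=0.1):
    """Random weights and zero biases for a toy linear layer."""
    weight = [[rng.gauss(0.0, scale) for _ in range(in_dim)]
              for _ in range(out_dim)]
    return weight, [0.0] * out_dim

def meta_net(image_feature, params):
    """Map an image feature to one conditional token.

    Assumption: a Linear -> ReLU -> Linear bottleneck; the text above only
    says the Meta-Net is a lightweight neural network.
    """
    (w1, b1), (w2, b2) = params
    return linear(relu(linear(image_feature, w1, b1)), w2, b2)

def conditional_context(context_vectors, image_feature, params):
    """Add the same image-conditional token to every learnable context vector."""
    token = meta_net(image_feature, params)
    return [[v + t for v, t in zip(vec, token)] for vec in context_vectors]

rng = random.Random(0)
feat_dim, hidden_dim, embed_dim, num_ctx = 8, 2, 6, 4  # toy sizes (assumed)
params = (init_linear(rng, feat_dim, hidden_dim),
          init_linear(rng, hidden_dim, embed_dim))
context = [[rng.gauss(0.0, 0.02) for _ in range(embed_dim)]
           for _ in range(num_ctx)]

image_feature = [rng.gauss(0.0, 1.0) for _ in range(feat_dim)]
conditioned = conditional_context(context, image_feature, params)
```

In this sketch the learnable context vectors are shared across all images, while the token produced by `meta_net` changes with each image feature, so the resulting prompt context differs per instance. Appending a class-name embedding to `conditioned` would give the full prompt for that class.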

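Formally, if we denote the Meta-Net by $h_{\bm{\theta}}(\cdot)$, parameterized by $\bm{\theta}$, and the learnable context vectors by $\bm{v}_1, \bm{v}_2, \dots, \bm{v}_M$, the prompt for the $i$-th class is conditioned on the input image $\bm{x}$ as $\bm{t}_i (\bm{x}) =\{\bm{v}_1 (\bm{x}), \bm{v}_2 (\bm{x}), \dots, \bm{v}_M (\bm{x}), \bm{c}_i\}$, where $\bm{c}_i$ is the word embedding of the $i$-th class name. Here, $\bm{v}_m (\bm{x}) = \bm{v}_m + \bm{\pi}$ with $\bm{\pi} = h_{\bm{\theta}} (\bm{x})$ and $m \in \{1, 2, \dots, M\}$. The final prediction is made using a softmax over cosine similarities between the image feature vector and the text features of the class-specific prompts.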
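The prediction rule can be sketched as follows. This is a minimal illustration under stated assumptions: the text features are hard-coded stand-ins for the text encoder's output on each class prompt, the function name `class_probabilities` is hypothetical, and the temperature of 0.01 is an assumed value rather than one taken from the text above.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def class_probabilities(image_feature, text_features, temperature=0.01):
    """Softmax over temperature-scaled cosine similarities.

    text_features[i] stands in for the encoded prompt of class i
    (assumption: the text encoder itself is outside this sketch).
    """
    logits = [cosine(image_feature, t) / temperature for t in text_features]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy example: the image feature is closest in direction to class 0.
image_feature = [1.0, 0.0, 0.0]
text_features = [
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
probs = class_probabilities(image_feature, text_features)
predicted_class = probs.index(max(probs))
```

Because the prompts depend on the image in CoCoOp, the text features would have to be recomputed for every input image, unlike in CoOp, where one set of prompts serves all images.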

Experimental Results

CoCoOp was validated through experiments on 11 diverse datasets, covering generic and fine-grained object classification, scene recognition, and action recognition. In the base-to-new class generalization setting, the learned prompts generalize substantially better, with CoCoOp outperforming CoOp on unseen classes by an average margin of 8.47 percentage points in accuracy. CoCoOp's instance-conditional design also showed promising transferability across different datasets, highlighting its robustness to broader contextual shifts.

When evaluated for domain generalization, using ImageNet as the source and various domain-shifted versions as targets, CoCoOp's performance remained superior to or on par with CoOp and CLIP, suggesting that dynamically adjusting prompts per instance is beneficial for handling out-of-distribution data.

Implications and Future Directions

The implications of this research are multifaceted. Practically, CoCoOp's approach presents a scalable and efficient solution for adapting large-scale pre-trained models to specific downstream tasks without exhaustive manual tuning. This methodology significantly reduces the risk of overfitting to training data, thereby enhancing the model's ability to generalize to new classes and domains.

Theoretically, this work opens intriguing avenues for further exploration in prompt learning. Future research could explore more sophisticated designs for the Meta-Net, larger-scale implementations, and hybrid training datasets combining multiple sources. The design principles established here could also inspire advancements in related fields such as natural language processing, where conditional prompt learning has not yet been fully explored.

In summary, the advancements presented in this paper are a notable step forward for vision-language models. By addressing generalizability issues and proposing a parameter-efficient conditional prompt learning framework, the authors have provided a robust and adaptable approach that promises to foster further developments and applications in AI.