Essay on "PromptKD: Unsupervised Prompt Distillation for Vision-Language Models"

The paper "PromptKD: Unsupervised Prompt Distillation for Vision-Language Models" presents a method for adapting vision-language models (VLMs) such as CLIP through prompt distillation in an unsupervised setting. The approach transfers knowledge from a large teacher model to a lightweight student model: the student learns to imitate the teacher's predictions through learnable prompts, using only unlabeled domain images.

Overview of the Methodology

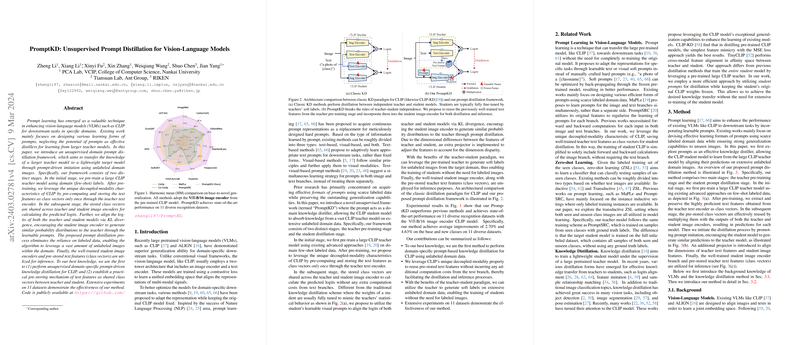

The proposed method, PromptKD, follows a two-stage framework. In the first stage, a large CLIP teacher model is pre-trained on few-shot labeled data from the target domain, which adapts it to domain-specific tasks. The text features produced by the teacher's text encoder are then stored as class vectors. Because these pre-computed text features are shared between the teacher and the student, the text encoder does not have to be run again in the second stage, which keeps distillation efficient.
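The caching idea in stage one can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' code: `precompute_class_vectors` and `toy_teacher_text_encoder` are hypothetical names, and the toy encoder merely stands in for the prompt-tuned teacher text encoder. The point it shows is that the encoder runs once per class, and the normalized results are reused afterwards.

```python
import math
from typing import Callable, Dict, List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a feature vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def precompute_class_vectors(
    class_names: List[str], text_encoder: Callable[[str], Vector]
) -> Dict[str, Vector]:
    """Run the teacher text encoder once per class and cache the normalized features.

    The cached vectors can then be reused for every image during distillation,
    so the text encoder is not executed again.
    """
    return {name: l2_normalize(text_encoder(name)) for name in class_names}


# Hypothetical stand-in for the prompt-tuned teacher text encoder: it derives a
# deterministic, strictly positive 4-d vector from the characters of the class name.
calls = {"n": 0}


def toy_teacher_text_encoder(class_name: str) -> Vector:
    calls["n"] += 1  # count invocations to show the encoder runs once per class
    return [float(sum(ord(c) * (i + 1) for c in class_name) % 97 + 1) for i in range(4)]


class_vectors = precompute_class_vectors(["cat", "dog", "car"], toy_teacher_text_encoder)
print(calls["n"])  # 3
```

In a real setup the cached vectors would be tensors saved to disk after teacher pre-training; a dictionary keyed by class name keeps the sketch dependency-free.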

In the second stage, prompt distillation takes place. The teacher and student image encoders both compute logits against the same shared class vectors. By aligning the student's logits with the teacher's through a KL-divergence loss, the student learns, via its learnable prompts, to reproduce the teacher's outputs. Because this stage uses no ground-truth labels, it can draw on large volumes of unlabeled images; labels are needed only for the teacher's few-shot pre-training.
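The distillation objective can be sketched in plain Python for a single unlabeled image. This is a hedged illustration, not the paper's implementation: feature vectors are tiny lists, the logit scale of 100 follows CLIP's usual convention, the linear projector that maps student features into the teacher's dimension is an assumed design, and all helper names (`project`, `logits`, `kl_teacher_student`) are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def l2_normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def project(features: Vector, weight: Matrix) -> Vector:
    """Assumed linear projector: maps student features to the teacher's dimension."""
    return [sum(w * f for w, f in zip(row, features)) for row in weight]


def logits(image_feat: Vector, class_vectors: List[Vector], scale: float = 100.0) -> Vector:
    """Cosine-similarity logits against the shared, pre-computed class vectors.

    scale=100 mirrors CLIP's usual logit scale (an assumption in this sketch).
    """
    img = l2_normalize(image_feat)
    return [scale * sum(a * b for a, b in zip(img, c)) for c in class_vectors]


def log_softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    lse = m + math.log(sum(math.exp(x - m) for x in z))
    return [x - lse for x in z]


def kl_teacher_student(t_logits: Vector, s_logits: Vector) -> float:
    """KL(p_teacher || p_student); no ground-truth label appears anywhere."""
    log_p = log_softmax(t_logits)
    log_q = log_softmax(s_logits)
    return sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))


# Toy setup: 3 classes, teacher features are 3-d, student features are 2-d.
class_vectors = [l2_normalize(v) for v in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0])]
W = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # hypothetical projector weights

teacher_feat = [0.9, 0.1, 0.0]  # teacher image feature for one unlabeled image
t_logits = logits(teacher_feat, class_vectors)

# A student whose projected feature disagrees with the teacher incurs a positive loss;
# one that reproduces the teacher's feature incurs (numerically) zero loss.
loss_far = kl_teacher_student(t_logits, logits(project([0.5, 0.5], W), class_vectors))
loss_match = kl_teacher_student(t_logits, logits(project([0.9, 0.1], W), class_vectors))
```

In training, gradients of this loss would flow only into the student's prompts and projector, while the teacher and the cached class vectors stay fixed.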

Results and Implications

Extensive experiments on 11 diverse datasets show that PromptKD achieves state-of-the-art performance, outperforming contemporaneous methods on base-to-novel generalization. Specifically, it reports average improvements of 2.70% on base classes and 4.63% on novel classes over previously reported results. The framework builds on the architecture and large-scale pre-training of VLMs such as CLIP, and it relies on learnable soft prompts to adapt the model to domain-specific knowledge.
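Base-to-novel results are conventionally summarized by the harmonic mean (HM) of base and novel accuracy, which rewards methods that improve both rather than trading one for the other. The helper below is a hypothetical illustration of that arithmetic; the accuracies passed to it are made-up numbers, not results from the paper.

```python
def harmonic_mean(base_acc: float, novel_acc: float) -> float:
    """Harmonic mean of base and novel accuracy (in percent).

    Unlike the arithmetic mean, it drops sharply when one of the two is low.
    """
    if base_acc + novel_acc == 0:
        return 0.0
    return 2 * base_acc * novel_acc / (base_acc + novel_acc)


# Hypothetical accuracies, chosen only to illustrate the metric:
balanced = harmonic_mean(80.0, 80.0)  # 80.0
lopsided = harmonic_mean(95.0, 65.0)  # 77.1875, although the arithmetic mean is also 80.0
```

Both pairs average to 80, but the lopsided pair scores lower, which is why gains on novel classes weigh heavily in this kind of benchmark.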

PromptKD has notable implications for vision-language research. By removing the need for labels during distillation, the framework eases the constraints imposed by limited annotated datasets and makes VLMs easier to adapt and scale. This is particularly valuable where labeled data is difficult or costly to obtain.

Speculation on Future Developments

Prompt-based distillation opens several avenues for further work. Future research might explore richer combinations of textual and visual prompts, particularly given CLIP's decoupled-modality design, in which the image and text encoders are separate. Further tuning of the projector design or of distillation hyperparameters for specific datasets and tasks could also yield performance gains.
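One distillation hyperparameter commonly tuned in logit-based knowledge distillation is the softmax temperature. This is a general distillation knob assumed here for illustration, not a setting taken from the paper. The sketch below, using hypothetical teacher logits, shows how raising the temperature softens the teacher's distribution (higher entropy), exposing more of its relative preferences among non-top classes to the student.

```python
import math
from typing import List


def softmax(z: List[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax; larger temperatures give flatter distributions."""
    scaled = [x / temperature for x in z]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(p: List[float]) -> float:
    """Shannon entropy in nats."""
    return -sum(x * math.log(x) for x in p if x > 0)


teacher_logits = [4.0, 2.0, 1.0]  # hypothetical teacher logits for one image
temperatures = (0.5, 1.0, 2.0, 4.0)
entropies = [entropy(softmax(teacher_logits, t)) for t in temperatures]

for t, h in zip(temperatures, entropies):
    print(f"T={t}: entropy={h:.3f}")
```

The best temperature typically depends on the dataset and on how confident the teacher is, which is one reason per-dataset tuning could matter.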

Another direction is to explore larger models or alternative architectures, taking the ViT-B/16 and ViT-L/14 backbones used in the paper as a starting point. Studying how these architectural choices affect the distilled representations could clarify how prompt learning behaves across model scales.
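Some of the scale differences between these two backbones can be made concrete with simple arithmetic. The sketch assumes the public OpenAI CLIP checkpoints (joint embedding dimension 512 for ViT-B/16 and 768 for ViT-L/14) at a 224×224 input resolution, and it assumes a single linear projector bridging student and teacher features; `ClipVisionConfig` and the helper functions are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClipVisionConfig:
    name: str
    patch_size: int
    embed_dim: int  # dimension of the joint image-text embedding space


def num_patch_tokens(cfg: ClipVisionConfig, image_size: int = 224) -> int:
    """Patch tokens for a square input (the class token is not counted)."""
    side = image_size // cfg.patch_size
    return side * side


def linear_projector_params(in_dim: int, out_dim: int) -> int:
    """Weights plus bias of one linear layer (an assumed projector design)."""
    return in_dim * out_dim + out_dim


student = ClipVisionConfig("ViT-B/16", patch_size=16, embed_dim=512)
teacher = ClipVisionConfig("ViT-L/14", patch_size=14, embed_dim=768)

print(num_patch_tokens(student), num_patch_tokens(teacher))  # 196 256
print(linear_projector_params(student.embed_dim, teacher.embed_dim))  # 393984
```

The teacher processes more tokens with a wider backbone, while a linear bridge between the two embedding spaces adds only a few hundred thousand parameters to the student.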

Conclusion

PromptKD introduces an unsupervised prompt distillation framework that addresses key limitations in adapting VLMs, improving both efficiency and performance. Its use of shared class vectors and prompt-driven knowledge transfer has yielded measurable improvements across the evaluated benchmarks. As the field progresses, the ideas in this paper could spur further work in vision-language processing and beyond.