FLAVA: A Foundational Language And Vision Alignment Model

The paper introduces FLAVA, a foundational model designed to handle vision tasks, language tasks, and tasks that combine the two. Its main innovation is a single unified architecture that processes unimodal and multimodal data jointly, addressing a limitation of existing models, which typically focus on specific modalities.

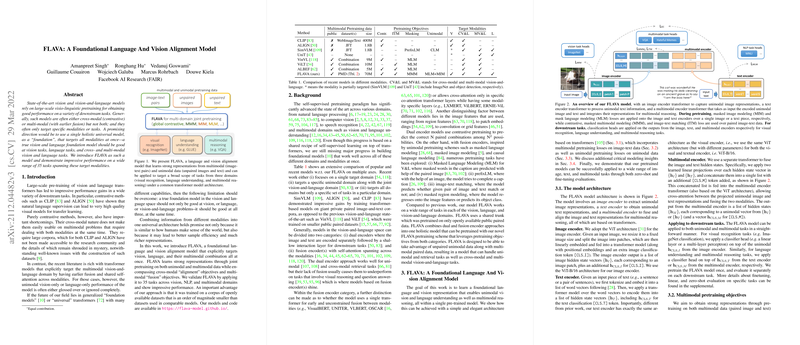

Approach and Architecture

The model uses a transformer-based architecture with three components: an image encoder, a text encoder, and a multimodal encoder. The image and text encoders each process their own input, and the multimodal encoder fuses their outputs for tasks that require joint understanding: the unimodal hidden states are linearly projected, concatenated, and prefixed with an additional [CLS_M] token. All three transformers share the same configuration, including a common hidden size (768 in the ViT-B/16-based model), so every modality is represented in the same form.
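The fusion step can be sketched in plain Python. This is a minimal illustration under stated assumptions, not FLAVA's implementation: the helpers `linear` and `build_multimodal_input` are hypothetical, lists stand in for tensors, the toy hidden size is 2 instead of 768, and the `[CLS_M]` vector (learned in the real model) is passed in as a fixed value. It shows only how the multimodal encoder's input sequence is assembled: each image and text hidden state is linearly projected, and the results are concatenated behind a `[CLS_M]` vector.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]          # one row per output dimension
Projection = Tuple[Matrix, Vector]  # (weight, bias)


def linear(weight: Matrix, bias: Vector, x: Vector) -> Vector:
    """Compute W x + b for a single hidden-state vector."""
    return [sum(w * xj for w, xj in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def build_multimodal_input(image_states: List[Vector],
                           text_states: List[Vector],
                           img_proj: Projection,
                           txt_proj: Projection,
                           cls_m: Vector) -> List[Vector]:
    """Project every unimodal hidden state, then concatenate them behind a
    [CLS_M] vector to form the multimodal encoder's input sequence."""
    projected_img = [linear(*img_proj, h) for h in image_states]
    projected_txt = [linear(*txt_proj, h) for h in text_states]
    return [cls_m] + projected_img + projected_txt


# Toy example: hidden size 2 and identity projections.
identity: Projection = ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
seq = build_multimodal_input(
    image_states=[[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]],
    text_states=[[1.0, 1.0], [2.0, 2.0]],
    img_proj=identity,
    txt_proj=identity,
    cls_m=[0.0, 0.0],
)
print(len(seq))  # 6 = 1 [CLS_M] + 3 image states + 2 text states
```

Keeping the image and text encoders separate from the fusion step means each can still be used on its own for unimodal tasks, while the multimodal encoder is only needed when both inputs are present.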

Pretraining Strategies

FLAVA employs a composite pretraining strategy to enhance its ability to generalize across tasks:

- Global Contrastive Loss: This loss aligns image and text representations by pulling matched image-text pairs together and pushing mismatched pairs apart. The key distinction is that FLAVA's contrastive loss is global: embeddings are gathered from all GPUs, and gradients are back-propagated through all of the gathered embeddings rather than only through each GPU's local ones, which the authors found improves the learned representations.

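- Masked Multimodal Modelling (MMM): Parts of both the text tokens and the image patches of a paired input are masked, and the model predicts the masked content from the multimodal encoder's output, so each prediction can draw on both modalities. Masked image content is predicted as discrete visual tokens from a pretrained image tokenizer.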

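- Unimodal Objectives: Standard masked modeling is applied separately to each modality (masked language modeling on text-only data and masked image modeling on image-only data), which lets FLAVA learn from unimodal datasets as well as from paired data.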
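The global contrastive objective can be sketched as a symmetric InfoNCE loss computed over the embeddings of every device at once. This is a hedged, forward-only sketch in plain Python: the per-device batches are simply concatenated to simulate an all-gather, the fixed temperature of 0.07 is an assumption for illustration, and all function names are hypothetical. Plain Python has no autograd, so the sketch cannot show FLAVA's specific choice of back-propagating through all gathered embeddings; in a deep learning framework that corresponds to gathering embeddings with an autograd-aware all-gather instead of one that detaches them.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (assumes it is non-zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def log_softmax_at(logits: List[float], index: int) -> float:
    """Numerically stable log-softmax of logits[index]."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return logits[index] - log_z


def global_contrastive_loss(image_batches: List[List[Vector]],
                            text_batches: List[List[Vector]],
                            temperature: float = 0.07) -> float:
    """Symmetric InfoNCE over the embeddings of all devices.

    image_batches[d][i] and text_batches[d][i] form a matched pair on device d.
    """
    # Simulated all-gather: flatten the per-device batches into one global batch.
    images = [l2_normalize(v) for batch in image_batches for v in batch]
    texts = [l2_normalize(v) for batch in text_batches for v in batch]
    n = len(images)
    # logits[i][j]: scaled cosine similarity of image i and text j.
    logits = [[dot(images[i], texts[j]) / temperature for j in range(n)]
              for i in range(n)]
    # Image-to-text: each row should score its own text (column i) highest.
    loss_i2t = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    # Text-to-image: each column should score its own image (row j) highest.
    loss_t2i = -sum(log_softmax_at([logits[i][j] for i in range(n)], j)
                    for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


# Two simulated devices with two matched pairs each (toy 4-d embeddings).
e = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
aligned = global_contrastive_loss([[e[0], e[1]], [e[2], e[3]]],
                                  [[e[0], e[1]], [e[2], e[3]]])
swapped = global_contrastive_loss([[e[0], e[1]], [e[2], e[3]]],
                                  [[e[1], e[0]], [e[2], e[3]]])
print(aligned < swapped)  # True
```

With a single pair per device, a purely local loss has nothing to contrast against (`global_contrastive_loss([[e[0]]], [[e[0]]])` is exactly 0.0). Gathering across devices is what lets every image be scored against every text in the global batch.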

Dataset Utilization

FLAVA is trained on a collection of publicly accessible datasets, aggregating 70 million image-text pairs. Relying only on data available to all researchers helps democratize the foundation model approach, in contrast to models like CLIP, which was trained on 400 million image-text pairs from a proprietary dataset.

Performance and Comparisons

Evaluation of FLAVA spans 35 tasks covering vision, natural language, and multimodal benchmarks. The experiments demonstrate:

- Vision Tasks: Linear probe evaluations, which train a linear classifier on frozen image features, show competitive performance across several standard computer vision datasets.

- Language Understanding: Fine-tuned on the NLP tasks of the GLUE benchmark, the model performs comparably to well-established pretrained language models.

- Multimodal Reasoning: FLAVA achieves strong results on multimodal tasks such as VQAv2 (after fine-tuning) and on image-text retrieval benchmarks, suggesting robust cross-modal capabilities.
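Image-text retrieval benchmarks are usually reported as Recall@K: the fraction of queries whose correct match appears among the K highest-scoring candidates. The sketch below is a simplification and an assumption: `recall_at_k` is a hypothetical helper that treats candidate `i` as the only correct match for query `i`, whereas real benchmarks such as COCO pair each image with several captions.

```python
from typing import List


def recall_at_k(similarity: List[List[float]], k: int) -> float:
    """Fraction of queries whose correct candidate ranks in the top k.

    similarity[i][j] scores query i against candidate j; candidate i is
    assumed to be the single correct match for query i.
    """
    hits = 0
    for i, row in enumerate(similarity):
        # Candidate indices sorted from highest to lowest similarity.
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        hits += int(i in ranked[:k])
    return hits / len(similarity)


# Three queries (e.g. images) scored against three candidates (e.g. captions).
sims = [[0.9, 0.1, 0.3],
        [0.2, 0.4, 0.8],
        [0.1, 0.7, 0.6]]
print(recall_at_k(sims, 1))  # 0.3333333333333333
print(recall_at_k(sims, 2))  # 1.0
```

Only the first query ranks its match first, so R@1 is 1/3; the other two matches come second, so R@2 reaches 1.0.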

Despite being trained on substantially less data, FLAVA outperforms comparable models pretrained on similar public datasets and approaches models trained on larger proprietary datasets. This is notable because a single, general pretraining recipe serves all of these divergent tasks.

Implications and Future Directions

FLAVA's results suggest that significant advances in vision-language models can be achieved without proprietary data, pointing to a future where open and accessible foundation models provide robust cross-modal capabilities. Future work could scale the dataset further to study the impact on performance and generalization, and refine the architecture to better capture nuances of joint multimodal understanding.

Overall, FLAVA exemplifies a shift towards more open, flexible, and versatile foundational models capable of addressing a variety of tasks under a unified framework, paving the way for broader accessibility and research reproducibility in multimodal AI.