OFA: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

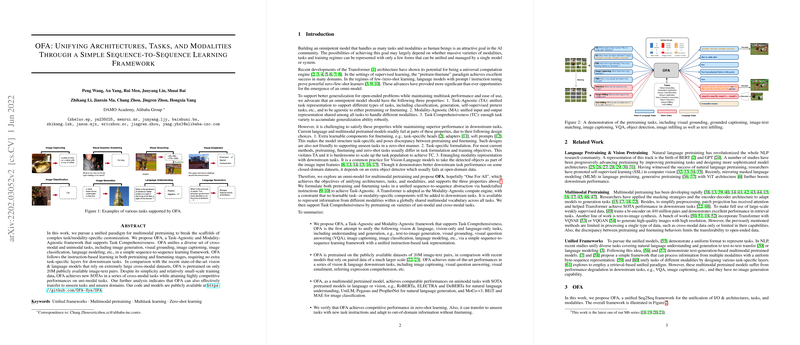

The research paper "OFA: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework" presents a Task-Agnostic and Modality-Agnostic framework aligned with Task Comprehensiveness, termed as OFA. Developed by a team at DAMO Academy, Alibaba Group, this framework aims to consolidate various cross-modal and unimodal tasks, including image generation, visual grounding, image captioning, image classification, and LLMing, into a unified sequence-to-sequence (Seq2Seq) learning paradigm.

Abstract

The proposed OFA framework seeks to address the limitations inherent in task/modality-specific customizations. By utilizing an instruction-based learning strategy in both pretraining and finetuning phases, OFA obviates the need for additional task-specific layers. Despite leveraging a relatively small dataset of approximately 20 million image-text pairs for pretraining, OFA establishes new state-of-the-art (SOTA) results across several cross-modal tasks. Furthermore, the framework demonstrates effective transferability to unseen tasks and domains, thus highlighting its potential versatility. The code and models introduced in this research are accessible at the provided GitHub repository.

Key Components and Contributions

- Unified Framework:

- Task-Agnostic (TA): OFA supports various task types, including classification and generation, without specificity to either pretraining or finetuning.

- Modality-Agnostic (MA): A shared input and output representation enables handling different data modalities.

- Task Comprehensiveness (TC): The framework pretrains on a diverse array of tasks to ensure robust generalization capabilities.

- Architecture and Pretraining:

- OFA employs a Transformer-based encoder-decoder architecture, initialized with weights from BART to represent and process multimodal data using a unified sequence-to-sequence approach.

- Pretraining incorporates a variety of vision {content} language, vision-only, and language-only datasets. An additional emphasis on tasks, such as image infilling and object detection, augments the framework's versatility.

- Results and Analysis:

- OFA achieves superior performance in multiple benchmarks such as VQAv2, SNLI-VE, MSCOCO Image Caption, and referring expression comprehension datasets.

- The framework also compares favorably with other SOTA models for unimodal tasks, performing competitively on the GLUE benchmark for natural language understanding and the ImageNet-1K for image classification.

- For zero-shot learning, OFA exhibits competitive performance although its efficacy is highly sensitive to instruction design.

Empirical Evaluation

Cross-modal Tasks:

- On VQAv2, OFA reaches an Acc of 82.0 on the test-std set, surpassing previous models pretrained on significantly larger datasets.

- For image captioning on the MSCOCO dataset, OFA registers a CIDEr score of 154.9 after CIDEr optimization, demonstrating its generation capabilities.

- RefCOCO, RefCOCO+, and RefCOCOg evaluations reveal that OFA excels in visual grounding, achieving accuracies of 94.03%, 91.70%, and 88.78% on respective test sets.

Unimodal Tasks:

- On the GLUE benchmark, OFA consistently outperforms existing multimodal models, closely approaching the results of language-specific pretrained models like RoBERTa and DeBERTa.

- Image classification tasks yield a Top-1 Accuracy of 85.6% on the ImageNet-1K dataset, competitive with contemporary architectures like BEiT and MAE.

Zero-shot Learning and Task Transfer:

- Zero-shot evaluations on the GLUE benchmark verify that OFA retains robust performance across unseen tasks.

- Case studies on unseen domains illustrate OFA's ability to transfer capabilities to new tasks like grounded question answering, and visual grounding on synthetic images.

Implications and Future Directions

The introduction of OFA underscores significant advancements in consolidating manifold AI tasks and modalities within a unified framework. The primary advantage of OFA lies in its simplicity and versatility. This paradigm shift could eliminate the need for bespoke models tuned to specific tasks or domains, thereby streamlining the development and deployment of multimodal AI systems. Future research could delve into optimizing the instruction design mechanisms within OFA to enhance its zero-shot learning stability and efficiency. Further scaling of the dataset could also bolster the model's generalization and transfer capabilities.

Conclusion

The OFA framework represents a significant stride towards achieving a universal AI model capable of handling an extensive range of tasks and modalities through a unified Seq2Seq framework. By demonstrating robust performances across various benchmarks and datasets, OFA paves the way for future research focused on broadening the scope of multimodal models and fine-tuning their transferability and efficiency. The availability of OFA's code and models further invites the research community to extend and build upon this pioneering work.