Swin Transformer V2: Scaling Up Capacity and Resolution

In "Swin Transformer V2: Scaling Up Capacity and Resolution," Ze Liu et al. address three obstacles to scaling vision models: training instability, the resolution gap between pre-training and fine-tuning, and the demand for vast labeled datasets. The paper proposes one remedy for each: a residual-post-norm configuration combined with scaled cosine attention to improve training stability, a log-spaced continuous relative position bias (Log-CPB) to transfer models across resolutions, and a self-supervised pre-training method, SimMIM, to reduce dependence on labeled data.

Contributions and Innovations

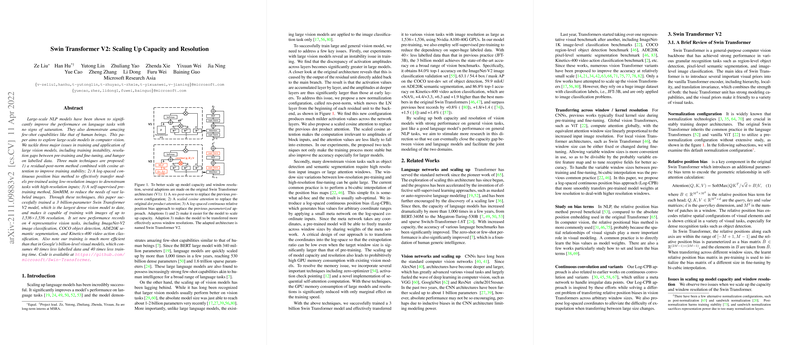

- Residual-Post-Norm and Cosine Attention: The residual-post-norm technique is introduced to mitigate training instability, which becomes more pronounced as model size increases. Each residual block's output is normalized before it is merged back into the main branch, so activation amplitudes do not accumulate excessively at deeper layers. Scaled cosine attention complements this by replacing dot-product attention: attention logits are computed from the cosine similarity between queries and keys, divided by a learnable temperature, which makes them insensitive to the magnitude of the block inputs. Together, the two changes stabilize training and improve accuracy, with the largest gains in larger models.

- Log-Spaced Continuous Relative Position Bias (Log-CPB): To transfer models pre-trained on low-resolution images to tasks that require high-resolution inputs, the authors propose Log-CPB. Rather than learning the bias values directly as parameters, this method uses a small meta network that generates a bias value for any relative coordinate, so it adapts smoothly to different window sizes. The relative coordinates are first transformed into log-space, which shrinks the range the network must extrapolate over when the window size grows and helps preserve accuracy when scaling up resolution.

- SimMIM for Self-Supervised Pre-Training: To address the hunger of large models for labeled data, the paper uses SimMIM, a self-supervised pre-training approach based on masked image modeling. This allowed the authors to train a 3 billion-parameter model with far fewer labeled images; the paper reports about 40 times less labeled data than Google's billion-parameter vision models.
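
The first two mechanisms above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names are hypothetical, the layer normalization omits learnable gain and bias, and the temperature `tau` is a fixed number here, whereas in the paper it is a learnable scalar per head and layer that is kept above 0.01. The coordinate transform follows the paper's sign(Δx)·log(1 + |Δx|) form; the meta network that maps coordinates to bias values is left out.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (no learnable gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def res_post_norm(x, sublayer):
    """Residual-post-norm: normalize the sublayer output, then add it to the main branch."""
    return [a + b for a, b in zip(x, layer_norm(sublayer(x)))]


def cosine_similarity(q, k, eps=1e-12):
    """Cosine of the angle between two vectors; independent of their magnitudes."""
    dot = sum(a * b for a, b in zip(q, k))
    norm_q = math.sqrt(sum(a * a for a in q))
    norm_k = math.sqrt(sum(b * b for b in k))
    return dot / max(norm_q * norm_k, eps)


def scaled_cosine_logit(q, k, tau=0.1, bias=0.0):
    """Attention logit: cos(q, k) / tau + relative position bias."""
    tau = max(tau, 0.01)  # assumption: clamp mirrors the paper's lower bound on tau
    return cosine_similarity(q, k) / tau + bias


def log_spaced(delta):
    """Map a relative coordinate to log-space: sign(delta) * log(1 + |delta|)."""
    return math.copysign(math.log1p(abs(delta)), delta)


def extrapolation_ratio(pretrain_window, finetune_window, transform=lambda d: d):
    """Extra coordinate range needed at fine-tuning, relative to the pre-training range."""
    old = transform(pretrain_window - 1)  # largest offset in a window of size M is M - 1
    new = transform(finetune_window - 1)
    return (new - old) / old


if __name__ == "__main__":
    print(extrapolation_ratio(8, 16))              # linear coordinates
    print(extrapolation_ratio(8, 16, log_spaced))  # log-spaced coordinates
```

Under these assumptions, moving from an 8×8 to a 16×16 window gives an extrapolation ratio of about 1.14 with linear coordinates and about 0.33 with log-spaced ones, which is the reduction that motivates the log transform. Likewise, `scaled_cosine_logit` returns the same value when a query or key is rescaled, and `res_post_norm` bounds the size of the update added to the main branch regardless of how large the sublayer output is.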

Empirical Performance

The paper provides extensive empirical evidence demonstrating the efficacy of these innovations. The Swin Transformer V2, scaling up to 3 billion parameters, establishes new performance benchmarks across multiple tasks:

- Image Classification: The model achieves 84.0% top-1 accuracy on ImageNet-V2, surpassing the previous best despite less extensive pre-training than models such as ViT-G and CoAtNet-7.

- Object Detection: On COCO object detection, the Swin V2-G model achieves 63.1/54.4 box/mask AP, significantly higher than prior state-of-the-art models.

- Semantic Segmentation: With 59.9 mIoU on ADE20K, the Swin V2-G sets a new benchmark on this pixel-level prediction task.

- Video Action Classification: On Kinetics-400, the model achieves 86.8% top-1 accuracy, highlighting its efficacy in video recognition tasks.

Implications and Future Directions

The advancements presented in "Swin Transformer V2" have substantial implications for the future of computer vision models. The proposed techniques not only improve the current performance of vision models but also pave the way for more scalable, stable, and adaptable architectures. By narrowing the capacity gap between vision models and language models, the work also points to future multi-modal AI models capable of handling a wider range of tasks.

Practically, the improvements in training stability and resolution scaling mean that deployable vision models can be more robust and cost-effective, especially in environments where computational resources and labeled data are limited. The use of self-supervised learning techniques like SimMIM points towards a future where the dependency on large labeled datasets is minimized, fostering the development of more generalized and accessible AI solutions.

In conclusion, the Swin Transformer V2 represents a significant step forward in scaling vision models: it addresses fundamental challenges and sets new performance benchmarks. The methodologies and results discussed in this paper will likely inspire further research and development in both academic and industrial contexts, contributing to the evolution of more sophisticated and unified AI systems.