CSWin Transformer: A General Vision Transformer Backbone with Cross-Shaped Windows

The paper introduces the CSWin Transformer, a vision transformer backbone designed to be both efficient and effective across general vision tasks. Its core innovation is the Cross-Shaped Window (CSWin) self-attention mechanism, which computes self-attention in horizontal and vertical stripes in parallel. This addresses two problems at once: the high computational cost of global self-attention and the limited attention area of conventional windowed self-attention.

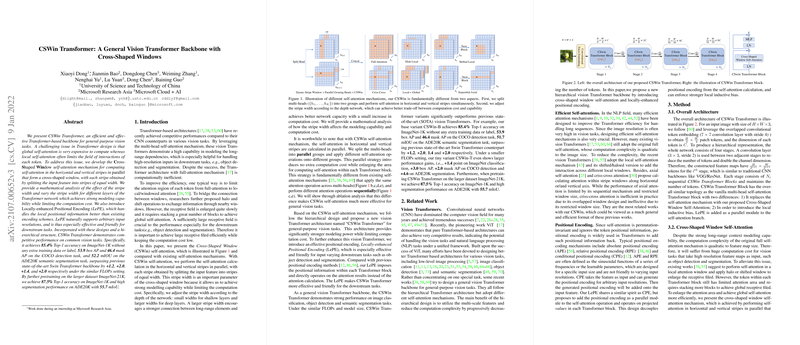

Cross-Shaped Window Self-Attention

The CSWin self-attention mechanism reduces the cost of full (global) self-attention by restricting each token's attention to a horizontal or a vertical stripe of the feature map. The two stripe orientations are processed in parallel:

- Parallel Multi-Head Grouping: The attention heads are split into two groups. The first group performs self-attention within horizontal stripes, and the second group performs self-attention within vertical stripes. Taken together, the two groups give each token a cross-shaped attention area within a single Transformer block, which is larger than what earlier methods obtain by applying one attention pattern per block and alternating patterns across blocks.

- Depth-Dependent Stripe Widths: The stripe width, measured in tokens across the short side of each stripe, is set according to network depth rather than kept constant. Small widths are used in the shallow layers, where the feature maps are large, and larger widths are used in the deeper layers, trading modeling capability against computational cost.
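
The two points above can be made concrete with a small index-level sketch. It does not compute attention; it only enumerates which token positions a query can attend to in each head group and how that area grows with the stripe width. The function names (`head_groups`, `horizontal_stripe`, `vertical_stripe`, `cross_shaped_area`) and the 8x8 grid are hypothetical and chosen for illustration; clipping the last stripe at the grid boundary is likewise an assumption of this sketch.

```python
def head_groups(num_heads):
    """Split head indices into a horizontal group and a vertical group."""
    if num_heads % 2:
        raise ValueError("this sketch assumes an even number of heads")
    half = num_heads // 2
    return list(range(half)), list(range(half, num_heads))


def horizontal_stripe(row, height, width, sw):
    """Positions in the horizontal stripe (sw rows, full width) containing `row`."""
    top = (row // sw) * sw
    bottom = min(top + sw, height)  # assumption: clip a ragged last stripe
    return {(r, c) for r in range(top, bottom) for c in range(width)}


def vertical_stripe(col, height, width, sw):
    """Positions in the vertical stripe (full height, sw columns) containing `col`."""
    left = (col // sw) * sw
    right = min(left + sw, width)  # assumption: clip a ragged last stripe
    return {(r, c) for r in range(height) for c in range(left, right)}


def cross_shaped_area(row, col, height, width, sw):
    """Union of both stripes: the area covered jointly by the two head groups."""
    return (horizontal_stripe(row, height, width, sw)
            | vertical_stripe(col, height, width, sw))


if __name__ == "__main__":
    H = W = 8
    horizontal_heads, vertical_heads = head_groups(4)
    print("horizontal heads:", horizontal_heads, "vertical heads:", vertical_heads)
    for sw in (1, 2, 4):
        area = cross_shaped_area(3, 5, H, W, sw)
        # Compare the cross-shaped area with the H * W positions of global attention.
        print(f"sw={sw}: {len(area)} of {H * W} positions")
```

On this toy grid, the token at row 3, column 5 covers 15, 28, and 48 of the 64 positions for stripe widths 1, 2, and 4. Each head still attends to only one stripe (`sw * W` or `sw * H` positions), which is where the saving over global attention comes from.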

Locally-Enhanced Positional Encoding (LePE)

The authors also introduce a novel Locally-Enhanced Positional Encoding (LePE) scheme that enhances local positional information more effectively than existing positional encoding methods:

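- Local Awareness: Traditional absolute and relative positional encodings inject position information into the input tokens or into the attention computation. LePE instead runs as a module parallel to self-attention: it operates directly on the projected values (V) within each Transformer block, and its output is added to the attention output.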

- Resolution Adaptability: LePE naturally supports arbitrary input resolutions, making it especially suitable for downstream tasks such as object detection and segmentation.
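
In formula form, the LePE-augmented attention can be sketched as below. The notation is an assumption of this summary and may differ in detail from the paper's: $Q$, $K$, and $V$ are the projected queries, keys, and values, $d$ is the per-head channel dimension, and $\mathrm{DWConv}$ denotes a depth-wise convolution applied to $V$ arranged on the 2D token grid.

$$
\mathrm{Attention}(Q, K, V) = \mathrm{SoftMax}\!\left(\frac{Q K^{\top}}{\sqrt{d}}\right) V + \mathrm{DWConv}(V)
$$

Because the positional term comes from a small, fixed-size convolution kernel rather than from a table indexed by absolute position or by window size, nothing in it depends on the input resolution, which is consistent with the adaptability noted above.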

Empirical Evaluation

The paper reports consistent gains over the Swin Transformer across several computer vision tasks:

- Image Classification: On ImageNet-1K, the CSWin base model (CSWin-B) achieves a Top-1 accuracy of 85.4%, surpassing the previous state-of-the-art Swin Transformer by 1.2 percentage points. With further pretraining on ImageNet-21K followed by fine-tuning on ImageNet-1K, the paper reports a Top-1 accuracy of 87.5%.

- Object Detection and Instance Segmentation: Using Cascade Mask R-CNN on COCO, CSWin-B attains 53.9 box AP and 46.4 mask AP, surpassing the Swin Transformer by 2.0 box AP and 1.4 mask AP.

- Semantic Segmentation: On ADE20K, CSWin-B reaches 52.2 mIoU, outperforming Swin Transformer by 2.0 points.

Implications and Future Directions

CSWin self-attention enlarges each token's attention area without a matching increase in computation, which matters for applications ranging from image classification to dense prediction tasks such as object detection and segmentation. The hierarchical structure and depth-dependent stripe width suggest that CSWin Transformers can be scaled up to larger and more complex datasets.

Future research could further optimize the CSWin Transformer architecture, for example through network pruning, quantization, or combination with other types of neural architectures. Extending the CSWin model to video understanding, 3D vision, or broader multimodal applications is another promising direction.

In conclusion, the CSWin Transformer offers a scalable, efficient, and high-performing backbone for a variety of vision tasks. Its two main contributions, the CSWin self-attention mechanism and LePE, provide a basis for further work on vision transformer design.