A Contrastive Framework for Self-Supervised Sentence Representation Transfer

The paper presents ConSERT, a framework that improves BERT-derived sentence representations through unsupervised contrastive learning. The authors target the collapse of BERT's sentence representations, which hurts performance on semantic textual similarity (STS) tasks. Using only unlabeled text, ConSERT fine-tunes the sentence embeddings and reports significant improvements on downstream tasks.

Problem and Motivation

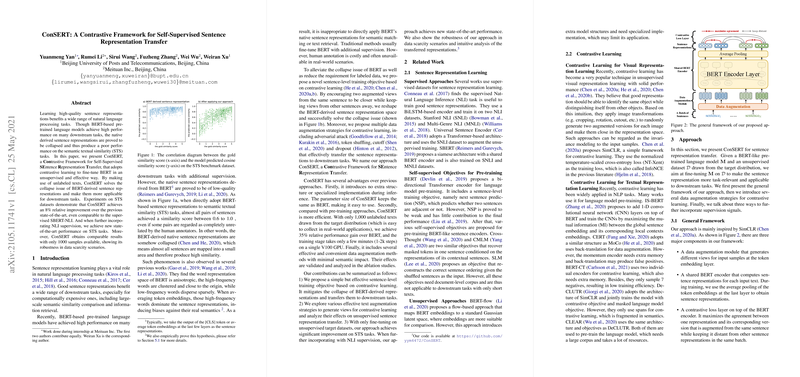

BERT-based models, while successful across many NLP tasks, suffer from a collapsed sentence representation space: most sentences are mapped into a small region, so nearly every pair receives a high similarity score, and high-frequency tokens disproportionately influence the resulting embeddings. This limits their usefulness in tasks that require fine-grained semantic similarity judgments. The usual remedy is supervised fine-tuning, which depends on costly labeled data. ConSERT offers a self-supervised alternative that reshapes the representation space instead.

Approach

ConSERT employs a contrastive learning objective that pulls together the representations of semantically similar sentences and pushes apart those of dissimilar ones. It introduces four data augmentation strategies (adversarial attack, token shuffling, cutoff, and dropout) to create different views of the same sentence. The augmentations are applied only during training, so they enrich the learned representations without adding any structure or computation at inference.
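The idea of contrasting two augmented views of each sentence can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the augmentation rates, and the temperature of 0.1 are all hypothetical choices, and the loss shown is NT-Xent, a common contrastive objective. There is no encoder here (the token embeddings are mean-pooled directly), and the adversarial attack is omitted because it needs model gradients.

```python
import math
import random


def token_shuffle(rows, rng):
    """Return the same token rows in a random order."""
    shuffled = list(rows)
    rng.shuffle(shuffled)
    return shuffled


def token_cutoff(embeddings, rng, rate=0.15):
    """Zero out whole token rows of a [seq_len][dim] embedding matrix."""
    return [[0.0] * len(row) if rng.random() < rate else list(row)
            for row in embeddings]


def dropout(embeddings, rng, rate=0.1):
    """Zero individual elements independently (no rescaling, for brevity)."""
    return [[0.0 if rng.random() < rate else x for x in row]
            for row in embeddings]


def mean_pool(embeddings):
    """Average token embeddings into a single sentence vector."""
    n = len(embeddings)
    return [sum(col) / n for col in zip(*embeddings)]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def nt_xent_loss(views_a, views_b, temperature=0.1):
    """NT-Xent loss: views_a[i] and views_b[i] are two views of sentence i.

    Every other vector in the batch acts as a negative for sentence i.
    """
    reps = list(views_a) + list(views_b)
    n = len(views_a)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same sentence
        logits = [cosine(reps[i], reps[k]) / temperature
                  for k in range(2 * n) if k != i]
        peak = max(logits)  # subtract the max for a stable log-sum-exp
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_denominator - cosine(reps[i], reps[positive]) / temperature
    return total / (2 * n)


# Toy batch: 4 "sentences", each with 5 random 8-dimensional token embeddings.
rng = random.Random(0)
batch = [[[rng.gauss(0, 1) for _ in range(8)] for _ in range(5)] for _ in range(4)]
view_a = [mean_pool(token_cutoff(sentence, rng)) for sentence in batch]
view_b = [mean_pool(dropout(sentence, rng)) for sentence in batch]
loss = nt_xent_loss(view_a, view_b)
```

Because this sketch mean-pools without an encoder, `token_shuffle` would not change the pooled vector; it is included only to show the shape of the operation. In a real model the augmented embeddings would pass through the encoder before pooling, and the loss would be minimized by gradient descent.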

Numerical Results

Experiments on STS datasets show that ConSERT achieves an 8% relative improvement over previous state-of-the-art methods such as BERT-flow, and is even comparable to supervised methods such as SBERT-NLI. ConSERT also remains competitive when only limited data is available, which indicates robustness under data scarcity.
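For readers unfamiliar with how such numbers are produced, the sketch below shows one common way to score an STS benchmark: compute the cosine similarity of each sentence pair's embeddings and take the Spearman rank correlation with the human ratings. The summary above does not state the metric, so treat Spearman correlation as an assumption; the helper names are hypothetical, and `relative_improvement` only spells out the arithmetic behind a "relative improvement" figure.

```python
import math


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


def sts_score(embedding_pairs, gold_scores):
    """Correlate model similarities with human similarity ratings."""
    predicted = [cosine(u, v) for u, v in embedding_pairs]
    return spearman(predicted, gold_scores)


def relative_improvement(new_score, old_score):
    """Fractional gain of new_score over old_score, e.g. 0.08 for 8%."""
    return (new_score - old_score) / old_score
```

A relative improvement differs from an absolute one: it divides the gain by the baseline score, so the same absolute gain looks larger against a weaker baseline.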

Implications and Future Directions

ConSERT has both practical and theoretical implications. Practically, it offers a scalable way to improve pre-trained models with minimal data requirements, which makes it efficient to apply in real-world settings. Theoretically, it sheds light on the representation collapse problem and positions contrastive learning as a viable remedy. Future work could apply ConSERT to other pre-trained models or extend the contrastive framework to broader NLP applications.

Conclusion

ConSERT represents a significant step in unsupervised sentence representation learning: it uses contrastive learning to bridge the gap between pre-trained models and the demands of specific downstream tasks. Its results encourage further research into self-supervised methods for NLP, particularly in heterogeneous data environments where labeled data is scarce or costly to obtain.