Investigating the Reordering Capability in CTC-based Non-Autoregressive End-to-End Speech Translation

The paper addresses a central question in end-to-end speech translation (ST): whether non-autoregressive (NAR) translation can be performed with models trained using Connectionist Temporal Classification (CTC). The authors build a CTC-based NAR model that avoids the decoding latency of autoregressive (AR) decoders while keeping translation quality competitive. The study focuses on reordering. CTC assumes a monotonic alignment between input and output, yet translating between languages with different syntactic structures often requires changing the word order.

Methodology Overview

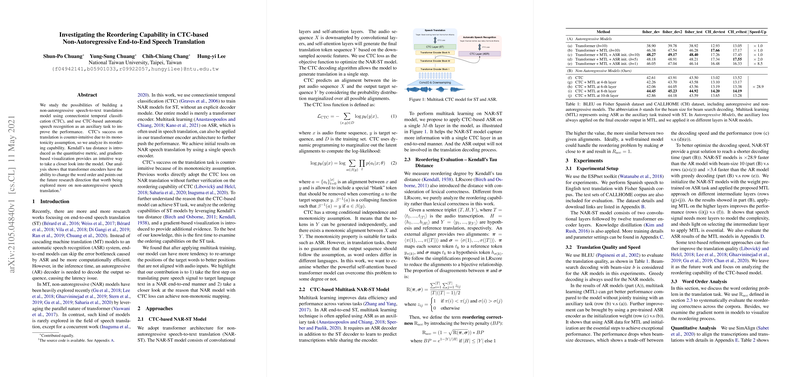

The paper uses a Transformer-based architecture for NAR-ST in which a single Transformer encoder handles both automatic speech recognition (ASR) and ST. Because the model is trained with the CTC loss, it needs no separate autoregressive decoder. Output tokens are predicted for all input frames in parallel, which makes inference faster and computationally cheaper. In addition, multitask learning (MTL) with ASR as an auxiliary task helps the encoder learn the mapping from speech to text.
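The parallel, decoder-free inference comes from how CTC outputs are read off. Below is a minimal sketch of standard greedy (best-path) CTC decoding in plain Python. It assumes the encoder has already produced per-frame scores over the target vocabulary and that index 0 is the blank token. The names `ctc_greedy_decode` and `BLANK` are hypothetical and not taken from the paper, and the paper's own decoding procedure may differ in detail.

```python
from itertools import groupby
from typing import List, Sequence

BLANK = 0  # assumption: vocabulary index 0 is the CTC blank token


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]], blank: int = BLANK) -> List[int]:
    """Best-path CTC decoding: per-frame argmax, collapse repeats, drop blanks."""
    # 1. Each frame is decided independently (no left-to-right dependency),
    #    which is what allows fully parallel, non-autoregressive inference.
    best_path = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    # 2. Merge runs of identical consecutive labels (groupby yields one key per run).
    collapsed = [token for token, _ in groupby(best_path)]
    # 3. Remove blanks; a blank between two equal labels keeps them as separate tokens.
    return [token for token in collapsed if token != blank]


# Usage: 6 frames over a toy vocabulary {0: blank, 1, 2, 3}.
frames = [
    [0.1, 0.7, 0.1, 0.1],    # argmax 1
    [0.2, 0.6, 0.1, 0.1],    # argmax 1 (repeat, merged)
    [0.8, 0.1, 0.05, 0.05],  # argmax 0 (blank)
    [0.1, 0.5, 0.3, 0.1],    # argmax 1 (new token after the blank)
    [0.1, 0.1, 0.7, 0.1],    # argmax 2
    [0.2, 0.1, 0.6, 0.1],    # argmax 2 (repeat, merged)
]
print(ctc_greedy_decode(frames))  # [1, 1, 2]
```

Because decoded tokens come out in the order of the frames that emitted them, any word reordering has to happen inside the encoder representations before this step. This is why the monotonicity of CTC raises the reordering question the paper studies.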

Reordering capability is quantified with Kendall's tau distance, which gives an empirical measure of how well CTC-based models accommodate the word-order changes that translation requires. The researchers also introduce gradient-based visualization techniques that show how reordering takes place across the model's layers.
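A small sketch of the standard normalized Kendall's tau distance makes the metric concrete. It assumes a one-to-one word alignment given as a permutation, where entry i is the target position aligned to source word i. Obtaining that alignment (for example, from an external word aligner) is not shown, and the function name `kendall_tau_distance` is hypothetical. The paper's exact normalization or handling of many-to-one alignments may differ.

```python
from itertools import combinations
from typing import Sequence


def kendall_tau_distance(permutation: Sequence[int]) -> float:
    """Fraction of word pairs whose relative order is inverted.

    0.0 means a fully monotonic alignment; 1.0 means a fully reversed one.
    Assumes `permutation[i]` is the target position aligned to source word i.
    """
    n = len(permutation)
    if n < 2:
        return 0.0  # with fewer than two words nothing can be reordered
    # combinations() preserves input order, so each (a, b) has a before b in the source.
    discordant = sum(1 for a, b in combinations(permutation, 2) if a > b)
    return discordant / (n * (n - 1) / 2)


# Usage: Spanish "la casa blanca" -> English "the white house".
# la -> 0, casa -> 2, blanca -> 1: one inverted pair out of three.
print(kendall_tau_distance([0, 2, 1]))
```

Higher values indicate sentence pairs that need more reordering, which a monotonic CTC output must somehow accommodate.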

Experimental Validation

The experiments are conducted on the Fisher Spanish corpus (Spanish-to-English), with additional evaluation on the CALLHOME dataset. The models are compared against both autoregressive and non-autoregressive variants, using BLEU as the primary metric for translation quality and decoding speed as the efficiency metric.

The findings show that the CTC-based NAR models decode substantially faster (approximately 28.9 times faster than state-of-the-art AR models with a beam size of 10), at some cost in translation quality. Applying MTL at higher encoder layers, however, yields a notable improvement in BLEU, suggesting that the model becomes better at handling non-monotonic word-order mappings. AR models generally still achieve higher scores, reflecting more precise reordering. Even so, NAR models trained with MTL show promising reordering capability, which makes them attractive for tasks where AR decoding latency is unacceptable.
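To make "MTL at a given encoder layer" concrete, one common way to write such an objective is shown below. This is an assumed, generic formulation, not necessarily the paper's exact loss. Here h^(k) is the output of encoder layer k where the auxiliary ASR CTC loss is attached, h^(N) is the top layer of an N-layer encoder, z is the source-language transcript, y is the target translation, and lambda is a hypothetical interpolation weight.

```latex
\mathcal{L} \;=\; (1-\lambda)\,\mathcal{L}^{\mathrm{ST}}_{\mathrm{CTC}}\!\left(\mathbf{h}^{(N)}, \mathbf{y}\right)
\;+\; \lambda\,\mathcal{L}^{\mathrm{ASR}}_{\mathrm{CTC}}\!\left(\mathbf{h}^{(k)}, \mathbf{z}\right),
\qquad 1 \le k \le N,\quad 0 \le \lambda \le 1
```

Under this reading, "applying MTL at higher encoder layers" corresponds to choosing a larger k.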

Theoretical and Practical Implications

The research deepens the understanding of how well CTC-based models can handle non-monotonic reordering in translation, traditionally a strength of autoregressive approaches. While the paper confirms that AR models still perform better on inputs with high reordering difficulty, NAR models are a viable option for applications that prioritize speed and can accept some loss in accuracy.

Future work could integrate more sophisticated text refinement techniques and extend the reordering evaluation to other language pairs. Such efforts could further narrow the performance gap between NAR and AR models, particularly for languages with very different syntactic structures. Extending the CTC model with a learned reordering bias that exploits bilingual lexical reordering patterns may also help close the remaining accuracy gap. Overall, this paper lays a promising foundation for future advances in ST based on non-autoregressive methods.