Evaluation Strategies for Low-Resource Speech-to-Text Translation Leveraging Pre-Trained ASR Models

This paper investigates methods for improving speech-to-text translation when training data is scarce. Specifically, it examines how pre-trained automatic speech recognition (ASR) models can improve translation for the English-Portuguese and Tamasheq-French language pairs. Within an encoder-decoder framework, the authors combine several techniques, including initialization from multilingual ASR models and connectionist temporal classification (CTC) as an auxiliary training objective that helps reorder internal representations.

Experimental Setup and Methodology

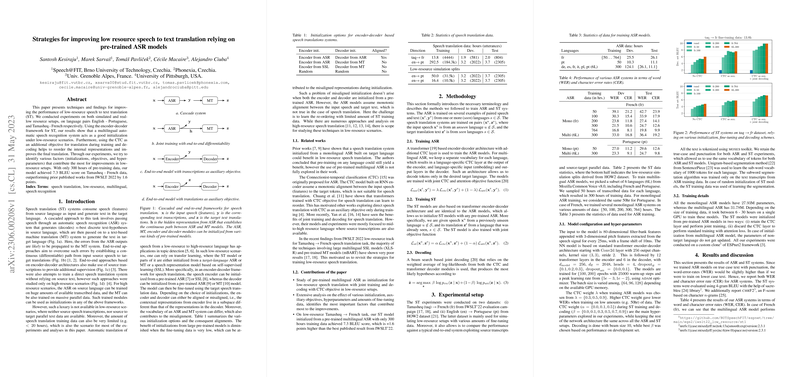

The authors ran experiments in both simulated and real low-resource settings. The main contribution is the use of multilingual ASR systems to initialize the speech translation model when data is scarce. CTC is additionally used as an auxiliary objective during both training and decoding. Because CTC assumes a monotonic alignment between input frames and output tokens, whereas speech and its translation generally differ in word order, this objective pushes the model to reorder its internal representations toward the target-language order.
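To make the auxiliary objective concrete, the following is a minimal sketch of a CTC loss for a single utterance (the standard forward algorithm) combined with an attention-decoder loss by linear interpolation. It is an illustration under stated assumptions, not the authors' implementation: the function names `ctc_neg_log_likelihood` and `joint_loss`, the parameter `ctc_weight`, and its default of 0.3 are hypothetical and are not taken from the paper.

```python
import math


def log_add(a, b):
    """Numerically stable log(exp(a) + exp(b))."""
    if a == -math.inf:
        return b
    if b == -math.inf:
        return a
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def ctc_neg_log_likelihood(log_probs, target, blank=0):
    """CTC negative log-likelihood of `target` for one utterance.

    log_probs: list of T frames, each a list of per-symbol log-probabilities.
    target:    list of label ids without blanks.
    """
    # Extended label sequence with blanks interleaved: [b, y1, b, y2, ..., b]
    ext = [blank]
    for y in target:
        ext += [y, blank]
    S = len(ext)

    # alpha[s] = log-probability of all partial alignments ending in ext[s]
    alpha = [-math.inf] * S
    alpha[0] = log_probs[0][ext[0]]
    if S > 1:
        alpha[1] = log_probs[0][ext[1]]

    for t in range(1, len(log_probs)):
        new = [-math.inf] * S
        for s in range(S):
            total = alpha[s]                       # stay on the same symbol
            if s >= 1:
                total = log_add(total, alpha[s - 1])   # advance by one
            # Skipping a blank is allowed only between two different labels
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total = log_add(total, alpha[s - 2])
            if total != -math.inf:
                new[s] = total + log_probs[t][ext[s]]
        alpha = new

    # Valid alignments end on the last label or on the trailing blank
    log_likelihood = alpha[S - 1]
    if S > 1:
        log_likelihood = log_add(log_likelihood, alpha[S - 2])
    return -log_likelihood


def joint_loss(attention_nll, ctc_nll, ctc_weight=0.3):
    """Interpolate decoder and CTC losses (ctc_weight is an assumed value)."""
    return (1.0 - ctc_weight) * attention_nll + ctc_weight * ctc_nll


# Two frames, vocabulary {0: blank, 1: "a"}, uniform probabilities.
frames = [[math.log(0.5), math.log(0.5)]] * 2
ctc_nll = ctc_neg_log_likelihood(frames, [1])   # -log(0.75), about 0.2877
loss = joint_loss(attention_nll=2.0, ctc_nll=ctc_nll)
```

In the toy example, three of the four possible frame labelings ("a a", "a blank", "blank a") collapse to the target, so the likelihood is 0.75. The same kind of interpolation can be applied to hypothesis scores at decoding time, which is one common way to use CTC in both stages.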

Key Results

With only 300 hours of pre-training data, the model achieved a BLEU score of 7.3 on the Tamasheq-French dataset, surpassing previously published results from IWSLT 2022 by 1.6 points. This result supports the use of multilingual ASR models as initializations for speech translation. In low-resource setups, multilingual models trained on combined data outperformed monolingual models trained on larger individual datasets. Adding CTC also improved translation quality markedly, which suggests it is a useful auxiliary objective for low-resource translation tasks.
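For readers unfamiliar with the metric, the sketch below shows how a corpus-level BLEU score on the 0-100 scale is computed from clipped n-gram precisions and a brevity penalty. It is a simplified illustration: it assumes whitespace tokenization, a single reference per sentence, and no smoothing, so it is not necessarily the scoring setup used in the paper, and its numbers should not be compared directly with published results. The function names `ngrams` and `corpus_bleu` are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Unsmoothed corpus-level BLEU (0-100), one reference per hypothesis."""
    matches = [0] * max_n   # clipped n-gram matches per order
    totals = [0] * max_n    # hypothesis n-gram counts per order
    hyp_len = ref_len = 0

    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each hypothesis n-gram count by its count in the reference
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())

    # Without smoothing, any order with zero matches gives a score of zero
    if hyp_len == 0 or min(matches) == 0:
        return 0.0

    # Geometric mean of the n-gram precisions
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty for hypotheses shorter than the references
    bp = 1.0 if hyp_len >= ref_len else math.exp(1.0 - ref_len / hyp_len)
    return 100.0 * bp * math.exp(log_precision)


perfect = corpus_bleu(["the cat sat on the mat"], ["the cat sat on the mat"])
truncated = corpus_bleu(["the cat sat on"], ["the cat sat on the mat"])
```

Here `perfect` is 100.0, while `truncated` has perfect n-gram precision but is penalized for brevity, giving 100 * exp(-0.5), about 60.65. The 1.6-point improvement reported above is a difference on this same 0-100 scale.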

Implications and Future Directions

The findings have practical implications for real-world settings in which translation systems must be built with limited data and resources. The paper highlights the potential of multilingual models, especially when the target language has only a moderate amount of transcribed data. The research suggests that similar approaches could be extended to multilingual speech translation tasks, potentially improving translation quality across diverse sets of languages.

Future research could build on this work by exploring multilingual training of the speech translation models themselves, which could provide additional supervision and improve overall translation quality. Quantifying and addressing misaligned representations between the speech and text modalities also remains an important line of inquiry, potentially yielding more integrated and efficient translation systems. Additionally, investigating the impact of different pre-training data sizes and configurations might clarify how best to optimize model performance in low-resource environments.

Conclusion

This research investigates low-resource speech-to-text translation by initializing from multilingual ASR systems and using CTC as an auxiliary objective. Both techniques improve translation quality in data-constrained settings, and the work opens several directions for future research in speech processing and machine translation.