An Analysis of ZeRO-Offload: Advancements in Billion-Scale Model Training

The paper "ZeRO-Offload: Democratizing Billion-Scale Model Training" introduces a technique that makes it feasible to train models with over 13 billion parameters on a single GPU. This is a substantial increase over what frameworks such as PyTorch can train on the same hardware, where GPU memory limits the model size. ZeRO-Offload moves a large share of the training data and computation to the CPU, so that the memory and compute of both the GPU and the CPU are put to use, and it does so without requiring data scientists to change their models.
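To make the "no model changes" point concrete, here is a minimal sketch of how CPU offloading is typically switched on through configuration rather than model code. It assumes the DeepSpeed library, which is where ZeRO-Offload is distributed. The key names follow DeepSpeed's JSON configuration schema as of recent releases and may differ between versions, and the batch size is an illustrative value that does not come from the paper.

```python
import json

# Assumed DeepSpeed-style configuration. Key names may vary across
# DeepSpeed releases; check the documentation for the installed version.
ds_config = {
    "train_batch_size": 32,        # illustrative value, not from the paper
    "fp16": {"enabled": True},     # mixed-precision training
    "zero_optimization": {
        "stage": 2,                # partition optimizer states and gradients
        "offload_optimizer": {
            "device": "cpu",       # hold optimizer states and run the update on the CPU
            "pin_memory": True,    # page-locked host memory for faster transfers
        },
    },
}

# Serialize to the JSON form that would be saved as a config file.
config_json = json.dumps(ds_config, indent=2)
print(config_json)
```

The model definition itself is untouched. Only the training configuration changes, which is the property the summary above describes.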

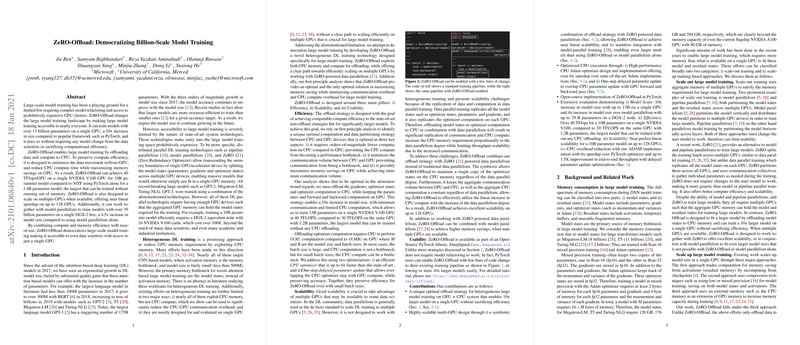

The authors report that ZeRO-Offload trains a 10-billion-parameter model at 40 TFlops on a single NVIDIA V100 GPU. By comparison, PyTorch alone reaches 30 TFlops on a 1.4-billion-parameter model, so ZeRO-Offload handles a model roughly seven times larger at higher throughput. The system also scales across multiple GPUs, with near-linear speedup on up to 128 GPUs, and it can be combined with existing model parallelism methods to train models exceeding 70 billion parameters on a single DGX-2 box.
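A back-of-the-envelope calculation helps explain why the 1.4-billion and 10-billion parameter figures differ so much. The sketch below assumes the commonly cited accounting for mixed-precision Adam training: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for fp32 optimizer states. It further assumes that with offloading only the fp16 weights stay resident on the GPU, and that the V100 has 32 GB of memory. None of these constants appear in the summary above, and activations and temporary buffers are ignored.

```python
GB = 1e9  # decimal gigabytes

# Assumed bytes per parameter under mixed-precision Adam training.
FP16_PARAMS = 2
FP16_GRADS = 2
FP32_OPTIMIZER_STATES = 12  # fp32 master weights + momentum + variance, 4 bytes each

ASSUMED_GPU_MEMORY_GB = 32  # assumption: 32 GB V100


def gpu_model_state_gb(num_params: float, offload: bool) -> float:
    """Model-state memory kept on the GPU, in GB (activations excluded)."""
    if offload:
        # Assumption: only the fp16 weights remain on the GPU.
        bytes_per_param = FP16_PARAMS
    else:
        bytes_per_param = FP16_PARAMS + FP16_GRADS + FP32_OPTIMIZER_STATES
    return num_params * bytes_per_param / GB


baseline_1_4b = gpu_model_state_gb(1.4e9, offload=False)
baseline_10b = gpu_model_state_gb(10e9, offload=False)
offload_10b = gpu_model_state_gb(10e9, offload=True)
offload_13b = gpu_model_state_gb(13e9, offload=True)

for label, value in [
    ("1.4B, no offload", baseline_1_4b),
    ("10B, no offload", baseline_10b),
    ("10B, offload", offload_10b),
    ("13B, offload", offload_13b),
]:
    fits = "fits" if value <= ASSUMED_GPU_MEMORY_GB else "does not fit"
    print(f"{label}: {value:.1f} GB ({fits} in {ASSUMED_GPU_MEMORY_GB} GB)")
```

Under these assumptions, the model states of a 1.4-billion-parameter model already occupy most of the GPU without offloading, while the GPU-resident share of a 10 to 13 billion parameter model with offloading stays below the assumed capacity.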

By substantially increasing the size of the models that can be trained on relatively modest GPU hardware, ZeRO-Offload makes an important contribution to the AI and machine learning community. It balances computational and memory efficiency by minimizing data transfer between the CPU and the GPU and by optimizing the computation that runs on the CPU. This lowers the barrier to entry: data scientists without access to large GPU clusters can still carry out large-scale model training.
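The cost of moving data between the CPU and the GPU can be estimated with a short calculation. The sketch below assumes that each training step sends the fp16 gradients to the CPU and brings the updated fp16 weights back, which amounts to 4 bytes per parameter. The bandwidth figure is a hypothetical round number for a PCIe link, not a measured value, and the function names are illustrative.

```python
def transfer_bytes_per_step(num_params: float) -> float:
    """CPU-GPU traffic per training step under the stated assumptions."""
    grads_to_cpu = 2 * num_params     # fp16 gradients, GPU -> CPU
    weights_to_gpu = 2 * num_params   # updated fp16 weights, CPU -> GPU
    return grads_to_cpu + weights_to_gpu


def transfer_seconds(num_params: float, bandwidth_bytes_per_s: float) -> float:
    """Time to move one step's traffic if nothing is overlapped with compute."""
    return transfer_bytes_per_step(num_params) / bandwidth_bytes_per_s


ASSUMED_BANDWIDTH = 16e9  # hypothetical 16 GB/s link, for illustration only

volume_gb = transfer_bytes_per_step(10e9) / 1e9
seconds = transfer_seconds(10e9, ASSUMED_BANDWIDTH)
print(f"10B parameters: {volume_gb:.0f} GB per step, "
      f"about {seconds:.1f} s at the assumed bandwidth")
```

This is an upper bound for the assumed link, since transfers can in principle be overlapped with GPU computation. It also shows why keeping the per-step volume small matters: the traffic grows linearly with model size.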

More broadly, ZeRO-Offload could change how large-scale models are developed and deployed, because it democratizes access by making more effective use of existing hardware. The paper also has implications for computational resource management: it points towards a future in which model size is bounded less by the availability of high-cost infrastructure and more by how well the available resources are used.

Looking forward, tools like ZeRO-Offload suggest continued progress in optimizing memory and computational workloads, which may lead to even more efficient training paradigms. As models continue to grow, work of this kind is likely to encourage further research into heterogeneous computing systems, distributed training methods, and their role in next-generation AI infrastructure.