ZeRO++: Extremely Efficient Collective Communication for Giant Model Training

The paper "ZeRO++: Extremely Efficient Collective Communication for Giant Model Training" presents enhancements to the ZeRO optimizer that improve the training efficiency of large language models (LLMs) on GPU clusters. These enhancements target the communication bottlenecks that arise when training is scaled across large distributed systems, particularly clusters with limited inter-node bandwidth.

Core Contributions

The authors introduce ZeRO++, a set of techniques that reduce ZeRO’s communication volume and so improve its performance, particularly in bandwidth-constrained environments. The three main strategies are:

- Quantized Weight Communication for ZeRO (qwZ): Model weights are quantized from FP16 to INT8 before the forward-pass all-gather, which halves the communication volume of that collective. Block-based quantization, in which each block of weights gets its own scale, limits the precision loss so that training accuracy can be maintained.

- Hierarchical Partitioning for ZeRO (hpZ): A secondary copy of the FP16 weights is partitioned within each compute node, so the backward-pass all-gather runs over high-bandwidth intra-node links and needs no cross-node communication. This trades additional GPU memory for reduced communication.

- Quantized Gradient Communication for ZeRO (qgZ): A novel all-to-all quantized gradient averaging paradigm replaces the traditional reduce-scatter collective. Gradients are quantized to INT4 for communication and dequantized back to full precision before they are reduced, which considerably reduces inter-node communication volume while avoiding the accuracy loss that reducing directly in low precision would cause.
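
The block-based quantization behind qwZ and the dequantize-before-reduce step in qgZ can be illustrated with a small NumPy sketch. This is not the ZeRO++ implementation: the function names, the symmetric max-abs scaling, the block size of 256, and the storage of INT4 values in unpacked `int8` containers are all assumptions chosen for readability, and the "communication" here is just a Python list of per-rank gradients.

```python
import numpy as np

def quantize_blockwise(x, bits=8, block_size=256):
    """Symmetric quantization with one scale per block (illustrative only)."""
    qmax = 2 ** (bits - 1) - 1                      # 127 for INT8, 7 for INT4
    flat = np.asarray(x, dtype=np.float32).ravel()
    pad = (-flat.size) % block_size                 # pad so the data splits into whole blocks
    blocks = np.pad(flat, (0, pad)).reshape(-1, block_size)
    max_abs = np.abs(blocks).max(axis=1, keepdims=True)
    # One scale per block; all-zero blocks get scale 1.0 to avoid dividing by zero.
    scales = np.where(max_abs > 0, max_abs / qmax, 1.0).astype(np.float32)
    q = np.clip(np.rint(blocks / scales), -qmax, qmax).astype(np.int8)
    return q, scales, flat.size

def dequantize_blockwise(q, scales, size):
    """Expand quantized blocks back to float32 and drop the padding."""
    return (q.astype(np.float32) * scales).ravel()[:size]

def average_after_dequantize(grads, bits=4, block_size=256):
    """Hypothetical stand-in for qgZ's reduction: each rank's gradient is
    quantized once for transport, restored to float32, then averaged."""
    restored = [
        dequantize_blockwise(*quantize_blockwise(g, bits, block_size))
        for g in grads
    ]
    return np.mean(restored, axis=0)

# Usage: quantize a weight shard to INT8 and check the payload size.
rng = np.random.default_rng(0)
w = rng.standard_normal(1024).astype(np.float32)
q, s, n = quantize_blockwise(w, bits=8, block_size=256)
w_hat = dequantize_blockwise(q, s, n)
print("INT8 payload bytes:", q.nbytes, "FP16 payload bytes:", w.astype(np.float16).nbytes)
print("max abs error:", float(np.max(np.abs(w - w_hat))))
```

Because each block carries its own scale, an outlier only coarsens the values in its own block rather than the whole tensor. The per-block scales add a small overhead to the payload, which this sketch does not count.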

Performance and Implications

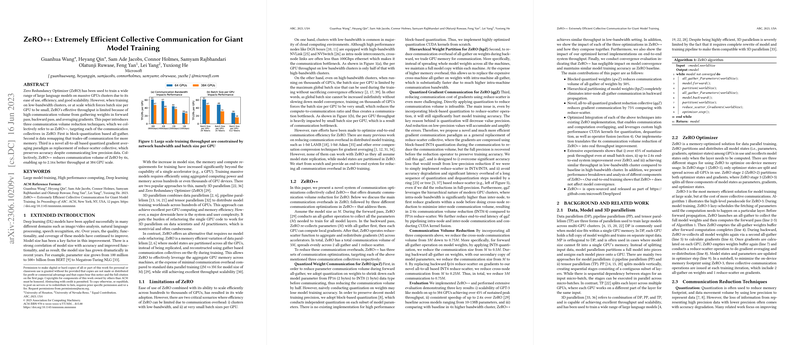

ZeRO++ achieves a 4x reduction in communication volume compared to the baseline ZeRO, which translates into up to 2.16x higher throughput at 384-GPU scale. The reduction matters most in low-bandwidth settings, which are typical of many cloud environments, where communication would otherwise limit throughput.
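
The 4x figure follows from the three techniques taken together, as the short calculation below sketches. It assumes that baseline ZeRO moves the full model size M across nodes in FP16 for each of its three collectives (forward all-gather, backward all-gather, gradient reduce-scatter), and it ignores the small overhead of quantization scales. The function and parameter names are hypothetical.

```python
def cross_node_volume(model_size, weight_bits=16, backward_cross_node=True, grad_bits=16):
    """Cross-node traffic per training step, in units of FP16 elements (illustrative)."""
    forward_allgather = model_size * weight_bits / 16      # qwZ: INT8 halves this term
    backward_allgather = model_size if backward_cross_node else 0.0  # hpZ: stays intra-node
    gradient_reduction = model_size * grad_bits / 16       # qgZ: INT4 quarters this term
    return forward_allgather + backward_allgather + gradient_reduction

baseline = cross_node_volume(1.0)
zeropp = cross_node_volume(1.0, weight_bits=8, backward_cross_node=False, grad_bits=4)
print(f"baseline: {baseline}M, ZeRO++: {zeropp}M, reduction: {baseline / zeropp}x")
```

Under these assumptions the baseline moves 3M and ZeRO++ moves 0.5M + 0 + 0.25M = 0.75M, which gives the 4x reduction.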

These improvements extend ZeRO’s applicability and could broaden access to efficient training of massive models by lowering the interconnect bandwidth that such training requires. This is particularly beneficial for organizations with limited computational infrastructure.

Future Directions

The techniques introduced in ZeRO++ could lay the groundwork for further innovations in distributed training strategies. Future research could explore finer granularity in quantization, adaptive communication strategies based on real-time bandwidth availability, and integration with other optimizations like gradient sparsification. Further, as hardware configurations continue to evolve, adaptation of these techniques could help maximize the utilization of emerging interconnect technologies.

Conclusion

ZeRO++ represents a significant advancement in optimizing communication for large-scale model training. By making distributed training more bandwidth-efficient, it addresses a critical scalability challenge and makes large-model training more accessible on clusters without high-end interconnects.