Insights into I-BERT: Integer-only BERT Quantization

The paper "I-BERT: Integer-only BERT Quantization" introduces a quantization scheme for Transformer-based models such as BERT and RoBERTa that carries out the entire inference computation with integer arithmetic. It targets the memory footprint, inference latency, and power consumption of large models, which make them hard to deploy efficiently at the edge and costly even in data centers. Given how pressing efficient deployment has become, the I-BERT approach is both timely and relevant.

Core Contributions

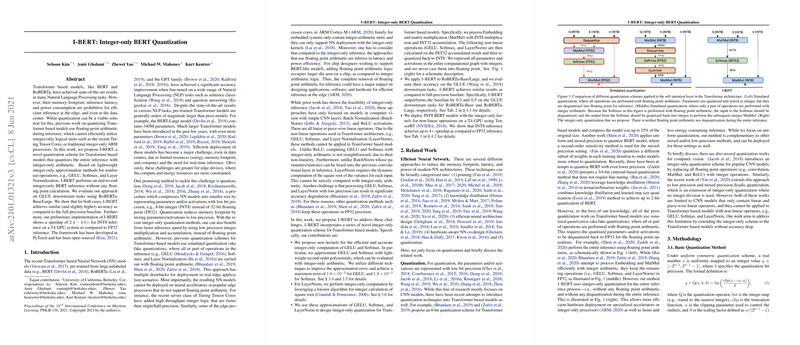

The authors propose an integer-only quantization scheme. This departs from previous Transformer quantization methods, which used simulated quantization and therefore still relied on floating-point arithmetic for all or part of inference, in particular for the non-linear operations. In I-BERT, every computation is performed with integer operations, which allows deployment on integer-only processors such as ARM Cortex-M and lets the model exploit the high-throughput integer logic of accelerators such as NVIDIA Turing Tensor Cores.
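The following minimal Python sketch illustrates what an integer-only path looks like for a single dot product. It assumes symmetric uniform INT8 quantization, integer accumulation (INT32 on real hardware), and a dyadic multiply-and-shift rescaling of the accumulator, a style of requantization common in integer-only schemes; I-BERT's exact requantization details may differ. All function names and the output scale `s_out` are hypothetical. Floats appear only when deriving scales and integer constants, which a static deployment would do ahead of time.

```python
def quantize_symmetric(values, num_bits=8):
    """Symmetric uniform quantization: x ~= scale * q, q in [-(2**(b-1) - 1), 2**(b-1) - 1]."""
    qmax = 2 ** (num_bits - 1) - 1
    alpha = max(abs(v) for v in values)            # clipping range [-alpha, alpha]
    scale = alpha / qmax if alpha > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int_dot(q_a, q_b):
    """Integer products with integer accumulation (INT32 on real hardware)."""
    return sum(a * b for a, b in zip(q_a, q_b))


def to_dyadic(m, c=31):
    """Approximate a real multiplier m by the dyadic number b / 2**c (computed offline)."""
    return round(m * 2 ** c), c


def requantize(acc, b, c):
    """acc * b / 2**c rounded to nearest (ties toward +inf) with an integer multiply, add, and shift."""
    return (acc * b + (1 << (c - 1))) >> c


if __name__ == "__main__":
    x, w = [0.5, -1.2, 0.8, 2.0], [0.3, 0.7, -0.4, 0.1]
    q_x, s_x = quantize_symmetric(x)
    q_w, s_w = quantize_symmetric(w)
    acc = int_dot(q_x, q_w)                        # real value ~= s_x * s_w * acc
    s_out = 0.01                                   # hypothetical output scale
    b, c = to_dyadic(s_x * s_w / s_out)
    q_out = requantize(acc, b, c)
    print(q_out, q_out * s_out)                    # q_out * s_out is close to -0.81
```

The only run-time operations on the data are integer multiplies, adds, and a right shift. A real kernel would also clip `q_out` to the INT8 range before passing it to the next layer.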

Key contributions of the I-BERT framework include:

- Polynomial Approximation: The paper describes lightweight integer-only approximations of the non-linear functions used in Transformers. GELU and Softmax (through its exponential) are approximated by second-order polynomials that can be evaluated with integer arithmetic while preserving accuracy. Layer Normalization, whose difficult step is the square root of the variance, instead uses an integer-only iterative square-root algorithm.

- Implementation and Deployment: The I-BERT framework is implemented in PyTorch and has been open-sourced, supporting transparency and reproducibility. The authors apply integer-only quantization to RoBERTa-Base and RoBERTa-Large and evaluate the results on the GLUE downstream tasks.

- Accuracy Retention: For both model sizes, I-BERT achieves accuracy similar to the full-precision baseline, slightly higher on some tasks and with only minimal degradation on others.

- Latency Improvements: A preliminary implementation of INT8 integer-only inference achieves a 2.4x to 4.0x speedup over FP32 inference on a T4 GPU system.
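The polynomial approximations can be sketched in plain Python. The sketch assumes the following forms: erf is approximated as sgn(x) * [a(min(|x|, -b) + b)^2 + 1] with a = -0.2888 and b = -1.769, and exp is computed by writing x = -z ln 2 + p and approximating exp(p) on (-ln 2, 0] with 0.3585(p + 1.353)^2 + 0.344. These coefficients are our reading of the paper and should be checked against it. The function names, the fixed scale of 0.01, and the 8-bit softmax output precision (`out_bits`) are hypothetical choices for illustration.

```python
import math


def sgn(v: int) -> int:
    """Sign of an integer: -1, 0, or 1."""
    return (v > 0) - (v < 0)


def i_poly(q: int, scale: float, a: float, b: float, c: float):
    """Integer-only a*(x + b)**2 + c for x = scale * q.

    q_b and q_c depend only on the static scale, so they can be precomputed;
    at run time only integer additions and multiplications are executed.
    Returns (q_out, s_out) with result ~= s_out * q_out.
    """
    q_b = math.floor(b / scale)
    q_c = math.floor(c / (a * scale ** 2))
    return (q + q_b) ** 2 + q_c, a * scale ** 2


def i_erf(q: int, scale: float):
    """erf(x) ~= sgn(x) * [a * (min(|x|, -b) + b)**2 + 1] (assumed coefficients)."""
    a, b, c = -0.2888, -1.769, 1.0
    q_clip = min(abs(q), math.floor(-b / scale))
    q_l, s_l = i_poly(q_clip, scale, a, b, c)
    return sgn(q) * q_l, s_l


def i_gelu(q: int, scale: float):
    """GELU(x) = x * (1 + erf(x / sqrt(2))) / 2, evaluated on the integer q."""
    q_erf, s_erf = i_erf(q, scale / math.sqrt(2))
    q_one = math.floor(1.0 / s_erf)               # integer representation of 1.0
    return q * (q_erf + q_one), scale * s_erf / 2


def i_exp(q: int, scale: float):
    """exp(x) for x = scale * q <= 0, using exp(x) = exp(p) * 2**(-z)."""
    a, b, c = 0.3585, 1.353, 0.344                # assumed coefficients on (-ln2, 0]
    q_ln2 = math.floor(math.log(2) / scale)
    z = (-q) // q_ln2                             # number of whole ln2 steps
    q_p = q + z * q_ln2                           # remainder p in (-ln2, 0]
    q_l, s_l = i_poly(q_p, scale, a, b, c)
    return q_l >> z, s_l                          # division by 2**z as a shift


def i_softmax(q_row, scale: float, out_bits: int = 8):
    """Softmax over integers; output scale is 2**-out_bits (hypothetical precision)."""
    q_max = max(q_row)
    exps = [i_exp(q - q_max, scale)[0] for q in q_row]   # inputs are all <= 0
    total = sum(exps)                                     # the exp scale cancels here
    return [(e << out_bits) // total for e in exps], 2.0 ** -out_bits


if __name__ == "__main__":
    q, s = i_gelu(100, 0.01)                      # x = 1.0
    print(q * s)                                  # close to GELU(1.0)
    probs, s_p = i_softmax([100, 200, 300], 0.01) # x = [1.0, 2.0, 3.0]
    print([p * s_p for p in probs])
```

Because each polynomial is written as a(x + b)^2 + c, evaluating it needs only one integer addition, one squaring, and one more addition at run time; the range reduction keeps the exp approximation on a short interval where a second-order polynomial is accurate.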
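For Layer Normalization, the main non-linear step is the square root of the variance, which I-BERT computes with an integer-only iterative algorithm. A minimal sketch, assuming the classic integer Newton iteration for floor(sqrt(n)) and omitting the learnable scale and shift (gamma and beta) as well as I-BERT's exact bit widths; `i_sqrt`, `i_layernorm`, and the fixed-point output precision `frac_bits` are hypothetical names chosen for illustration.

```python
def i_sqrt(n: int) -> int:
    """floor(sqrt(n)) with integer-only Newton iterations."""
    if n < 0:
        raise ValueError("i_sqrt expects a non-negative integer")
    if n == 0:
        return 0
    x = 1 << ((n.bit_length() + 1) // 2)   # 2**ceil(bits/2) >= sqrt(n)
    while True:
        y = (x + n // x) // 2               # floored Newton step for x**2 = n
        if y >= x:                          # iterates decrease until convergence
            return x
        x = y


def i_layernorm(q_row, frac_bits: int = 8):
    """(x - mean) / std on integers; output scale is 2**-frac_bits (hypothetical).

    The input scale cancels in (x - mean) / std, so it is not needed.
    Learnable gamma/beta are omitted in this sketch.
    """
    n = len(q_row)
    mean = sum(q_row) // n
    centered = [q - mean for q in q_row]
    var = sum(d * d for d in centered) // n
    std = max(i_sqrt(var), 1)               # guard against division by zero
    return [(d << frac_bits) // std for d in centered], 2.0 ** -frac_bits


if __name__ == "__main__":
    out, s = i_layernorm([100, -50, 30, 200, -120, 10])
    print([o * s for o in out])             # approximately zero mean, unit variance
```

Starting the iteration from a power of two at or above sqrt(n) makes the floored Newton steps decrease monotonically, so the loop stops as soon as a step fails to decrease and only a handful of integer divisions are needed.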

Implications and Future Directions

The implications of this research are both practical and theoretical. Practically, integer-only quantization makes it feasible to deploy Transformer models on edge devices where memory, compute, and energy are tightly constrained. This widens the range of real-world settings, often resource-limited, in which neural networks can be deployed.

Theoretically, the paper challenges the assumption that floating-point precision is necessary to maintain model performance across a range of NLP tasks. The success of I-BERT's integer-only approximations in preserving the behavior of non-linear operations could inform further research into deployment strategies for resource-constrained settings.

This work opens several avenues for further exploration. Future research could investigate training procedures that incorporate integer-only arithmetic from the start, improving the end-to-end efficiency of model deployment. Applying integer-only techniques beyond NLP, to other domains of machine learning, is another natural direction.

In conclusion, the paper presents a significant advance in the quantization of Transformer models. It responds to the industry's demand for efficient AI solutions and lets existing integer hardware run Transformer inference without sacrificing accuracy. This balance between efficiency and accuracy is essential for deploying large models more widely.