An Evaluation of "ZeroQuant: Efficient and Affordable Post-Training Quantization for Large-Scale Transformers"

The paper "ZeroQuant: Efficient and Affordable Post-Training Quantization for Large-Scale Transformers" proposes a method for compressing large-scale Transformer models, with a focus on BERT and GPT-3-style models. Motivated by the memory and compute costs that make such models expensive to deploy, the authors present ZeroQuant, an end-to-end post-training quantization and inference pipeline.

Core Contributions

ZeroQuant consists of three main components:

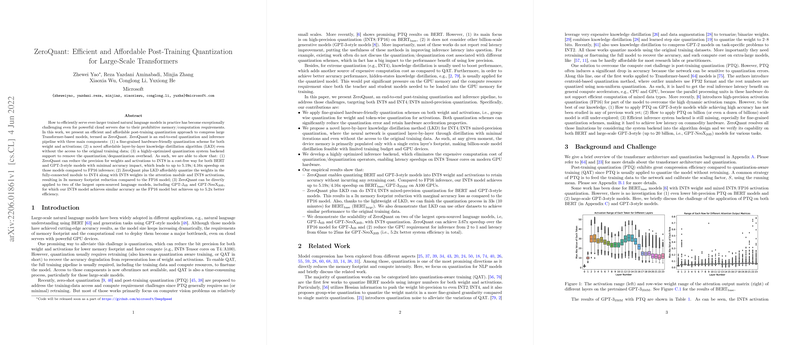

- Hardware-Friendly Quantization Schemes: ZeroQuant uses fine-grained schemes, namely group-wise quantization for weights and dynamic token-wise quantization for activations. Compared with static, coarse-grained quantization, where a single precomputed range covers an entire tensor, these schemes reduce quantization error while remaining compatible with INT8 hardware such as the Tensor Cores on NVIDIA T4 and A100 GPUs.

- Layer-by-Layer Knowledge Distillation (LKD): ZeroQuant introduces LKD, a distillation algorithm that works even without access to the original training data. It quantizes the network one layer at a time and trains each quantized layer to reproduce the output of its original counterpart. Because only one layer is distilled at a time, LKD avoids holding a full teacher and a full student model in training memory, which keeps distillation affordable for billion-parameter models.

- Optimized Inference Backend: The authors build a system backend that removes the overhead of the extra quantization and dequantization operations, largely by fusing them into adjacent kernels. This is what turns the reduced precision into measurable latency reductions in practice.
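The two quantization schemes in the first component can be sketched in plain Python. This is a minimal illustration, not ZeroQuant's implementation: it assumes symmetric INT8 quantization, weight groups formed from contiguous blocks of output rows, and hypothetical helper names (`quantize_symmetric`, `quantize_weights_groupwise`, `quantize_activations_tokenwise`, `quantized_linear`). Because each weight group spans whole output rows and each activation scale covers a whole token, the integer dot product for every output can be rescaled with a single multiplication at the end, which is the kind of step a fused kernel can absorb.

```python
from typing import List, Tuple

Matrix = List[List[float]]
IntMatrix = List[List[int]]


def quantize_symmetric(values: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Quantize a slice of values with one symmetric scale (hypothetical helper)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # round() rounds half to even; results are clamped to [-qmax, qmax]
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def quantize_weights_groupwise(w: Matrix, num_groups: int, bits: int = 8) -> Tuple[IntMatrix, List[float]]:
    """Group-wise weight quantization (assumed layout): each contiguous block of
    output rows gets its own scale. Returns integer weights and one scale per row."""
    rows, cols = len(w), len(w[0])
    assert rows % num_groups == 0, "assumes rows divide evenly into groups"
    rows_per_group = rows // num_groups
    q_rows: IntMatrix = []
    row_scales: List[float] = []
    for g in range(num_groups):
        block = w[g * rows_per_group:(g + 1) * rows_per_group]
        q_flat, scale = quantize_symmetric([v for row in block for v in row], bits)
        for r in range(rows_per_group):
            q_rows.append(q_flat[r * cols:(r + 1) * cols])
            row_scales.append(scale)  # rows in the same group share a scale
    return q_rows, row_scales


def quantize_activations_tokenwise(x: Matrix, bits: int = 8) -> Tuple[IntMatrix, List[float]]:
    """Token-wise (dynamic) activation quantization: one scale per token row,
    computed at inference time from that token's own values."""
    q_rows: IntMatrix = []
    scales: List[float] = []
    for token in x:
        q, s = quantize_symmetric(token, bits)
        q_rows.append(q)
        scales.append(s)
    return q_rows, scales


def quantized_linear(x: Matrix, w: Matrix, num_groups: int) -> Matrix:
    """Approximate y = x @ w^T with integer dot products and one rescale per output."""
    qx, sx = quantize_activations_tokenwise(x)
    qw, sw = quantize_weights_groupwise(w, num_groups)
    y: Matrix = []
    for t, xrow in enumerate(qx):
        out_row = []
        for o, wrow in enumerate(qw):
            acc = sum(a * b for a, b in zip(xrow, wrow))  # integer accumulation
            out_row.append(acc * sx[t] * sw[o])  # dequantize once, after the dot product
        y.append(out_row)
    return y


# Usage: 2 tokens with 3 features, 4 output channels split into 2 weight groups
x = [[0.5, -1.0, 2.0], [0.01, 0.02, -0.03]]
w = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5], [10.0, -10.0, 5.0], [-8.0, 2.0, 3.0]]
y = quantized_linear(x, w, num_groups=2)
exact = [[sum(a * b for a, b in zip(xr, wr)) for wr in w] for xr in x]
```

In the example, the second token's values are far smaller than the first token's, yet per-token scaling still gives them the full INT8 range; a single scale shared across both tokens would round most of them to a handful of integer levels.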
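The layer-wise distillation step can be summarized as a per-layer objective. As we read the paper's description, layer $k$ is trained so that its quantized copy matches the original layer on the same input; here $L_k$ denotes the $k$-th layer of the original model, $\hat{L}_k$ its quantized counterpart, and $X$ a batch of inputs that need not come from the original training set. The notation is ours, and the paper's exact formulation may differ in detail:

$$
\mathcal{L}_{\mathrm{LKD},k} = \mathrm{MSE}\left( L_k\!\left(h_{k-1}\right),\; \hat{L}_k\!\left(h_{k-1}\right) \right), \qquad h_{k-1} = L_{k-1} \circ \cdots \circ L_1(X)
$$

Only the parameters of $\hat{L}_k$ are updated at step $k$, so gradients and optimizer state cover a single layer rather than the whole model, which is where the memory savings come from.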

Empirical Results

The experiments quantify ZeroQuant's gains:

- With INT8 weights and activations, ZeroQuant reaches up to 5.19x (BERT) and 4.16x (GPT-3-style) speedups over FP16 inference with minimal accuracy impact. Lower precision reduces both memory use and compute time, which matters most for deployment under tight resource budgets.

- With INT4/INT8 mixed precision (INT4 weights in the fully connected modules, INT8 weights in the attention modules, and INT8 activations), ZeroQuant combined with LKD reduces the memory footprint by 3x relative to the FP16 model.

- The backend optimizations offset the latency overhead that the fine-grained quantization schemes would otherwise introduce, and the reported speedups hold consistently across the evaluated settings.
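The 3x memory figure for the mixed-precision setting can be checked with simple arithmetic on weight storage. The sketch below assumes a standard Transformer block with four attention projections of size h x h and a two-layer fully connected module with a hypothetical 4x expansion; it ignores biases, LayerNorm parameters, embeddings, and the storage for quantization scales, so it is an approximation rather than the paper's own accounting.

```python
def weight_bytes_per_layer(hidden: int, ffn_mult: int = 4,
                           attn_bits: int = 16, ffn_bits: int = 16) -> float:
    """Approximate weight storage of one Transformer block, in bytes (hypothetical helper)."""
    attn_params = 4 * hidden * hidden            # Q, K, V and output projections
    ffn_params = 2 * ffn_mult * hidden * hidden  # hidden -> ffn_mult*hidden -> hidden
    return attn_params * attn_bits / 8 + ffn_params * ffn_bits / 8


hidden = 1024  # hypothetical hidden size; the ratio below does not depend on it
fp16 = weight_bytes_per_layer(hidden)
mixed = weight_bytes_per_layer(hidden, attn_bits=8, ffn_bits=4)  # INT8 attention, INT4 FC
print(f"FP16: {fp16 / 2**20:.1f} MiB, INT4/INT8: {mixed / 2**20:.1f} MiB, ratio {fp16 / mixed:.2f}x")
# prints "FP16: 24.0 MiB, INT4/INT8: 8.0 MiB, ratio 3.00x"
```

Under these assumptions the fully connected module holds twice as many weights as the attention module, so halving its precision again (INT8 to INT4) is what brings the total from a 2x to a 3x reduction.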

Implications and Future Directions

Practically, ZeroQuant addresses a major obstacle to deploying NLP models: high inference cost. Its techniques may also carry over to other kinds of deep learning models, which could make those models usable on devices with a wider range of computational capacities.

Conceptually, the work clarifies the trade-offs between precision, accuracy, and computational overhead when quantizing at scale. The success of layer-by-layer distillation in particular suggests that finer-grained distillation schemes could be developed for architectures beyond Transformers.

Moving forward, the field might benefit from exploring:

- The impact of quantization on transfer learning applications, where model adaptability is as crucial as speed and efficiency.

- Extensions of the hardware-friendly quantization schemes to a wider range of hardware accelerators, which would broaden their use in distributed computing environments.

- Further investigation into the minimal dataset requirements for effective distillation, which could reduce the dependency on large datasets and broaden model applicability to scenarios where data acquisition is challenging.

In conclusion, ZeroQuant offers a structured, efficient approach to model quantization, addressing both operational performance and deployment affordability. It is a compelling contribution to the discussion on reducing barriers to practical NLP model deployment, and sets the stage for continued innovation in model efficiency techniques.