Seeing is Knowing! Fact-based Visual Question Answering using Knowledge Graph Embeddings

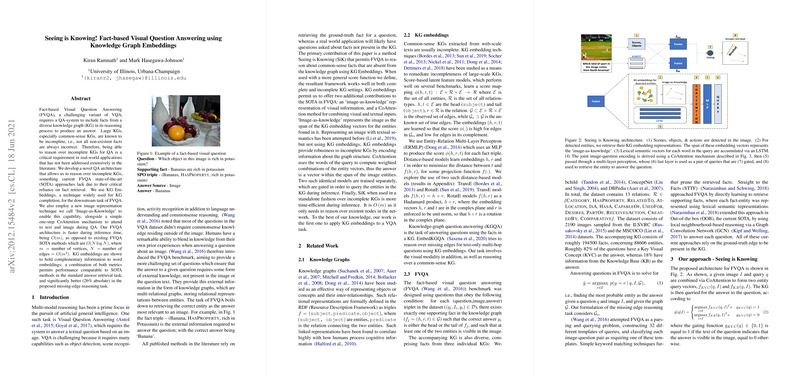

The paper, "Seeing is Knowing! Fact-based Visual Question Answering using Knowledge Graph Embeddings," introduces a new approach to Fact-based Visual Question Answering (FVQA). FVQA extends the Visual Question Answering (VQA) task: answering a question requires information that is not available in the image itself, and that information is supplied by Knowledge Graphs (KGs). The authors propose an architecture that addresses the shortcomings of current State-of-the-Art (SOTA) methods, particularly when working with incomplete KGs.

Methodology Overview

The proposed architecture uses KG embeddings to reason robustly over incomplete KGs in FVQA tasks. Its "Image-as-Knowledge" representation encodes the visual entities in an image as vectors in the KG embedding space, and a CoAttention mechanism combines these visual inputs with the textual question. The authors also report that the design remains computationally efficient, with lower inference complexity than existing methods.
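The idea of representing an image through KG embeddings can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the three-dimensional vectors, the entity names, and the function names `image_as_knowledge` and `attend` are hypothetical, and the single dot-product attention step is a deliberate simplification, not the paper's CoAttention mechanism.

```python
import math

# Hypothetical 3-dimensional KG embeddings; real ones would be learned from the KG.
KG_EMBEDDINGS = {
    "dog": [0.9, 0.1, 0.0],
    "frisbee": [0.1, 0.8, 0.2],
    "grass": [0.0, 0.2, 0.9],
}


def softmax(scores):
    """Numerically stable softmax over a non-empty list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def image_as_knowledge(detected_entities):
    """Represent an image as the KG embeddings of its detected entities.

    Entities without an embedding are skipped (an assumption of this sketch).
    """
    return [KG_EMBEDDINGS[name] for name in detected_entities if name in KG_EMBEDDINGS]


def attend(image_vectors, question_vector):
    """Weight each entity vector by its dot product with the question vector.

    This is one-directional attention, a simplification of co-attention.
    """
    scores = [sum(a * b for a, b in zip(vec, question_vector)) for vec in image_vectors]
    weights = softmax(scores)
    dim = len(question_vector)
    pooled = [sum(w * vec[i] for w, vec in zip(weights, image_vectors)) for i in range(dim)]
    return weights, pooled


# Assumed detector output; "unknown_object" has no KG embedding and is dropped.
image_vectors = image_as_knowledge(["dog", "frisbee", "grass", "unknown_object"])
# Assumed question encoding that points towards the "dog" direction.
weights, pooled = attend(image_vectors, question_vector=[1.0, 0.0, 0.0])
```

In this toy setup the attention weights sum to one and the entity most aligned with the question vector receives the largest weight, so the pooled vector leans towards it.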

The core innovation is the use of KG embeddings to encode both visual and common-sense information, bridging incomplete knowledge graphs and the question answering task. The authors demonstrate that these embeddings, particularly when combined with traditional word embeddings, improve performance, with an absolute gain of approximately 26% on questions that require missing-edge reasoning.
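To make the missing-edge setting concrete, the following sketch contrasts a lookup over stored edges with retrieval in embedding space. It is an illustration under stated assumptions, not the authors' implementation: the embeddings, the relation name `CapableOf`, the edge set, and the helper `nearest_entity` are all hypothetical, and `query_vector` merely stands in for whatever vector a trained model would predict.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 for a zero vector)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def nearest_entity(query_vector, entity_embeddings):
    """Return the entity most similar to the query, using one pass over all entities."""
    return max(entity_embeddings, key=lambda name: cosine(query_vector, entity_embeddings[name]))


# Hypothetical KG embeddings for candidate answer entities.
ENTITY_EMBEDDINGS = {
    "bark": [0.8, 0.2, 0.1],
    "fly": [0.1, 0.9, 0.1],
    "grow": [0.0, 0.1, 0.9],
}

# Edges actually stored in the toy KG; (dog, CapableOf, bark) is deliberately missing.
KNOWN_EDGES = {("frisbee", "CapableOf", "fly")}

# A lookup over stored edges finds nothing for the question "what can a dog do?".
edge_found = ("dog", "CapableOf", "bark") in KNOWN_EDGES

# Stand-in for the answer vector a trained model might predict for that question.
query_vector = [0.9, 0.1, 0.0]
answer = nearest_entity(query_vector, ENTITY_EMBEDDINGS)
```

Here the edge lookup fails, yet the nearest-neighbour search still returns `bark`, because the answer is selected by proximity in embedding space and not by the presence of an explicit edge.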

Results and Implications

The empirical results indicate that the proposed approach performs comparably to, or better than, existing techniques on the standard answer retrieval task, with strong improvements in scenarios constructed to test missing-edge reasoning. Specifically, a composite score that combines KG similarity, Jaccard similarity, and GloVe similarity proved effective because it integrates complementary lexical and graph-based information.
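A composite score of this kind might be sketched as follows. The summary does not state how the three similarities are weighted or how Jaccard similarity is computed, so the equal default weights, the word-level Jaccard, the two-dimensional toy vectors, and the function names are all assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 for a zero vector)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def jaccard(a, b):
    """Word-level Jaccard similarity between two strings (assumed tokenisation)."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    union = set_a | set_b
    if not union:
        return 0.0
    return len(set_a & set_b) / len(union)


def composite_score(kg_sim, jaccard_sim, glove_sim, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three similarities; equal weights are an assumption."""
    w_kg, w_jac, w_glove = weights
    return w_kg * kg_sim + w_jac * jaccard_sim + w_glove * glove_sim


# Hypothetical model prediction, with toy KG and GloVe vectors.
predicted = {"name": "hot dog", "kg": [0.9, 0.1], "glove": [0.7, 0.3]}

# Hypothetical candidate answers, each with its own toy KG and GloVe vectors.
candidates = {
    "dog": {"kg": [0.8, 0.2], "glove": [0.6, 0.4]},
    "frisbee": {"kg": [0.1, 0.9], "glove": [0.2, 0.8]},
}


def score(name):
    """Composite score of one candidate against the prediction."""
    cand = candidates[name]
    return composite_score(
        cosine(predicted["kg"], cand["kg"]),
        jaccard(predicted["name"], name),
        cosine(predicted["glove"], cand["glove"]),
    )


best = max(candidates, key=score)
```

The lexical term (Jaccard) rewards surface overlap between answer strings, while the two embedding terms reward graph-based and distributional similarity, which is the sense in which the signals complement each other.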

These findings matter because they offer a way for models to reason reliably when not all background knowledge is encoded in the accessible KG. This is of practical value in real-world applications, where data is often fragmented or incomplete.

Theoretical Contributions

Theoretically, this work introduces an important extension to the domain of multi-modal machine reasoning, pushing the envelope of how artificial systems can emulate human-like reasoning with incomplete information. The notion of representing images through KG embeddings presents an intriguing fusion of multi-relational data and visual recognition capabilities.

Prospective Research Directions

Looking forward, this paper opens several avenues for further exploration. Future work could make the embeddings more robust to higher degrees of KG incompleteness or improve the interpretability of the CoAttention mechanism. The approach could also be applied beyond visual question answering to other multi-modal reasoning tasks, possibly integrating contextual embeddings to further enrich the data representations.

The proposed method is a useful step towards AI systems capable of contextual understanding, and it underlines the utility of embeddings for bridging gaps between disconnected or incomplete knowledge sources. It also contributes to the understanding of how real-world information processing tasks can be approached when background knowledge is incomplete.