MedQA: An Open Domain QA Dataset for Medical Problems

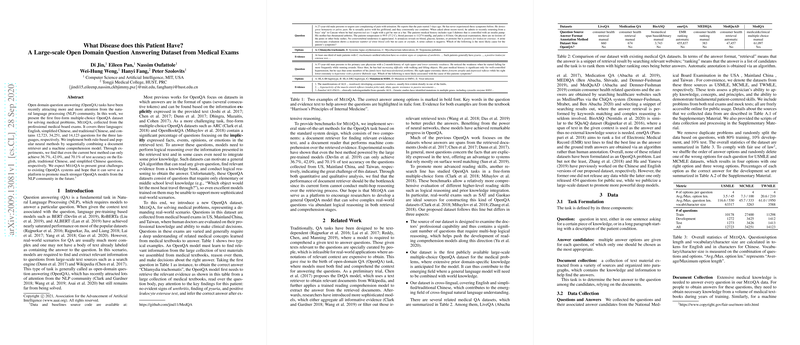

The paper introduces MedQA, a large-scale open-domain question answering (OpenQA) dataset for solving medical problems. The dataset is difficult for current NLP models and is intended as a benchmark to drive the development of stronger OpenQA models.

Dataset Description

MedQA is collected from professional medical board exams and covers three languages: English, simplified Chinese, and traditional Chinese. It contains 12,723 questions in English, 34,251 in simplified Chinese, and 14,123 in traditional Chinese. This multilingual coverage makes the dataset usable for cross-lingual NLP research.

The dataset is formulated as a multiple-choice QA task: each question comes with a set of candidate answers from which the correct one must be selected. Because the questions are taken from real medical exams, matching surface text is not enough to answer them; a system needs reading comprehension, reasoning, and substantial medical knowledge.
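As a rough illustration of the multiple-choice formulation, one question can be represented as a record holding the question text, a mapping from option labels to option texts, and the label of the correct option. The class name, field names, and the toy item below are assumptions made for this sketch; they are not the dataset's actual schema, and the item is not taken from MedQA.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class MultipleChoiceQuestion:
    """Hypothetical record layout; not the official MedQA schema."""
    question: str
    options: Dict[str, str]  # option label -> option text
    answer: str              # label of the correct option

    def __post_init__(self) -> None:
        # The gold label must refer to one of the listed options.
        if self.answer not in self.options:
            raise ValueError(f"answer {self.answer!r} is not an option label")

    def is_correct(self, predicted_label: str) -> bool:
        return predicted_label == self.answer


# Invented toy item for illustration only (not taken from the dataset).
item = MultipleChoiceQuestion(
    question="Deficiency of which vitamin causes scurvy?",
    options={"A": "Vitamin A", "B": "Vitamin C", "C": "Vitamin D", "D": "Vitamin K"},
    answer="B",
)
```

A model's output for such an item is a single option label, which `is_correct` compares against the gold label.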

Methodology

To benchmark MedQA, the authors implement both rule-based and neural methods built around a two-step design: a document retriever followed by a document reader. The retrieval step uses methods such as PMI and customized IR systems to find relevant evidence in a text corpus of medical textbooks. The document reader uses neural architectures, including BiGRU and pre-trained models such as BERT and RoBERTa.
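The two-step design can be sketched in a few lines. This is a minimal toy version under stated assumptions, not the authors' implementation: the retriever ranks passages by IDF-weighted term overlap instead of using a real IR system, the reader simply counts option terms in the retrieved evidence instead of running a neural model, and the query is assumed to be the question concatenated with each option. The function names, the three-passage corpus, and the question are all invented for illustration.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: List[str], k: int = 1) -> List[str]:
    """Step 1: rank passages by IDF-weighted term overlap with the query."""
    docs = [set(tokenize(p)) for p in corpus]
    doc_freq = Counter(t for d in docs for t in d)
    query_terms = set(tokenize(query))

    def score(doc_terms):
        return sum(math.log(1 + len(docs) / doc_freq[t]) for t in query_terms & doc_terms)

    ranked = sorted(range(len(docs)), key=lambda i: score(docs[i]), reverse=True)
    return [corpus[i] for i in ranked[:k]]


def read(option_text: str, evidence: List[str]) -> int:
    """Step 2: toy reader that counts option terms found in the evidence."""
    evidence_counts = Counter(t for p in evidence for t in tokenize(p))
    return sum(evidence_counts[t] for t in set(tokenize(option_text)))


def answer(question: str, options: Dict[str, str], corpus: List[str], k: int = 1) -> str:
    scores = {}
    for label, text in options.items():
        # Assumption: the query is the question concatenated with the option.
        evidence = retrieve(question + " " + text, corpus, k)
        scores[label] = read(text, evidence)
    return max(scores, key=scores.get)


# Invented toy corpus and question, for illustration only.
corpus = [
    "Scurvy results from ascorbic acid deficiency and causes bleeding gums.",
    "Beriberi results from thiamine deficiency.",
    "Pernicious anemia is associated with cobalamin deficiency.",
]
question = "Which nutrient deficiency causes bleeding gums in scurvy?"
options = {"A": "Thiamine", "B": "Ascorbic acid", "C": "Cobalamin"}
predicted = answer(question, options, corpus)  # "B"
```

Keeping `retrieve` and `read` separate mirrors the retriever/reader split: either step can be replaced (for example, the reader by a fine-tuned model) without touching the other.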

Even with these models, the experimental results show how difficult MedQA is. The best-performing system achieves 36.7% accuracy for English, 42.0% for traditional Chinese, and 70.1% for simplified Chinese. These results reflect both the complexity of the dataset and the limitations of current OpenQA models.
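For reference, accuracy here is the fraction of questions for which the predicted option matches the gold option. The sketch below shows that computation together with the accuracy expected from uniform random guessing. The number of options per question is not stated in this summary, so it is left as a parameter, and the labels in the example are invented.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of questions whose predicted label equals the gold label."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold labels must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty set")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


def chance_accuracy(num_options: int) -> float:
    """Expected accuracy of uniform random guessing with one correct option."""
    return 1.0 / num_options


# Invented labels for illustration only.
gold = ["A", "C", "B", "D"]
predictions = ["A", "B", "B", "D"]
score = accuracy(predictions, gold)  # 3 of 4 correct -> 0.75
```

Comparing a reported accuracy against `chance_accuracy` for the relevant number of options gives a sense of how far a system is above guessing.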

Challenges

MedQA introduces several unique challenges:

- Domain-Specific Knowledge: Unlike general QA tasks, MedQA requires an understanding of professional medical concepts. This strains pre-trained language models, which typically do well on tasks that need only common-sense knowledge.

- Diverse Question Types: The dataset includes two primary question types: straightforward knowledge queries and complex patient-case evaluations necessitating multi-hop reasoning.

- Evidence Retrieval Complexity: The need for extensive reasoning over large-scale documents makes retrieval challenging, particularly when multi-step inference is required.
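The retrieval difficulty can be illustrated with a patient-case question whose answer needs two pieces of evidence: one linking the symptoms to a diagnosis, and one linking the diagnosis to a treatment. The sketch below is a toy two-hop retriever under simple assumptions (plain term overlap, a three-passage invented corpus); it is not a method from the paper. The second hop expands the query with terms from the first retrieved passage.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_passage(query_terms: Set[str], corpus: List[str], exclude: Set[int]) -> int:
    """Index of the not-yet-used passage sharing the most terms with the query."""
    candidates = [i for i in range(len(corpus)) if i not in exclude]
    return max(candidates, key=lambda i: len(query_terms & tokenize(corpus[i])))


def two_hop_retrieve(question: str, corpus: List[str]) -> List[str]:
    query_terms = tokenize(question)
    first = best_passage(query_terms, corpus, exclude=set())
    # Hop 2: add the first passage's terms so the diagnosis can guide retrieval.
    expanded = query_terms | tokenize(corpus[first])
    second = best_passage(expanded, corpus, exclude={first})
    return [corpus[first], corpus[second]]


# Invented toy corpus and question, for illustration only.
corpus = [
    "Fever, neck stiffness and photophobia suggest bacterial meningitis.",
    "Empiric therapy for bacterial meningitis includes ceftriaxone.",
    "Iron deficiency anemia is treated with oral iron supplementation.",
]
question = "A patient has fever, neck stiffness and photophobia. Which drug is indicated?"
evidence = two_hop_retrieve(question, corpus)
```

In this toy case the treatment passage shares no terms with the question itself, so a single-hop retriever would rank the unrelated anemia passage above it; the passage only becomes reachable once "bacterial meningitis" enters the query through the first hop.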

Implications and Future Work

MedQA points to several directions for future work on QA systems. Its difficulty calls for advances in multi-hop reasoning, more robust information retrieval, and the integration of specialized domain knowledge into QA models. The cross-lingual component of MedQA also supports research on language-agnostic QA systems.

Future research could focus on giving retrieval systems stronger reasoning abilities, improving comprehension models for domain-specific knowledge, and using transfer learning to raise performance on challenging datasets of this kind. MedQA provides a benchmark for developing robust QA systems that can handle complex, real-world problems.