Overview of "Huatuo-26M, a Large-scale Chinese Medical QA Dataset"

The paper introduces Huatuo-26M, a Chinese medical question-answering (QA) dataset containing 26 million QA pairs. Its main distinguishing feature is scale: the authors describe it as larger than existing medical QA datasets, and it is intended to meet the data demands of pre-trained language models (PLMs) in medical NLP applications. The authors emphasize how difficult it is to apply PLMs to medical fields and point to the need for abundant, high-quality data. They expect the dataset to improve the capabilities of PLMs in the medical domain.

Dataset Composition and Acquisition

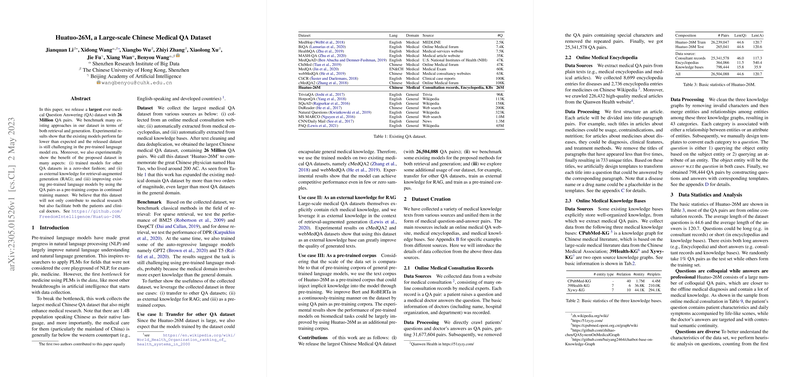

The Huatuo-26M dataset comprises QA pairs derived from three kinds of sources: online medical consultation records, medical encyclopedias, and existing medical knowledge bases. The data were collected, cleaned, and de-duplicated, resulting in a dataset that surpasses existing medical QA datasets in scale and covers a wide variety of questions paired with professional answers.

- Online Medical Consultation Records: The largest portion of the dataset was obtained from online platforms where patients seek advice from medical professionals. This contributed to a significant number of colloquial questions paired with expert responses.

- Medical Encyclopedias and Knowledge Bases: Additional data was harvested from medical encyclopedias and structured knowledge bases, complementing the data with more systematic and formal medical information.
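The summary mentions cleaning and de-duplication without describing the procedure. The following is a minimal sketch of exact-match de-duplication after text normalization, not the authors' pipeline. The functions `normalize` and `dedupe_qa_pairs`, the choice of NFKC normalization, and the sample pairs are all assumptions made for illustration.

```python
import hashlib
import unicodedata


def normalize(text: str) -> str:
    """Apply NFKC normalization, lowercase, and remove all whitespace."""
    text = unicodedata.normalize("NFKC", text).lower()
    return "".join(text.split())


def dedupe_qa_pairs(pairs):
    """Keep the first occurrence of each (question, answer) pair.

    Two pairs count as duplicates when their normalized question and
    answer are identical (hypothetical criterion, exact match only).
    """
    seen = set()
    unique = []
    for question, answer in pairs:
        key_text = normalize(question) + "\x00" + normalize(answer)
        key = hashlib.sha1(key_text.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append((question, answer))
    return unique


# Made-up sample data: the second pair differs from the first only in
# whitespace and in half-width versus full-width punctuation.
sample = [
    ("感冒了怎么办?", "多喝水,注意休息。"),
    ("感冒了 怎么办?", "多喝水,注意休息。"),
    ("高血压可以运动吗?", "可以进行适度的有氧运动。"),
]
unique_pairs = dedupe_qa_pairs(sample)
print(len(unique_pairs))
```

Exact matching only catches trivially repeated entries. Near-duplicate detection (for example, with MinHash) would be a natural extension, but whether the authors used anything like it is not stated here.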

Benchmarking and Evaluation

The authors benchmark Huatuo-26M with classical retrieval and generative models:

- Retrieval Models: Sparse (BM25, DeepCT) and dense (DPR) retrieval models were evaluated, demonstrating that existing models face challenges when applied to this dataset, primarily due to the intricate medical knowledge required.

- Generative Models: The authors fine-tuned T5 and GPT-2 models on Huatuo-26M data. Fine-tuning brought significant improvements, but the generated answers still leave room for improvement, reflecting the complexity and diversity of the dataset content.
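To make the sparse-retrieval baseline concrete, here is a small self-contained BM25 scorer. It is a sketch under stated assumptions rather than the paper's evaluation code: it assumes the task is to rank candidate answers for a question, uses character-level tokens as a simple stand-in for a Chinese word segmenter, and uses common default values for `k1` and `b`. The class name `BM25` and the toy answer pool are illustrative.

```python
import math
from collections import Counter


def tokenize(text):
    # Assumption: character-level tokens, ignoring whitespace.
    return [ch for ch in text if not ch.isspace()]


class BM25:
    def __init__(self, docs, k1=1.5, b=0.75):
        self.k1, self.b = k1, b
        self.doc_tokens = [tokenize(d) for d in docs]
        self.doc_freqs = [Counter(tokens) for tokens in self.doc_tokens]
        self.avgdl = sum(len(t) for t in self.doc_tokens) / len(docs)
        n = len(docs)
        # Document frequency: number of documents containing each term.
        df = Counter(term for freqs in self.doc_freqs for term in freqs)
        self.idf = {
            term: math.log(1 + (n - count + 0.5) / (count + 0.5))
            for term, count in df.items()
        }

    def score(self, query, idx):
        freqs = self.doc_freqs[idx]
        dl = len(self.doc_tokens[idx])
        total = 0.0
        for term in tokenize(query):
            tf = freqs.get(term, 0)
            if tf == 0:
                continue
            length_norm = 1 - self.b + self.b * dl / self.avgdl
            total += self.idf[term] * tf * (self.k1 + 1) / (tf + self.k1 * length_norm)
        return total

    def top_k(self, query, k=1):
        # Rank document indices by descending BM25 score.
        order = sorted(
            range(len(self.doc_tokens)),
            key=lambda i: self.score(query, i),
            reverse=True,
        )
        return order[:k]


# Toy answer pool (made-up data).
answers = [
    "多喝水,注意休息。",
    "可以进行适度的有氧运动。",
    "建议低盐饮食并定期测量血压。",
]
bm25 = BM25(answers)
best = bm25.top_k("高血压患者饮食要注意什么", k=1)
print(best)
```

A lexical scorer like this only rewards surface overlap between question and answer, which is one plausible reason such models struggle when the correct answer depends on medical knowledge rather than shared wording.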

Practical and Theoretical Implications

The dataset has several potential applications:

- Transfer Learning: Models pre-trained on Huatuo-26M generalize well to other medical QA datasets, which supports its use as a pre-training resource for transfer learning.

- Retrieval-Augmented Generation (RAG): Leveraging the dataset as an external knowledge base significantly improves text generation quality in RAG setups, highlighting the dataset's value in integrating PLMs with non-parametric memory.

- Continued Pre-training: Using Huatuo-26M as a pre-training corpus enhances existing models' performance in medical NLP tasks, as evidenced by improvements on the CBLUE medical benchmark.
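The retrieval-augmented setup listed above can be sketched as follows: retrieve the most similar QA pairs from the dataset and prepend them to the question before passing the text to a generator. This is an illustrative sketch only. The character-overlap retriever, the prompt template, and the names `char_overlap` and `build_rag_input` are assumptions, and the actual generator call is omitted.

```python
def char_overlap(a, b):
    """Jaccard similarity over character sets (a deliberately crude retriever)."""
    set_a, set_b = set(a), set(b)
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def build_rag_input(question, knowledge_base, k=2):
    """Prepend the k stored QA pairs most similar to the question.

    knowledge_base is a list of (question, answer) tuples acting as
    the external, non-parametric memory.
    """
    ranked = sorted(
        knowledge_base,
        key=lambda qa: char_overlap(question, qa[0]),
        reverse=True,
    )
    context_lines = [f"Q: {q}\nA: {a}" for q, a in ranked[:k]]
    # Hypothetical template: retrieved pairs first, then the new question.
    return "\n\n".join(context_lines + [f"Q: {question}\nA:"])


# Made-up knowledge base entries.
knowledge_base = [
    ("感冒了怎么办?", "多喝水,注意休息。"),
    ("高血压可以运动吗?", "可以进行适度的有氧运动。"),
    ("高血压饮食要注意什么?", "建议低盐饮食。"),
]
prompt = build_rag_input("高血压患者可以跑步吗?", knowledge_base, k=2)
print(prompt)
```

In practice the retriever would be one of the sparse or dense models from the benchmark, and the assembled text would be fed to a fine-tuned generator. The template here only shows where the retrieved pairs enter the input.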

Conclusions and Future Directions

Huatuo-26M is a substantial contribution to Chinese medical NLP, providing a large resource at a time of growing interest in integrating AI into healthcare. The dataset opens up opportunities for future research, notably in improving the ability of LLM-based QA systems to reason with domain-specific knowledge. However, the authors caution that the dataset may contain inaccuracies and that the current limitations of generation technologies pose risks in real-world medical applications. Future improvements could involve expanding the dataset to other languages and systematically curating the data to ensure higher accuracy and reliability.

Overall, Huatuo-26M presents both opportunities and challenges, charting a path forward for increased AI involvement in healthcare through more effective and accessible LLMs.