Explainability in Deep Reinforcement Learning

The paper "Explainability in Deep Reinforcement Learning" by Alexandre Heuillet, Fabien Couthouis, and Natalia Díaz-Rodríguez surveys the current landscape of Explainable Reinforcement Learning (XRL), the branch of Explainable Artificial Intelligence (XAI) concerned with making Reinforcement Learning (RL) models interpretable to humans. Deep RL models are complex systems that are often perceived as black boxes, so explainability methods are needed to make them transparent, especially in critical applications that affect the general public.

Overview of Explainable Reinforcement Learning

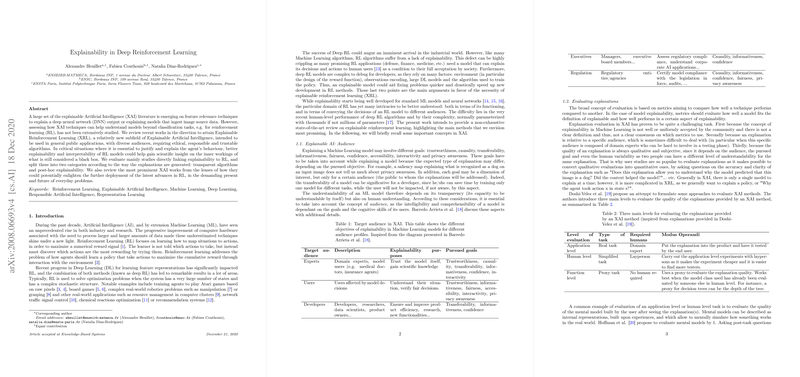

Explaining the behavior of RL models poses challenges that traditional machine learning models do not, because an RL agent learns a policy through interaction with an environment rather than simply mapping inputs to outputs. The paper groups XRL techniques into two main families: transparent algorithms and post-hoc explainability.

- Transparent Algorithms: These methods are interpretable by design. In RL, transparent methods are typically built for specific tasks, with structural knowledge about the task incorporated directly into the model. Examples include hierarchical reinforcement learning and reward decomposition, which can reach state-of-the-art performance on particular tasks while also explaining the agent's decisions. Causal models, such as action influence models, add further insight by representing the causal relationships that drive the agent's decisions.

- Post-Hoc Explainability: Post-hoc methods make an already-trained black-box model more interpretable. Techniques such as saliency maps and analysis of interaction data help reveal which parts of the input influence the agent's decisions. Although promising, these methods must be interpreted carefully: they rely heavily on visual or statistical summaries that can oversimplify or misrepresent the complex decision-making of RL models.
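To make the reward decomposition idea from the transparent-algorithms bullet more concrete, here is a minimal sketch, assuming a discrete-action agent whose Q-function is the sum of per-component Q-values, Q(s, a) = Σ_c Q_c(s, a). The component names, actions, and numbers are hypothetical and hard-coded for a single state; in a trained agent each row would come from a separate value head learned on its own reward channel. The explanation compares the chosen action with an alternative component by component, which is one simple way such a decomposition can be read, not necessarily the specific method of any paper the survey covers.

```python
from typing import Dict, List, Tuple

# Hypothetical reward components and actions for a single state.
COMPONENTS = ["goal_progress", "fuel_cost", "collision_risk"]
ACTIONS = ["left", "straight", "right"]

# Q_COMPONENTS[c][a] is the (hypothetical) value of action a under reward
# component c. In a real agent each row would come from its own Q-head.
Q_COMPONENTS: List[List[float]] = [
    [1.0, 3.0, 2.0],     # goal_progress
    [-0.5, -1.0, -0.5],  # fuel_cost
    [0.0, -1.5, 0.0],    # collision_risk
]


def total_q(q: List[List[float]]) -> List[float]:
    """Sum the components: Q(s, a) = sum over c of Q_c(s, a)."""
    return [sum(row[a] for row in q) for a in range(len(q[0]))]


def choose_action(q: List[List[float]]) -> Tuple[int, List[float]]:
    """Greedy action on the summed Q-values, plus the totals themselves."""
    totals = total_q(q)
    best = max(range(len(totals)), key=lambda a: totals[a])
    return best, totals


def explain_preference(
    q: List[List[float]], chosen: int, alternative: int
) -> Dict[str, float]:
    """Per-component advantage of `chosen` over `alternative`.

    The values sum to the gap between the two actions' total Q-values.
    """
    return {name: row[chosen] - row[alternative] for name, row in zip(COMPONENTS, q)}


if __name__ == "__main__":
    best, totals = choose_action(Q_COMPONENTS)
    print("chosen:", ACTIONS[best], "totals:", totals)
    straight = ACTIONS.index("straight")
    for name, delta in explain_preference(Q_COMPONENTS, best, straight).items():
        print(f"  vs straight, {name}: {delta:+.1f}")
```

With these numbers the agent picks `right` (total 1.5) over `straight` (total 0.5) even though `straight` scores higher on `goal_progress`. The decomposition attributes the preference to lower collision risk (+1.5) and lower fuel cost (+0.5), which outweigh the goal-progress deficit (-1.0). Because the explanation is read directly off the model's own value structure, it needs no separate post-hoc approximation.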
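For the saliency maps mentioned under post-hoc explainability, the sketch below shows one simple perturbation-based variant (occlusion), chosen here for illustration: each input feature is replaced in turn by a baseline value, and its saliency is how much the probability of the chosen action changes. The linear softmax policy `W`, the four-feature state, and the baseline of 0.0 are all hypothetical stand-ins for a trained network and its observations; image-based agents would typically perturb patches of pixels rather than single features.

```python
import math
from typing import List

# Hypothetical linear policy standing in for a trained network:
# logits[a] = sum_i W[a][i] * state[i], followed by a softmax.
W: List[List[float]] = [
    [2.0, 0.0, 0.0, 0.5],  # action 0
    [0.0, 1.0, 0.0, 0.5],  # action 1
]


def policy_probs(state: List[float]) -> List[float]:
    """Softmax over the linear logits (max-subtracted for numerical stability)."""
    logits = [sum(w * x for w, x in zip(row, state)) for row in W]
    m = max(logits)
    exps = [math.exp(logit - m) for logit in logits]
    z = sum(exps)
    return [e / z for e in exps]


def occlusion_saliency(
    state: List[float], action: int, baseline: float = 0.0
) -> List[float]:
    """Saliency of each feature: |change in P(action)| when that feature
    alone is replaced by `baseline`."""
    base_p = policy_probs(state)[action]
    scores = []
    for i in range(len(state)):
        perturbed = list(state)
        perturbed[i] = baseline
        scores.append(abs(base_p - policy_probs(perturbed)[action]))
    return scores


if __name__ == "__main__":
    state = [1.0, 1.0, 1.0, 1.0]
    probs = policy_probs(state)
    action = max(range(len(probs)), key=lambda a: probs[a])
    for i, s in enumerate(occlusion_saliency(state, action)):
        print(f"feature {i}: saliency {s:.3f}")
```

Feature 3 has nonzero weights yet zero saliency, because removing it lowers both logits by the same amount and a softmax is unchanged by such a shift. This is a small instance of the interpretation pitfalls noted above: a saliency score measures sensitivity to one particular perturbation and baseline, not whether the model "uses" a feature, so a different baseline or perturbation scheme can produce a different map.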

Implications and Future Research

The split between transparent algorithms and post-hoc strategies suggests that both approaches are needed to advance explainability in RL. Representation learning, disentanglement, and compositionality are potential ways to further increase transparency. Theoretical advances that would let these approaches generalize across diverse complex environments and algorithms are also crucial, because current methods are typically tailored to specific environments or rely on task-specific features, which limits their general applicability.

A notable point in the paper is that model performance alone is not enough: explanations must also provide actionable insights for different audiences, from domain experts to end-users. The paper's comprehensive taxonomy could guide future XRL research in connecting theoretical advances in AI with real-world demands for accountability and transparency.

Conclusion

The paper underlines the need for extensible, audience-oriented approaches that make RL systems as interpretable as they are performant. Going forward, XRL will require innovation in foundational techniques, potentially drawing on symbolic AI, lifelong learning, and interdisciplinary insights to meet the growing needs of real-world applications. Progress toward more general frameworks and broadly applicable post-hoc methods will be key to bridging the gap between RL model outputs and human understanding, thereby fostering broader acceptance and deployment in society.