An Analysis of "A Survey on Explainable Reinforcement Learning: Concepts, Algorithms, and Challenges"

The surveyed paper, "A Survey on Explainable Reinforcement Learning: Concepts, Algorithms, and Challenges," by Yunpeng Qing et al., provides an extensive review of the growing field of Explainable Reinforcement Learning (XRL). The paper offers a structured overview of the existing body of research on explainability in reinforcement learning (RL) and of the frameworks that have been developed to improve it. This analysis outlines the key conceptual frameworks and methodologies the authors put forth and discusses their implications.

Core Contributions and Methodological Taxonomy

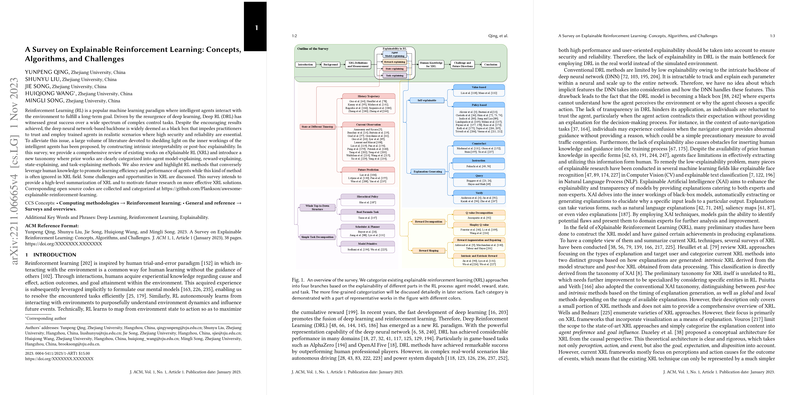

The authors present a detailed taxonomy that classifies explainable reinforcement learning techniques primarily into four categories: agent model-explaining, reward-explaining, state-explaining, and task-explaining methods. Each category reveals a different facet of RL explainability:

- Agent Model-explaining Methods: These methods target the explainability of the agent's decision-making model itself. The paper further divides them into self-explainable models and explanation-generating models. The former rely on inherently interpretable models such as decision trees and fuzzy controllers. The latter, in contrast, add auxiliary mechanisms that generate explanations through logical reasoning and counterfactual analysis.

- Reward-explaining Methods: These methods decompose or reshape the reward function into explainable components, exposing which aspects of the reward drive agent behavior. Techniques such as reward decomposition and reward shaping are examined for their potential to provide nuanced insight into the agent's learning incentives.

- State-explaining Methods: By analyzing the state signals an RL agent processes, these methods aim to identify which state features significantly influence its decisions. The methodologies include inference from historical trajectories, analysis of the current observation, and prediction of future states.

- Task-explaining Methods: The paper outlines hierarchical reinforcement learning (HRL) approaches that afford architectural explanation by decomposing complex tasks into simpler, manageable subtasks. This decomposition offers intrinsic insight into task execution strategies employed by RL agents.
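To make the reward-decomposition idea more concrete, the following is a minimal sketch, not a method taken from the survey. It assumes an agent that keeps one Q-value per reward component, acts greedily on their sum, and explains a choice by listing, per component, how much better or worse the chosen action is than an alternative. The component names ("progress", "safety", "energy") and the Q-values are hypothetical and hand-set for illustration; a real agent would learn them, for example with one Q-head per component.

```python
from typing import Dict, List

# Hypothetical per-component Q-values for a single state:
# component name -> action -> Q_c(s, a). Hand-set here for illustration only.
ComponentQ = Dict[str, Dict[str, float]]


def total_q(q: ComponentQ, action: str) -> float:
    """Aggregate Q-value: the sum of all component Q-values for `action`."""
    return sum(component[action] for component in q.values())


def greedy_action(q: ComponentQ, actions: List[str]) -> str:
    """Choose the action with the highest summed Q-value."""
    return max(actions, key=lambda a: total_q(q, a))


def reward_difference(q: ComponentQ, chosen: str, alternative: str) -> Dict[str, float]:
    """Per-component advantage of `chosen` over `alternative`.

    Positive entries are components that favour the chosen action;
    negative entries are components that argue against it.
    """
    return {name: comp[chosen] - comp[alternative] for name, comp in q.items()}


if __name__ == "__main__":
    actions = ["left", "right"]
    q_values: ComponentQ = {
        "progress": {"left": 1.0, "right": 3.0},
        "safety": {"left": 0.5, "right": -1.0},
        "energy": {"left": -0.2, "right": -0.4},
    }
    best = greedy_action(q_values, actions)
    print(best)  # right
    print(reward_difference(q_values, best, "left"))
```

In this toy setting the explanation reads as "the agent moves right because the gain in progress outweighs the loss in safety and the small extra energy cost", which is the kind of component-level account reward-explaining methods aim to provide. A scalar Q-value alone would only report that "right" scores higher.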

Implications and Opportunities for Future Research

The paper effectively showcases the diverse approaches used to achieve explainability in RL systems. Its taxonomy suggests that the opacity of RL agents can be reduced by targeting specific components of the learning problem or by employing hierarchical structures.

However, the survey acknowledges that XRL is still at a nascent stage and highlights several areas that need further exploration. One notable direction is the integration of human knowledge into the RL paradigm, which holds promise for improving explainability by drawing on human intuition. The development of more sophisticated, broadly applicable evaluation metrics also remains underexplored. Effective evaluation tools are essential for judging the practical utility of different explainability methods, and they are likely to be a focus of future research in the AI community.

Theoretical and Practical Considerations

From a theoretical standpoint, the proposed classification fosters a structured understanding of the different dimensions and scopes of explainability in RL systems. The inclusion of reward-explaining and state-explaining approaches highlights the importance of isolating and explaining individual components of the RL problem, such as the reward signal and the state representation, to interpret in depth how agents learn and make decisions.

Practically, improving explainability is crucial for deploying RL systems in real-world settings that demand transparency and reliability, such as autonomous driving and healthcare. Addressing challenges such as the trade-off between model performance and interpretability, and developing techniques that adapt to varied environments, will be essential for further progress.

Conclusion

In conclusion, this paper delivers a substantive review of current trends and methodologies in explainable reinforcement learning and opens important discussions on overcoming present limitations. Through its thorough examination of diverse XRL methods, the authors provide a foundation on which future work can build toward RL technologies that are both effective and interpretable in broader real-world applications.