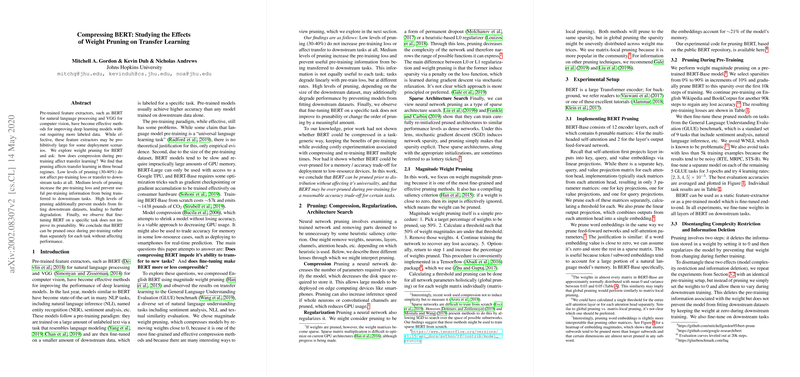

Effects of Weight Pruning on BERT for Transfer Learning

The paper "Compressing BERT: Studying the Effects of Weight Pruning on Transfer Learning" investigates the implications of applying weight pruning techniques to BERT, a prominent pre-trained model for NLP. The authors aim to evaluate how such compression affects the model's performance during the crucial stage of transfer learning.

Key Findings

- Pruning Regimes:

- Low Levels of Pruning (30-40%): At this pruning level, BERT maintains its pre-training loss and transfers knowledge to downstream tasks as effectively as the unpruned model. This suggests that a substantial share of BERT's weights is redundant for both pre-training and transfer.

- Medium Levels of Pruning: Increasing pruning leads to higher pre-training loss and hinders effective transfer to downstream tasks. Each task's degradation varies, indicating different levels of dependency on the pruned pre-training information.

- High Levels of Pruning: At this stage, BERT degrades further because its reduced capacity leaves it struggling to fit the downstream datasets.

- Task Agnostic Compression Viability:

- The paper shows that BERT can be pruned once during pre-training, rather than separately for each downstream task, without affecting performance across tasks. Notably, fine-tuning BERT on a specific task does not make it any more prunable.

- Implications of Pruning:

- Compression vs. Accuracy Trade-off: Pruning can shrink a model considerably without substantial loss in accuracy, which is essential for deployment on low-resource devices.

- Insight into Model Architecture: Pruning acts as both compression and architecture search, shedding light on the redundancies within BERT’s network architecture.

- Practical Considerations:

- Developers can prune 30-40% of BERT's weights once and reuse the pruned model across downstream tasks, avoiding the cost of repeating pruning experiments for each task.
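To make the pruning levels above concrete, here is a minimal sketch of weight pruning on a toy weight matrix. It assumes a magnitude-based criterion (zeroing the weights with the smallest absolute values), which this summary does not specify. The names `magnitude_prune`, `sparsity_of`, and `toy_layer` are hypothetical, and the values are made up for illustration rather than taken from BERT.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude entries set to 0.0.

    weights  : list of rows (lists of floats), a toy stand-in for one weight matrix
    sparsity : fraction of entries to zero out, between 0.0 and 1.0
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")

    # Rank all entries by absolute value, smallest first.
    magnitudes = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(magnitudes) * sparsity)
    if n_prune == 0:
        return [list(row) for row in weights]

    # Every entry at or below this magnitude is pruned.
    # Ties at the threshold can prune slightly more than requested.
    threshold = magnitudes[n_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def sparsity_of(weights):
    """Fraction of entries that are exactly zero."""
    total = sum(len(row) for row in weights)
    zeros = sum(1 for row in weights for w in row if w == 0.0)
    return zeros / total


# Illustrative values only; not real BERT weights.
toy_layer = [
    [0.50, -0.02, 0.31, -0.75, 0.08],
    [-0.12, 0.90, -0.04, 0.27, -0.66],
]

# 40% sparsity corresponds to the upper end of the "low" pruning regime.
pruned = magnitude_prune(toy_layer, 0.4)
print(pruned)
print(sparsity_of(pruned))
```

In the task-agnostic setting described above, a mask like this would be computed once on the pre-trained weights and the same pruned model would then be fine-tuned on each downstream task. That workflow is only an illustration of the idea, not the authors' exact procedure.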

Implications and Future Directions

The research extends beyond immediate applications, raising the question of whether the results replicate in other large NLP models such as XLNet, RoBERTa, and GPT-2. Given their similar architectures, the findings regarding pre-trained models’ compressibility and the maintenance of transfer learning efficacy may apply broadly.

Future work on more nuanced methods for preserving inductive bias during pruning seems promising. Understanding how each task's reliance on language modeling interacts with pruning could help researchers devise more precisely compressed models.

Conclusions

This paper provides crucial insights into the efficiency and limitations of pruning BERT for transfer learning. At low pruning levels, BERT can be compressed without losing its generalization capabilities, which is vital for resource-constrained deployment scenarios. However, preserving inductive bias under heavier pruning remains a challenge, indicating the balance that must be struck between model robustness and memory efficiency. These insights matter for future advances in model compression and transfer learning within NLP.