DistilBERT: A Distilled Version of BERT with Superior Efficiency

In the evolving landscape of NLP, large-scale pre-trained models have become an indispensable tool, enhancing performance across a wide array of tasks. However, the computational demands and environmental costs of these models present significant challenges, particularly when aiming for efficient on-device computations. The paper "DistilBERT, a distilled version of BERT: smaller, faster, cheaper, and lighter" by Victor Sanh, Lysandre Debut, Julien Chaumond, and Thomas Wolf addresses these issues by introducing a smaller and more efficient version of BERT, named DistilBERT.

Pre-training with Knowledge Distillation

Traditionally, knowledge distillation has been used to build task-specific models. This work instead applies distillation during the pre-training phase to produce a general-purpose language representation model. Using a triple loss that combines masked language modeling, distillation, and cosine-distance losses, the authors reduce the size of BERT by 40% while retaining 97% of its language understanding capabilities, and the resulting model is 60% faster at inference time.
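Written out, the objective might look like the block below. This is a sketch: the weights $\alpha_{ce}$, $\alpha_{mlm}$, $\alpha_{cos}$ are notation introduced here (the paper describes the training loss as a linear combination of the three terms), $z_i$ are logits, $T$ is the softmax temperature, $t_i$ and $s_i$ are the teacher's and student's temperature-scaled probabilities $p_i$, and $\mathbf{h}_t$, $\mathbf{h}_s$ are the teacher's and student's hidden-state vectors. $L_{mlm}$ is the standard masked language modeling cross-entropy.

```latex
\begin{aligned}
p_i &= \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)} \\
L_{ce} &= -\sum_i t_i \log s_i \\
L_{cos} &= 1 - \frac{\mathbf{h}_s \cdot \mathbf{h}_t}{\lVert \mathbf{h}_s \rVert \, \lVert \mathbf{h}_t \rVert} \\
L &= \alpha_{ce} L_{ce} + \alpha_{mlm} L_{mlm} + \alpha_{cos} L_{cos}
\end{aligned}
```

The same temperature $T$ is applied to both teacher and student during training; at inference, setting $T = 1$ recovers the standard softmax.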

Methodology

Model Architecture and Training

DistilBERT keeps the general architecture of BERT but makes several changes to reduce computation:

- The number of layers is halved.

- Token-type embeddings and the pooler are removed.

- The student is initialized from the teacher (BERT) by taking one layer out of two.
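The three changes above can be sketched on a 12-layer, 768-hidden BERT-base-style teacher. This is a minimal illustration, not the authors' code: the config keys (`num_layers`, `use_token_type_embeddings`, `use_pooler`) and helper names are hypothetical, layers are placeholder dicts rather than real weight tensors, and keeping the even-indexed layers is an assumption about which "one layer out of two" is taken.

```python
import copy
from typing import Any, Dict, List


def make_student_config(teacher_config: Dict[str, Any]) -> Dict[str, Any]:
    """Derive a DistilBERT-style student config from a BERT-style teacher config.

    The dictionary keys are hypothetical, chosen only for this illustration.
    """
    student = dict(teacher_config)  # shallow copy: hidden size etc. are kept as-is
    student["num_layers"] = teacher_config["num_layers"] // 2  # halve the depth
    student["use_token_type_embeddings"] = False  # drop token-type embeddings
    student["use_pooler"] = False  # drop the pooler
    return student


def init_student_layers(teacher_layers: List[Any]) -> List[Any]:
    """Initialize the student by taking one teacher layer out of two.

    Assumption: the even-indexed layers (0, 2, 4, ...) are kept. Deep copies
    ensure that later training of the student never modifies the teacher.
    """
    return [copy.deepcopy(layer) for layer in teacher_layers[::2]]


teacher_config = {
    "num_layers": 12,
    "hidden_size": 768,
    "use_token_type_embeddings": True,
    "use_pooler": True,
}
student_config = make_student_config(teacher_config)
teacher_layers = [{"name": f"layer_{i}"} for i in range(teacher_config["num_layers"])]
student_layers = init_student_layers(teacher_layers)
print(student_config["num_layers"], [layer["name"] for layer in student_layers])
```

With an even teacher depth, slicing with `[::2]` yields exactly half the layers, so the student's layer list matches its halved `num_layers`.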

DistilBERT was trained on the concatenation of English Wikipedia and the Toronto Book Corpus, using 8 16GB V100 GPUs for approximately 90 hours. By comparison, RoBERTa required one day of training on 1,024 32GB V100 GPUs.
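A rough way to compare the two training budgets is to count GPU-hours. The sketch below uses DistilBERT's 8 GPUs for about 90 hours and RoBERTa's reported one day on 1,024 GPUs; it is only approximate, since it ignores that the RoBERTa GPUs had 32GB of memory rather than 16GB.

```python
def gpu_hours(num_gpus: int, hours: float) -> float:
    """Total accelerator time: number of GPUs multiplied by wall-clock hours."""
    return num_gpus * hours


distilbert = gpu_hours(8, 90)  # 8 x 16GB V100 for roughly 90 hours
roberta = gpu_hours(1024, 24)  # 1,024 x 32GB V100 for roughly one day
print(distilbert, roberta, round(roberta / distilbert, 1))
```

This gives 720 versus 24,576 GPU-hours, roughly a 34x difference in raw accelerator time.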

Distillation Process

The distillation process trains a compact student model to reproduce the behavior of a larger teacher model. The training objective is a linear combination of three losses:

- Cross-Entropy Loss ($L_{ce}$): A distillation loss over the teacher's soft target probabilities.

- Masked Language Modeling Loss ($L_{mlm}$): The standard loss used in BERT's pre-training.

- Cosine Embedding Loss ($L_{cos}$): Aligns the directions of the student and teacher hidden-state vectors.
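The three losses above can be sketched for a single masked token in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names are hypothetical, the default temperature of 2.0 and the equal weights of 1.0 are placeholders, and a real setup would operate on batched tensors over the full vocabulary.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Softmax with temperature T: exp(z_i / T) / sum_j exp(z_j / T)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature: float) -> float:
    """Cross-entropy of the student against the teacher's soft targets, both at temperature T."""
    t = softmax(teacher_logits, temperature)
    s = softmax(student_logits, temperature)
    return -sum(ti * math.log(si) for ti, si in zip(t, s))


def mlm_loss(student_logits, target_index: int) -> float:
    """Standard cross-entropy on the true token at a masked position (T = 1)."""
    return -math.log(softmax(student_logits)[target_index])


def cosine_loss(student_hidden, teacher_hidden) -> float:
    """One minus the cosine similarity between student and teacher hidden states."""
    dot = sum(a * b for a, b in zip(student_hidden, teacher_hidden))
    norm_s = math.sqrt(sum(a * a for a in student_hidden))
    norm_t = math.sqrt(sum(b * b for b in teacher_hidden))
    return 1.0 - dot / (norm_s * norm_t)


def triple_loss(student_logits, teacher_logits, target_index, student_hidden,
                teacher_hidden, temperature=2.0, alpha_ce=1.0, alpha_mlm=1.0,
                alpha_cos=1.0) -> float:
    """Linear combination of the three losses; temperature and weights are placeholders."""
    return (alpha_ce * distillation_loss(student_logits, teacher_logits, temperature)
            + alpha_mlm * mlm_loss(student_logits, target_index)
            + alpha_cos * cosine_loss(student_hidden, teacher_hidden))


loss = triple_loss(
    student_logits=[1.5, 1.2, 0.3],
    teacher_logits=[2.0, 1.0, 0.1],
    target_index=0,
    student_hidden=[0.2, 0.9, -0.1],
    teacher_hidden=[0.25, 0.8, 0.0],
)
print(f"triple loss: {loss:.4f}")
```

Many implementations use a KL-divergence term in place of this cross-entropy. The two differ only by the entropy of the teacher's distribution, which is constant with respect to the student, so they produce the same gradients for the student.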

Experimental Results

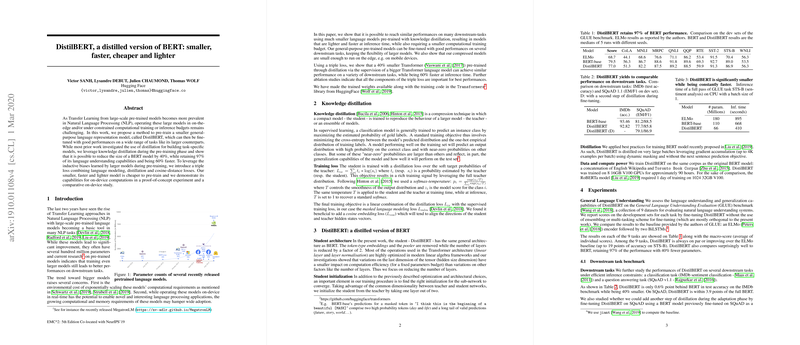

DistilBERT was evaluated on the General Language Understanding Evaluation (GLUE) benchmark, a collection of nine diverse NLP tasks. DistilBERT is always on par with or better than the ELMo baseline, and it retains 97% of BERT's performance despite having 40% fewer parameters (Table 1).
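The 97% figure is a ratio of GLUE macro-scores. The sketch below takes the macro-scores of 79.5 for BERT-base and 77.0 for DistilBERT from the paper's Table 1; the helper name `retention` is hypothetical.

```python
def retention(student_score: float, teacher_score: float) -> float:
    """Fraction of the teacher's benchmark score recovered by the student."""
    return student_score / teacher_score


# GLUE macro-scores taken from the paper's Table 1.
bert_base_glue = 79.5
distilbert_glue = 77.0
print(f"DistilBERT retains {retention(distilbert_glue, bert_base_glue):.1%} of BERT-base's GLUE score")
```

The exact ratio is about 96.9%, which rounds to the 97% quoted in the text.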

Further evaluations on downstream tasks show that DistilBERT performs close to BERT at a much lower inference cost: on IMDb sentiment classification it is only 0.6 percentage points behind BERT in test accuracy, and it also stays close to BERT on SQuAD v1.1 (Tables 2 and 3). Adding a second distillation step during fine-tuning, with a BERT model already fine-tuned on SQuAD acting as the teacher, brings DistilBERT's performance even closer to BERT's.
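The size and speed claims can be checked with simple arithmetic. The sketch below uses the figures in the paper's Table 3: 110M parameters and 668 s for BERT-base versus 66M parameters and 410 s for DistilBERT, measured as a full pass over the STS-B task on CPU with a batch size of 1.

```python
bert_params, distil_params = 110e6, 66e6  # parameter counts (Table 3)
bert_time, distil_time = 668.0, 410.0     # seconds for a full STS-B pass on CPU, batch size 1 (Table 3)

size_reduction = 1 - distil_params / bert_params  # fraction of parameters removed
speedup = bert_time / distil_time                 # throughput ratio, student vs. teacher
print(f"{size_reduction:.0%} fewer parameters, {speedup:.2f}x faster")
```

A speedup of about 1.63x is what "60% faster" refers to. Equivalently, the wall-clock time drops by roughly 39%, since 1 - 410/668 is about 0.386.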

Ablation Study

The ablation study shows that the two distillation losses account for a large share of the performance: removing the distillation cross-entropy loss or the cosine-distance loss lowers the GLUE macro-score appreciably, whereas removing the masked language modeling loss has little impact. Initializing the student with random weights instead of the teacher's layers also hurts performance (Table 4).

Implications and Future Work

The introduction of DistilBERT has significant implications for both practical applications and future research:

- On-device Computation: DistilBERT's smaller, faster architecture makes it suitable for edge applications, including mobile devices. In the authors' question-answering app running on an iPhone 7 Plus, DistilBERT was 71% faster than BERT-base, excluding the tokenization step.

- Environmental Impact: The reduced computational requirements of DistilBERT align with growing concerns about the environmental cost of large-scale model training.

Future research may explore further optimizations and applications of distillation techniques, considering other architectures and diverse NLP tasks. Additionally, integrating techniques like pruning and quantization with distillation may yield even more efficient models.
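To give a sense of why quantization matters for on-device use, the sketch below estimates the storage needed for DistilBERT's weights alone at different numeric precisions. It assumes the rounded count of 66M parameters and ignores activations, quantization scale factors, and runtime overhead, so real footprints will differ.

```python
# Bytes per parameter for common storage precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_mb(num_params: int, dtype: str) -> float:
    """Approximate storage for the weights alone, in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


for dtype in ("fp32", "fp16", "int8"):
    print(dtype, weight_memory_mb(66_000_000, dtype))
```

Under these assumptions, moving from 32-bit floats to 8-bit integers shrinks the weights roughly fourfold, from about 264 MB to about 66 MB.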

In conclusion, DistilBERT presents a compelling solution to the trade-offs between model size, performance, and efficiency, marking a significant step towards more sustainable and accessible NLP technologies. This work not only broadens access to advanced language models but also sets a precedent for future research in model compression and efficient training methodologies.