TinyBERT: Distilling BERT for Natural Language Understanding

The paper "TinyBERT: Distilling BERT for Natural Language Understanding" presents a method to reduce the size and inference time of BERT models while retaining most of their accuracy. It introduces TinyBERT, a distilled version of BERT designed for deployment in resource-constrained environments.

Key Contributions

The paper makes the following key contributions:

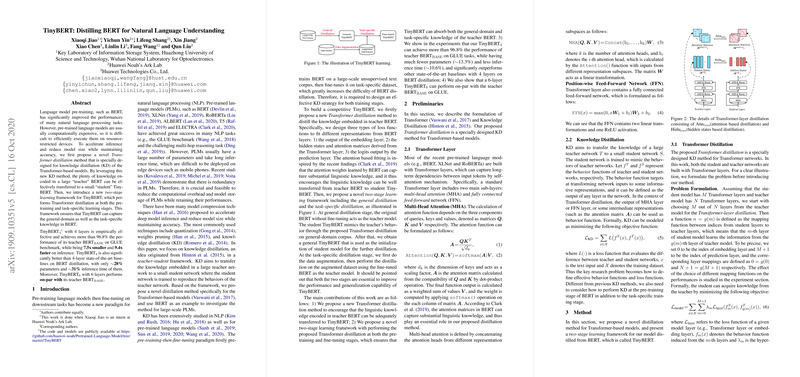

- Transformer Distillation Method: This novel method is tailored to the needs of Transformer-based models like BERT. It encompasses multiple distillation objectives that ensure the transfer of crucial knowledge from the teacher BERT model to the compact TinyBERT student model.

- Two-stage Learning Framework: The framework consists of:

  - General Distillation: Applied during the pre-training phase to capture general-domain knowledge. This phase ensures that TinyBERT inherits the linguistic generalization capability of the base BERT.

  - Task-specific Distillation: Conducted during the fine-tuning phase. It further refines TinyBERT by learning task-specific knowledge from the fine-tuned base BERT.
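The summary above names "multiple distillation objectives" without listing them. As a minimal sketch, the following assumes two of the layer-wise terms described in the TinyBERT paper: a mean-squared error between projected student hidden states and teacher hidden states, and a mean-squared error between attention score matrices. The function names (`layer_map`, `transformer_layer_loss`), the random tensors, the 12 attention heads, and the uniform layer mapping are illustrative assumptions, not the authors' code.

```python
import numpy as np


def layer_map(m: int, teacher_layers: int = 12, student_layers: int = 4) -> int:
    """Assumed uniform mapping: student layer m (1-based) learns from teacher layer g(m)."""
    return m * (teacher_layers // student_layers)


def mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean-squared error between two arrays of the same shape."""
    return float(np.mean((a - b) ** 2))


def transformer_layer_loss(student_hidden, teacher_hidden,
                           student_attn, teacher_attn, proj) -> float:
    """Hidden-state loss plus attention loss for one (student, teacher) layer pair.

    proj maps the student hidden size to the teacher hidden size so that
    the two hidden-state matrices can be compared directly.
    """
    hidden_loss = mse(student_hidden @ proj, teacher_hidden)
    attn_loss = mse(student_attn, teacher_attn)
    return hidden_loss + attn_loss


# Toy tensors standing in for real model outputs (hypothetical data).
rng = np.random.default_rng(0)
seq_len, heads, d_student, d_teacher = 8, 12, 312, 768
proj = rng.normal(scale=0.02, size=(d_student, d_teacher))  # learned in practice

teacher_hidden = {n: rng.normal(size=(seq_len, d_teacher)) for n in range(1, 13)}
teacher_attn = {n: rng.normal(size=(heads, seq_len, seq_len)) for n in range(1, 13)}
student_hidden = {m: rng.normal(size=(seq_len, d_student)) for m in range(1, 5)}
student_attn = {m: rng.normal(size=(heads, seq_len, seq_len)) for m in range(1, 5)}

# Sum the layer-wise losses over all student layers.
total_loss = sum(
    transformer_layer_loss(
        student_hidden[m], teacher_hidden[layer_map(m)],
        student_attn[m], teacher_attn[layer_map(m)], proj,
    )
    for m in range(1, 5)
)
print(f"toy distillation loss: {total_loss:.4f}")
```

In a real training loop the projection matrix would be a trainable parameter and the loss would be minimized with respect to the student weights. The paper also describes embedding-layer and prediction-layer terms, which are omitted here for brevity.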

Experimental Results

Quantitative Outcomes

- Model Efficiency: The 4-layer TinyBERT model achieves approximately 96.8% of the performance of its teacher BERT on the GLUE benchmark, while being 7.5 times smaller and 9.4 times faster at inference.

- Comparative Performance: TinyBERT outperforms other 4-layer distilled models such as BERT-PKD and DistilBERT while using roughly 28% of their parameters and 31% of their inference time.

Model Architecture and Settings

- Student Model: TinyBERT has a hidden size of 312 and a feed-forward size of 1200.

- Teacher Model: BERT-Base, with 12 layers and a hidden size of 768, serves as the teacher model.

- Mapping Function: The layer mapping function adopted is g(m) = 3m, so each of the 4 student layers learns from every third layer of the 12-layer teacher.
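As a rough sanity check on the sizes listed above, the parameter counts of the student and teacher can be estimated from their dimensions. This sketch assumes a standard BERT layout that the summary does not spell out: a vocabulary of 30,522 tokens, 512 positions, 2 segment types, a feed-forward size of 3072 for the teacher, and a pooler layer. The helper `bert_param_count` is a hypothetical name introduced for illustration.

```python
def bert_param_count(hidden: int, ffn: int, layers: int,
                     vocab: int = 30522, max_pos: int = 512,
                     type_vocab: int = 2) -> int:
    """Estimate the parameter count of a BERT-style encoder (assumed layout)."""
    # Token, position, and segment embeddings plus one LayerNorm (scale + bias).
    embeddings = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    # Query, key, value, and output projections with biases, plus LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + 2 * hidden
    # Two feed-forward projections with biases, plus LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + 2 * hidden
    # Pooler: one dense layer applied to the first token.
    pooler = hidden * hidden + hidden
    return embeddings + layers * (attention + feed_forward) + pooler


tiny = bert_param_count(hidden=312, ffn=1200, layers=4)
base = bert_param_count(hidden=768, ffn=3072, layers=12)
print(f"TinyBERT (4 layers): {tiny / 1e6:.2f}M parameters")
print(f"BERT-Base (12 layers): {base / 1e6:.2f}M parameters")
print(f"size ratio: {base / tiny:.2f}x")
```

Under these assumptions the estimate comes to about 14.35M parameters for the student and 109.48M for the teacher, a ratio near 7.6, which is in line with the reported 7.5 times reduction.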

Analysis and Implications

Importance of Learning Procedures

The paper's ablation analysis demonstrates the necessity of both general and task-specific distillation procedures:

- General Distillation (GD): Provides a stable initialization by transferring general-domain information.

- Task-specific Distillation (TD): Further optimizes TinyBERT on specific tasks using augmented datasets.

Theoretical and Practical Implications

Theoretically, the proposed two-stage learning framework captures both general and task-specific knowledge, which yields a small model that remains accurate. Practically, TinyBERT's substantial reductions in size and inference time make it suitable for deployment on edge devices, such as mobile phones.

Future Directions

The research opens several pathways for future developments:

- Distillation from Larger Models: Extending the distillation techniques to wider or deeper teacher models (e.g., BERT-Large).

- Hybrid Compression Techniques: Combining knowledge distillation with other compression methods like quantization and pruning to achieve even more lightweight models suitable for diverse applications.

In conclusion, the paper addresses the challenge of compressing BERT models without significant performance degradation, which makes them usable in resource-constrained environments. It provides a foundation on which future model compression techniques can build.