- The paper shows that pretrained language models such as BERT, used without fine-tuning, implicitly store a substantial amount of factual knowledge that can be recovered with cloze-style queries.

- It uses the LAMA probe to convert triples and question-answer pairs into cloze statements and scores retrieval with precision at rank k (P@k).

- The analysis finds good performance across many relation types, with certain N-to-M relations remaining difficult, and points toward hybrid systems that combine LMs with structured KBs.

Language Models as Knowledge Bases?

Introduction

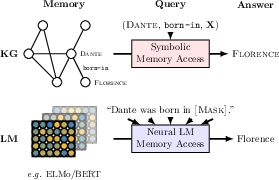

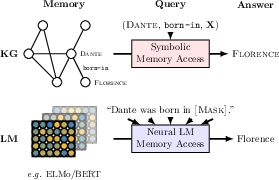

The paper “Language Models as Knowledge Bases?” explores whether contemporary language models (LMs), particularly BERT, can serve as knowledge repositories without any task-specific supervision. These models, traditionally optimized for linguistic tasks, are assessed here for their ability to implicitly store and retrieve factual information. Compared with structured knowledge bases (KBs), LMs offer practical benefits: they require neither extensive schema engineering nor manual annotation.

Figure 1: Querying knowledge bases (KB) and language models (LM) for factual knowledge.

Methodology

The study uses the LAMA (LAnguage Model Analysis) probe, designed to evaluate the factual and commonsense knowledge contained in these models. Facts, given as triples or question-answer pairs, are converted into cloze statements, and each LM is queried by asking it to fill in the masked token.
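The conversion from a triple to a cloze statement can be sketched as below. This is a minimal illustration, not the paper's code: the relation names, the template strings, and the `triple_to_cloze` helper are all assumptions made for the example, and the `[MASK]` string follows BERT's convention.

```python
from typing import Dict, Tuple

MASK = "[MASK]"  # BERT-style mask token; other models use different symbols

# Hypothetical relation templates (assumed for illustration).
# "[X]" marks the subject slot and "[Y]" the object slot.
TEMPLATES: Dict[str, str] = {
    "place_of_birth": "[X] was born in [Y].",
    "capital_of": "[X] is the capital of [Y].",
}


def triple_to_cloze(subject: str, relation: str, obj: str) -> Tuple[str, str]:
    """Return (cloze statement, gold answer) for a (subject, relation, object) triple."""
    template = TEMPLATES[relation]
    # Fill in the subject and hide the object behind the mask token.
    statement = template.replace("[X]", subject).replace("[Y]", MASK)
    return statement, obj


statement, answer = triple_to_cloze("Dante", "place_of_birth", "Florence")
print(statement)  # Dante was born in [MASK].
print(answer)     # Florence
```

A masked LM would then be asked to rank its vocabulary for the `[MASK]` position, and the rank of the gold answer determines the score.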

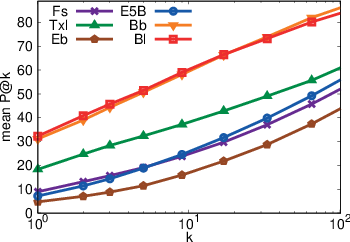

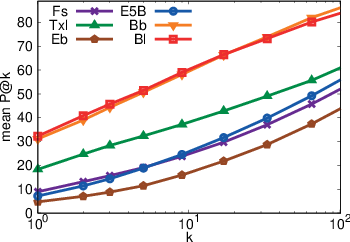

The evaluation spans several knowledge sources: T-REx for Wikidata triples, Google-RE for facts manually extracted from Wikipedia, ConceptNet for commonsense knowledge, and SQuAD for question-answer pairs. Performance is measured primarily with precision at rank k (P@k), a rank-based metric that allows retrieval accuracy to be compared against traditional baselines such as supervised relation extraction (RE) systems.
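As a rough sketch of the metric: for a single fact, P@k is 1 if the gold object appears among the model's top k predictions and 0 otherwise, and the reported score is a mean over facts. The function names and the toy predictions below are assumptions for illustration; how scores are aggregated across relations is not shown here.

```python
from typing import List, Sequence


def precision_at_k(ranked: Sequence[str], gold: str, k: int) -> float:
    """1.0 if the gold object is among the top-k ranked predictions, else 0.0."""
    return 1.0 if gold in ranked[:k] else 0.0


def mean_precision_at_k(
    predictions: List[Sequence[str]], golds: List[str], k: int
) -> float:
    """Average per-fact P@k over a list of facts."""
    if len(predictions) != len(golds):
        raise ValueError("predictions and golds must have the same length")
    if not golds:
        raise ValueError("need at least one fact")
    total = sum(precision_at_k(p, g, k) for p, g in zip(predictions, golds))
    return total / len(golds)


# Toy ranked outputs for two cloze queries (made-up data).
predictions = [
    ["Florence", "Rome", "Paris"],   # gold "Florence" is at rank 1
    ["Paris", "Rome", "Florence"],   # gold "Rome" is at rank 2
]
golds = ["Florence", "Rome"]

print(mean_precision_at_k(predictions, golds, k=1))  # 0.5
print(mean_precision_at_k(predictions, golds, k=2))  # 1.0
```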

Analysis and Results

The results indicate that BERT-large retrieves relational facts with precision comparable to that of a traditional knowledge extraction system aided by oracle entity linking. BERT performs well across many relation types, though certain N-to-M relations remain challenging.

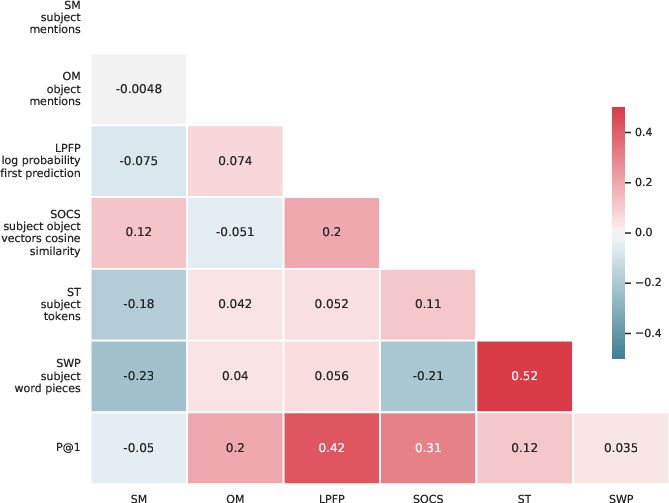

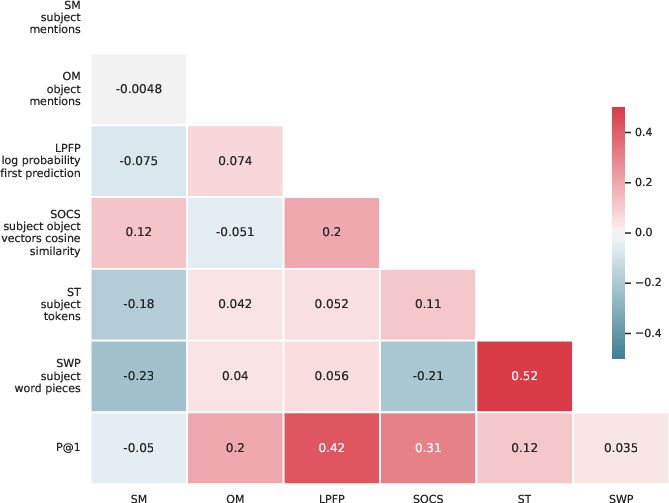

Further analysis reveals dependencies between model predictions and factors such as the frequency of objects within the training set, subject-object similarity, and prediction confidence. These observations underscore the latent knowledge storage capabilities of LMs.

Figure 2: Mean P@k curve for T-REx varying k. Base-10 log scale for X axis.

Figure 3: Pearson correlation coefficient for the P@1 of the BERT-large model on T-REx and a set of metrics.
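To make the correlation analysis concrete, the sketch below computes a Pearson correlation coefficient between a per-fact feature and per-fact P@1. The `pearson` helper and all numbers are assumptions for illustration (the data are made up, not taken from the paper); in practice a library routine such as `scipy.stats.pearsonr` would serve the same purpose.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Made-up example: log frequency of the gold object in the training data
# versus whether the model ranked that object first (P@1 per fact).
log_object_frequency = [1.0, 2.5, 3.0, 4.2, 5.1, 6.0]
p_at_1 = [0.0, 0.0, 1.0, 0.0, 1.0, 1.0]

r = pearson(log_object_frequency, p_at_1)
print(f"Pearson r = {r:.3f}")
```

A positive coefficient in such an analysis would suggest that facts with more frequent objects tend to be retrieved more reliably; it does not by itself establish a causal link.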

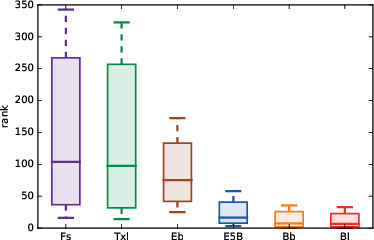

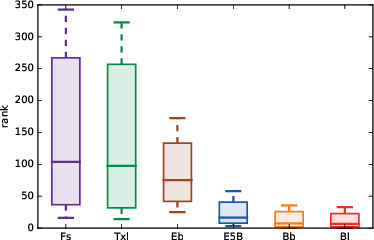

The analysis also examines how robust the models are to variations in the phrasing of a query. The results (Figure 4) suggest that BERT and ELMo 5.5B are the least sensitive to query framing. This may reflect richer contextual representations, but these models are also the most likely to have seen the query sentences during training.

Comparative Evaluation

The study compares the LMs' retrieval performance with several existing systems, including a frequency-based baseline and DrQA. Although it is not fine-tuned for the task, BERT achieves competitive scores, particularly in open-domain question answering, where it approaches the performance of a system trained with supervised data.
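One plausible reading of a frequency-based baseline is sketched below: for each relation, candidate objects are ranked by how often they occur as the object of that relation in a set of triples, ignoring the subject entirely. This is an assumption-laden illustration rather than the paper's implementation; the function names and toy triples are hypothetical.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Iterable, List, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def build_object_counts(triples: Iterable[Triple]) -> DefaultDict[str, Counter]:
    """Count how often each object appears for each relation."""
    counts: DefaultDict[str, Counter] = defaultdict(Counter)
    for _subject, relation, obj in triples:
        counts[relation][obj] += 1
    return counts


def rank_objects(counts: DefaultDict[str, Counter], relation: str, k: int) -> List[str]:
    """Return the k most frequent objects for a relation (subject is ignored)."""
    return [obj for obj, _count in counts[relation].most_common(k)]


# Toy triples (made-up data).
triples = [
    ("Victor Hugo", "place_of_birth", "Paris"),
    ("Edith Piaf", "place_of_birth", "Paris"),
    ("Dante", "place_of_birth", "Florence"),
]

counts = build_object_counts(triples)
print(rank_objects(counts, "place_of_birth", k=1))  # ['Paris']
```

Such a baseline gives the same answer for every subject of a relation, which makes it a useful lower bound for judging whether an LM uses the subject at all.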

Figure 4: Average rank distribution for 10 different mentions of 100 random facts per relation in T-REx. ELMo 5.5B and both variants of BERT are least sensitive to the framing of the query but also are the most likely to have seen the query sentence during training.

Discussion and Implications

The paper highlights the potential of language models as repositories of general and domain-specific knowledge. BERT's strong ranking accuracy without fine-tuning supports the use of such models in applications that need knowledge representation without the rigidity of traditional KBs. However, limitations remain in handling sparse relations and in the variance of predictions across different cloze templates.

Future work could refine fine-tuning strategies to improve recall for less frequently encountered patterns. Moreover, integrating structured knowledge extraction with LM representations could yield hybrid systems that combine the strengths of both paradigms.

Conclusion

In conclusion, the paper demonstrates that language models, particularly BERT, can function to a meaningful degree as knowledge bases, challenging conventional approaches to knowledge extraction and retrieval. By shifting the focus towards knowledge acquired without supervision, it shows that LMs have promising applications beyond traditional linguistic tasks and motivates further research in this area.