Examining the Extraction of Knowledge From LLMs via Automated Prompt Generation

The paper "How Can We Know What Language Models Know?" by Zhengbao Jiang et al. addresses the challenge of probing the knowledge embedded in language models (LMs) by improving how prompts are generated. Manually crafted prompts are limited in their capacity to assess the knowledge contained in LMs: a model may know a fact yet fail to retrieve it under one particular phrasing, so manual prompts provide only a lower bound on what LMs can actually recall. The paper proposes automated methods for generating high-quality prompts, which both expand the diversity of prompts and increase the accuracy of LMs on fact retrieval tasks.

Key Contributions

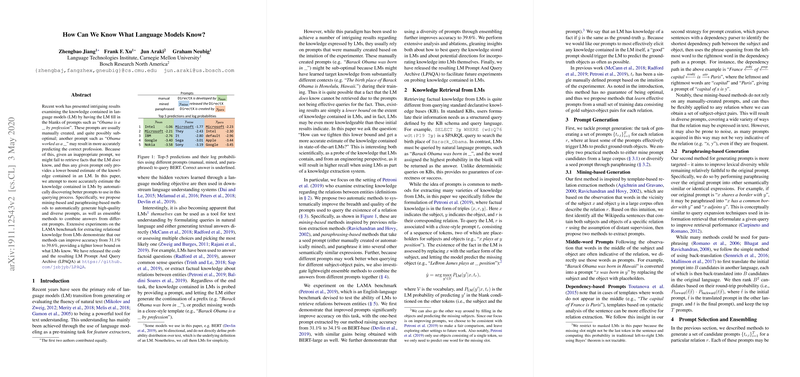

- Automated Prompt Generation: The paper introduces two distinct methods for prompt generation, mining-based and paraphrasing-based:

  - Mining-based Generation: Extracts prompt candidates from large corpora using techniques inspired by relation extraction. Prompts are generated by identifying words in the middle of subject-object pairs or by using dependency paths.

  - Paraphrasing-based Generation: Produces semantically similar prompts by paraphrasing existing ones through back-translation methods.

- Prompt Combination via Ensembling: The paper explores methods to combine multiple prompts to query LMs:

  - Rank-based Ensembling: Prompts are ranked by their accuracy on training data, and the log probabilities from the top-ranked prompts are averaged.

  - Optimized Ensembling: Weights the prompts with a softmax distribution whose parameters are optimized on training data to maximize the probability of recalling the correct facts.

- Empirical Evaluation: Experiments on the LAMA benchmark, specifically its T-REx subset, show accuracy improving from 31.1% to 39.6% for BERT-base and from 32.3% to 43.9% for BERT-large when optimized ensembling is used. The paper also evaluates the framework on other pre-trained models such as ERNIE and KnowBert, with consistent improvements.
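The middle-word variant of mining-based generation can be illustrated with a minimal sketch. It assumes plain string matching on toy sentences, whereas the paper mines a large corpus; the function name `mine_middle_word_prompts` and the example data are hypothetical and are not taken from the authors' code.

```python
from collections import Counter


def mine_middle_word_prompts(sentences, pairs):
    """Count templates built from the words between a subject and an object.

    Hypothetical helper: uses naive substring search rather than the
    corpus-scale pipeline described in the paper.
    """
    counts = Counter()
    for sentence in sentences:
        for subj, obj in pairs:
            s, o = sentence.find(subj), sentence.find(obj)
            # Skip sentences missing either entity, or where the object
            # does not come after the end of the subject.
            if s == -1 or o == -1 or s + len(subj) > o:
                continue
            middle = sentence[s + len(subj):o].strip()
            if middle:
                # [X] marks the subject slot, [Y] the object slot.
                counts[f"[X] {middle} [Y]"] += 1
    return counts


# Toy corpus and subject-object pairs for a "place of birth" relation.
sentences = [
    "Barack Obama was born in Hawaii.",
    "Marie Curie was born in Warsaw and later moved to Paris.",
    "Hawaii is the birthplace of Barack Obama.",
]
pairs = [("Barack Obama", "Hawaii"), ("Marie Curie", "Warsaw")]

prompts = mine_middle_word_prompts(sentences, pairs)
print(prompts.most_common())
```

Counting how often each template occurs gives a simple way to prefer frequent candidates; the third sentence is skipped because the object precedes the subject, a case that the dependency-path variant is better suited to handle.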

Numerical Results and Findings

- Individual Prompt Improvement: Using the best automatically generated prompt for each relation improved prediction accuracy to 34.1% for BERT-base and 39.4% for BERT-large.

- Ensemble Performance: Optimized ensemble methods yielded substantial gains, achieving up to 39.6% accuracy with BERT-base and 43.9% with BERT-large.

- Cross-Model Consistency: Ensembling methods provided robust improvements across different LMs, underscoring the generality and effectiveness of the proposed approach.
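The two ensembling strategies differ only in how the per-prompt log probabilities are weighted, which the following sketch makes concrete. It is an illustration under stated assumptions: the probabilities are made-up toy values, the parameters `theta` are chosen by hand rather than learned, and the names `softmax` and `ensemble_scores` are hypothetical helpers, not functions from the paper's code.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_scores(log_probs, weights):
    """Weighted sum of per-prompt log probabilities for each candidate.

    log_probs[i][c] is the log probability the LM assigns to candidate c
    when queried with prompt i.
    """
    candidates = log_probs[0].keys()
    return {
        c: sum(w * lp[c] for w, lp in zip(weights, log_probs))
        for c in candidates
    }


# Toy (assumed) LM outputs for three prompts and two candidate objects.
log_probs = [
    {"Hawaii": math.log(0.6), "Kenya": math.log(0.4)},
    {"Hawaii": math.log(0.3), "Kenya": math.log(0.7)},
    {"Hawaii": math.log(0.8), "Kenya": math.log(0.2)},
]

# Rank-based ensembling: uniform average over the selected prompts.
uniform = [1.0 / len(log_probs)] * len(log_probs)
rank_scores = ensemble_scores(log_probs, uniform)

# Optimized ensembling: softmax weights over per-prompt parameters.
# These theta values are hand-picked here; in practice they would be
# fit on training data.
theta = [1.0, -1.0, 2.0]
opt_weights = softmax(theta)
opt_scores = ensemble_scores(log_probs, opt_weights)

best = max(opt_scores, key=opt_scores.get)
print(rank_scores, opt_scores, best)
```

Because the softmax weights sum to one, the optimized ensemble reduces to the uniform average when all parameters are equal, so it can only match or improve on the rank-based variant on the training data it is fit to.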

Analysis and Implications

Practical Implications

- Enhanced Knowledge Extraction: By utilizing a diverse set of optimized prompts, the ability of LMs to recall factual knowledge is significantly improved. This has practical implications for deploying LMs in knowledge-intensive applications such as question answering and information retrieval.

- Robust Query Mechanisms: The research highlights the sensitivity of LMs to prompt phrasing. Developing robust mechanisms that can adaptively rephrase or paraphrase queries could yield more reliable outputs in real-world applications.

Theoretical Implications

- Model Understanding: The paper shows how strongly LM predictions depend on the specific formulation of a query. This could inform further research into model architectures that handle varied inputs uniformly.

- Future Developments in AI: The emphasis on prompt optimization might foster advances in self-improving models that learn to generate and optimize their own prompts, leading to more autonomous AI systems.

Future Directions

- Prompt Robustness: Future work could explore developing models that remain consistent irrespective of slight changes in query phrasing, enhancing the robustness of LMs.

- Integration with External Knowledge Bases: Combining the prompt optimization techniques with LMs that integrate external knowledge bases might further boost performance.

- Optimizing for Macro-Averaged Accuracy: Research can also focus on improving macro-averaged accuracy, which weights each unique answer equally rather than each query, to ensure LMs can accurately retrieve a more diverse set of unique facts.
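The difference between micro- and macro-averaged accuracy can be shown with a small worked example. This sketch assumes that macro-averaging groups queries by their gold answer and averages the per-answer accuracies; the data are invented for illustration and the function names are hypothetical.

```python
from collections import defaultdict


def micro_accuracy(examples):
    """Fraction of all (gold, predicted) pairs that match."""
    return sum(pred == gold for gold, pred in examples) / len(examples)


def macro_accuracy(examples):
    """Average of per-answer accuracies, grouping queries by gold answer."""
    hits_by_gold = defaultdict(list)
    for gold, pred in examples:
        hits_by_gold[gold].append(pred == gold)
    per_answer = [sum(hits) / len(hits) for hits in hits_by_gold.values()]
    return sum(per_answer) / len(per_answer)


# Invented data: a model that always predicts the majority answer.
examples = (
    [("English", "English")] * 8
    + [("French", "English"), ("Hindi", "English")]
)

micro = micro_accuracy(examples)  # 8 correct out of 10 queries
macro = macro_accuracy(examples)  # mean of 1.0, 0.0, 0.0 over 3 answers
print(f"micro={micro:.3f} macro={macro:.3f}")
```

A model that always guesses the most frequent answer scores well on the micro average but poorly on the macro average, which is why the latter is a better indicator of how many distinct facts are actually retrieved.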

In conclusion, this paper provides a compelling answer to how better prompts can tighten the lower bound on the knowledge that can be extracted from LMs. The combination of mining-based and paraphrasing-based generation methods with ensemble techniques significantly improves performance on fact retrieval tasks. This is an important step towards more accurate and reliable AI systems that can make effective use of the knowledge they encode.