Mitigating Gender Bias in Natural Language Processing: A Literature Review

In recent discourse on ethical AI, gender bias in NLP systems has gained prominence. As NLP models are deployed in a widening range of applications, recognizing their potential to perpetuate societal biases is crucial. This paper provides a comprehensive review of current methods for identifying and mitigating gender bias in NLP, focusing on representation bias and the efficacy of existing debiasing techniques.

Key Highlights and Findings

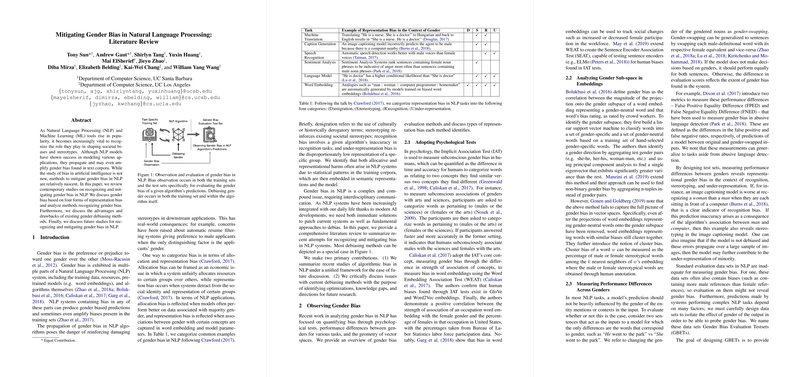

The paper classifies gender bias in NLP systems into two main types: allocation bias and representation bias. The authors concentrate on representation bias and examine several of its forms, such as denigration, stereotyping, recognition, and under-representation. Each form manifests differently across NLP tasks such as machine translation, caption generation, and sentiment analysis.

The paper reviews methods such as the Word Embedding Association Test (WEAT) and the Sentence Encoder Association Test (SEAT) for detecting biases embedded in word and sentence representations. Both tests measure how strongly sets of target terms associate with one set of attribute terms relative to another, and they have shown that learned embeddings reproduce gender stereotypes documented in human psychology.
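
The association measurement behind WEAT can be sketched as follows. This is a minimal illustration, not the reviewed paper's code: the toy two-dimensional vectors stand in for real embeddings, the function names are hypothetical, and the choice of the sample standard deviation in the denominator is an assumption (implementations differ on this point).

```python
import math
from statistics import mean, stdev


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(w, attrs_a, attrs_b):
    """How much closer w is to attribute set A than to attribute set B."""
    return mean(cosine(w, a) for a in attrs_a) - mean(cosine(w, b) for b in attrs_b)


def weat_effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association between the two target sets,
    normalised by the spread of associations over all target words.
    Assumption: sample standard deviation (statistics.stdev)."""
    assoc_x = [association(x, attrs_a, attrs_b) for x in targets_x]
    assoc_y = [association(y, attrs_a, attrs_b) for y in targets_y]
    return (mean(assoc_x) - mean(assoc_y)) / stdev(assoc_x + assoc_y)


# Toy vectors (hypothetical): X leans towards A, Y leans towards B.
targets_x = [[1.0, 0.1], [0.9, 0.2]]   # e.g. career-related words
targets_y = [[0.1, 1.0], [0.2, 0.9]]   # e.g. family-related words
attrs_a = [[1.0, 0.0]]                 # e.g. male attribute words
attrs_b = [[0.0, 1.0]]                 # e.g. female attribute words

effect = weat_effect_size(targets_x, targets_y, attrs_a, attrs_b)
print(f"WEAT effect size: {effect:.3f}")  # positive: X associates with A, Y with B
```

An effect size near zero would indicate no differential association between the target sets and the attribute sets; the sign shows which pairing is stronger.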

Debiasing Techniques

- Data Manipulation: Data augmentation using gender-swapping emerges as a pragmatic approach to mitigate biases. This method entails generating parallel datasets with reversed gender references to balance biased training corpora. Although effective across tasks such as coreference resolution and sentiment analysis, the approach has its limitations, such as increased training time and potential for generating nonsensical sentences.

- Embedding Adjustment: Techniques like gender subspace removal in word embeddings, and learning gender-neutral embeddings, have shown success in debiasing word representations. However, these methods have mainly been demonstrated on Euclidean embeddings of English text, and they require adaptation for languages with more complex gender constructs.

- Algorithmic Adjustments: The paper describes methods that constrain predictions at inference time so that the model does not amplify bias present in its training data. Adversarial learning is also explored as a mechanism to obscure the prediction model’s access to gender information, offering a way to attenuate bias in real-time applications.
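
Two of the techniques above, gender-swapping augmentation and gender subspace removal, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the reviewed paper's implementation: the word-pair dictionary, the toy vectors, and all function names are hypothetical, and the gender direction is taken from a single word pair rather than estimated from many pairs as published methods typically do.

```python
import math
import re

# Hypothetical swap dictionary. "her" is ambiguous (it can mirror "his" or "him"),
# which is one way naive swapping produces the odd sentences noted above.
SWAP_PAIRS = {
    "he": "she", "she": "he",
    "his": "her", "her": "his",
    "man": "woman", "woman": "man",
    "father": "mother", "mother": "father",
}


def gender_swap(sentence):
    """Replace each gendered word with its counterpart, preserving capitalisation."""
    def replace(match):
        word = match.group(0)
        swapped = SWAP_PAIRS.get(word.lower())
        if swapped is None:
            return word
        return swapped.capitalize() if word[0].isupper() else swapped
    return re.sub(r"[A-Za-z]+", replace, sentence)


def augment(corpus):
    """Return the original sentences plus their gender-swapped copies."""
    return corpus + [gender_swap(s) for s in corpus]


def remove_gender_component(vec, gender_dir):
    """Project vec onto the subspace orthogonal to gender_dir."""
    norm = math.sqrt(sum(g * g for g in gender_dir))
    unit = [g / norm for g in gender_dir]
    proj = sum(v * u for v, u in zip(vec, unit))
    return [v - proj * u for v, u in zip(vec, unit)]


# Toy three-dimensional embeddings (hypothetical values).
he = [0.8, 0.3, 0.1]
she = [-0.8, 0.3, 0.1]
doctor = [0.4, 0.7, 0.2]

# Assumption: a single difference vector approximates the gender direction.
gender_dir = [a - b for a, b in zip(he, she)]
doctor_debiased = remove_gender_component(doctor, gender_dir)

print(augment(["He thanked his mother."]))
print(doctor_debiased)
```

The doubled corpus from `augment` illustrates the training-time cost of augmentation, and the projection only neutralises the one direction it is given, so bias encoded along other directions is left in place.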

Implications and Future Directions

The findings underscore the critical need for standardized metrics to evaluate gender bias across NLP applications, given the modular nature of debiasing efforts. Further interdisciplinary research that integrates insights from the social sciences may improve understanding of gender biases and help mitigate them. Future work could explore debiasing in multilingual settings and account for non-binary gender, moving beyond the binary gender frameworks currently prevalent.

While this review illustrates the nascent stage of gender bias mitigation in NLP, it sets the stage for ongoing discussions and developments in creating ethically aware AI systems. As these methodologies evolve, they hold promise in shaping NLP technologies that are equitable and inclusive in their linguistic representations and applications.