Analyzing Gender Bias Mitigation in Neural NLP: Counterfactual Data Augmentation vs. Word Embedding Debiasing

The paper "Gender Bias in Neural Natural Language Processing" examines gender bias in neural NLP systems and evaluates two mitigation strategies: Counterfactual Data Augmentation (CDA) and Word Embedding Debiasing (WED). It shows how such bias manifests in two tasks, coreference resolution and language modeling, and systematically compares how well each method reduces it.

Key Contributions

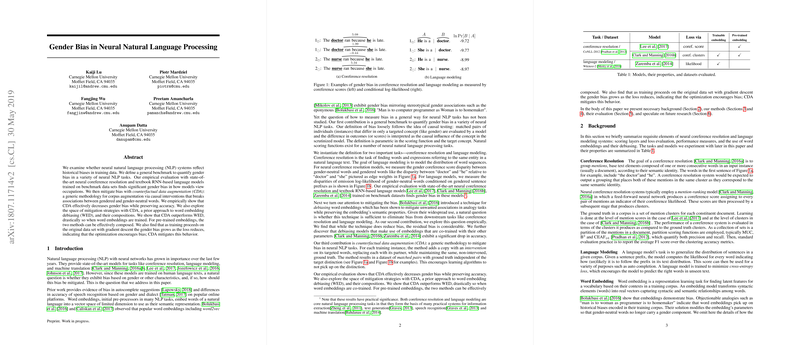

- Definition and Quantification of Gender Bias: The paper first establishes a framework for measuring gender bias in NLP tasks. The authors define bias through causal testing: they compare a model's outputs on matched pairs of sentences that differ only in gender-specific terms (for example, "he" versus "she"). Because nothing else changes within a pair, any systematic difference in output is attributable to gender, which yields a quantitative measure of bias in downstream NLP tasks.

- Empirical Evaluation of Gender Bias: Using state-of-the-art models for coreference resolution and language modeling, the authors run empirical evaluations on benchmark datasets. The results confirm significant gender bias, most visibly in how the models associate occupations with gendered pronouns. These measurements serve as the baseline against which the mitigation techniques are evaluated.

- Mitigation Strategies and Their Efficacy: The paper introduces Counterfactual Data Augmentation, which expands the training corpus with copies of its sentences in which gender-specific terms are swapped, so that each context appears with both genders and the model has less opportunity to learn gender associations from the data. CDA is compared against WED, a technique that removes the gender component from word vectors by projecting the embeddings of gender-neutral words off an identified gender direction.

- Comparative Analysis of Mitigation Techniques: CDA outperforms WED, most clearly when the embeddings are co-trained with the model's other parameters. For pre-trained embeddings, combining CDA with WED is effective. Across tasks, CDA substantially reduces gender bias while preserving predictive accuracy, which compares favorably with WED, particularly in the setting where pre-trained embeddings are debiased.

- Bias Accumulation During Training: By tracking bias over the course of training, the paper observes that gender bias grows as gradient descent optimizes the model's parameters on the original dataset. Training with CDA counteracts this, keeping the optimization process from amplifying bias further.
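The matched-pair (causal testing) bias measure described under the first contribution can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the word-pair list, the `toy_score` stand-in for a model, and the helper names are hypothetical, and the sketch aggregates with the mean absolute output difference so that biases in opposite directions do not cancel. The paper's exact scoring and aggregation may differ.

```python
from typing import Callable, Dict, List

# Hypothetical gendered word pairs; a real study would use a larger curated list.
PAIRS = [("he", "she"), ("himself", "herself"), ("man", "woman"), ("father", "mother")]
SWAP: Dict[str, str] = {}
for male, female in PAIRS:
    SWAP[male] = female
    SWAP[female] = male


def gender_swap(tokens: List[str]) -> List[str]:
    """Build the matched counterpart: the same sentence with gendered terms exchanged."""
    return [SWAP.get(tok, tok) for tok in tokens]


def causal_bias(score: Callable[[List[str]], float], sentences: List[List[str]]) -> float:
    """Mean absolute change in a scalar model output when only gender is swapped.

    `score` stands in for any scalar output (e.g. a coreference score or a
    language-model log-likelihood). 0.0 means every matched pair scores identically.
    """
    diffs = [abs(score(s) - score(gender_swap(s))) for s in sentences]
    return sum(diffs) / len(diffs)


def toy_score(tokens: List[str]) -> float:
    """Hypothetical stereotyped 'model': rewards he+doctor and she+nurse co-occurrence."""
    s = 0.0
    if "doctor" in tokens and "he" in tokens:
        s += 1.0
    if "nurse" in tokens and "she" in tokens:
        s += 1.0
    return s


data = [
    ["the", "doctor", "said", "he", "was", "late"],
    ["the", "nurse", "said", "she", "was", "late"],
    ["the", "teacher", "said", "she", "was", "late"],
]
print(causal_bias(toy_score, data))
```

A scorer that ignores gender (for instance, one returning a constant) gets a bias of 0.0, while the stereotyped toy scorer is penalized on exactly the sentences where swapping the pronoun changes its output.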
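Counterfactual Data Augmentation, as summarized in the mitigation bullet, amounts to appending a gender-swapped copy of each training sentence that contains a gendered word. The sketch below is a minimal version under stated assumptions: sentences are already tokenized, the swap list and helper names are hypothetical, ambiguous words such as "her" (which maps to "him" or "his" depending on part of speech) are left out, and the paper's handling of names and other special cases is not reproduced.

```python
from typing import Dict, List, Tuple

# Hypothetical, minimal bidirectional swap list. Ambiguous words such as "her"
# would need part-of-speech information and are deliberately omitted.
PAIRS: List[Tuple[str, str]] = [("he", "she"), ("man", "woman"), ("king", "queen"), ("father", "mother")]
SWAP: Dict[str, str] = {a: b for a, b in PAIRS}
SWAP.update({b: a for a, b in PAIRS})


def intervene(sentence: List[str]) -> List[str]:
    """Swap each gendered token for its counterpart, preserving an initial capital."""
    out = []
    for tok in sentence:
        repl = SWAP.get(tok.lower())
        if repl is None:
            out.append(tok)
        elif tok[0].isupper():
            out.append(repl.capitalize())
        else:
            out.append(repl)
    return out


def counterfactual_augment(corpus: List[List[str]]) -> List[List[str]]:
    """Return the original corpus plus a swapped copy of every sentence that changes."""
    augmented = list(corpus)
    for sent in corpus:
        swapped = intervene(sent)
        if swapped != sent:  # only sentences with gendered words get a counterfactual
            augmented.append(swapped)
    return augmented


corpus = [["He", "is", "a", "doctor"], ["The", "queen", "spoke"], ["It", "rained"]]
for sent in counterfactual_augment(corpus):
    print(" ".join(sent))
```

Sentences without gendered words (here, "It rained") are not duplicated, so the corpus grows only where a counterfactual exists. Because every original sentence is kept, a model trained on the result sees both the original and the swapped version of each gendered context.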
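Word Embedding Debiasing in the style of Bolukbasi et al.'s hard debiasing can be illustrated with its "neutralize" step: estimate a gender direction from definitional word pairs, then project that direction out of gender-neutral words. This NumPy sketch is a simplification under stated assumptions: the three-dimensional toy vectors and the choice of neutral words are made up, the direction is a normalized mean of pair differences rather than the principal component Bolukbasi et al. use, and their "equalize" step is omitted.

```python
from typing import Dict, List, Tuple

import numpy as np


def gender_direction(emb: Dict[str, np.ndarray], pairs: List[Tuple[str, str]]) -> np.ndarray:
    """Unit vector along the mean (male - female) difference over definitional pairs.

    Simplification: Bolukbasi et al. take the top principal component instead.
    """
    diffs = np.stack([emb[m] - emb[f] for m, f in pairs])
    g = diffs.mean(axis=0)
    return g / np.linalg.norm(g)


def neutralize(vec: np.ndarray, g: np.ndarray) -> np.ndarray:
    """Remove the component of `vec` along unit vector `g`, then rescale to unit length."""
    v = vec - np.dot(vec, g) * g
    return v / np.linalg.norm(v)


# Hypothetical 3-d toy embeddings, for illustration only.
emb = {
    "he": np.array([1.0, 0.0, 0.2]),
    "she": np.array([-1.0, 0.0, 0.2]),
    "doctor": np.array([0.4, 0.9, 0.1]),
}
neutral_words = {"doctor"}  # words that should carry no gender information

g = gender_direction(emb, [("he", "she")])
debiased = {w: neutralize(v, g) if w in neutral_words else v for w, v in emb.items()}

# Projection of "doctor" onto the gender direction, before and after neutralizing.
print("before:", float(np.dot(emb["doctor"], g)))
print("after: ", float(np.dot(debiased["doctor"], g)))
```

Only words judged gender-neutral (here, "doctor") are projected; definitional words such as "he" keep their gender component, since removing it would erase legitimate meaning.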

Implications and Future Directions

The results show that neural NLP systems carry non-trivial gender bias and provide empirical evidence for adopting counterfactual data augmentation to mitigate it. Because CDA operates on the training data rather than on a particular architecture, the findings are relevant across many NLP applications and to the broader goal of building fairer, more inclusive technologies.

The paper opens several directions for further research. Bias in more complex models, such as neural machine translation systems, remains to be examined. Understanding the mechanisms by which models acquire bias could also make it possible to build bias constraints directly into model architectures and training procedures.

In conclusion, the paper is a foundational contribution to identifying and addressing gender bias in NLP: it offers a diagnostic framework for measuring bias, an effective mitigation strategy in CDA, and groundwork for developing less biased NLP technologies.