Multimodal Transformer with Multi-View Visual Representation for Image Captioning

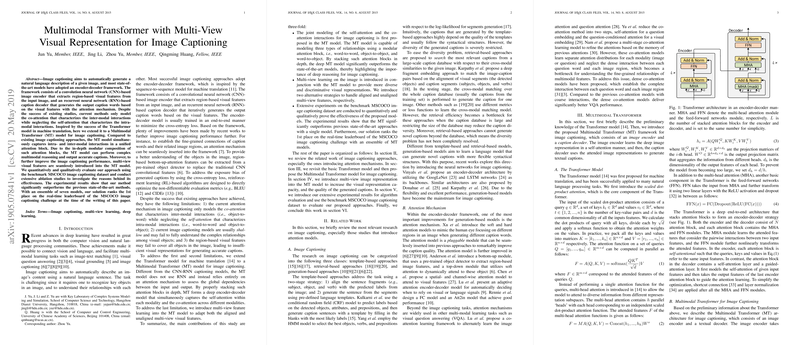

The paper addresses image captioning, a task that requires both visual understanding and language generation. Its central contribution is an adaptation of the Transformer, which is well established in natural language processing tasks such as machine translation, to the captioning setting. The resulting Multimodal Transformer (MT) framework integrates the image and text modalities within a single attention-based model, and the authors report that it does so more effectively than earlier encoder-decoder frameworks built from a convolutional neural network (CNN) encoder and a recurrent neural network (RNN) decoder. Through its unified attention mechanism, MT models both inter-modal interactions (between words and image regions) and intra-modal interactions (among regions, and among words).
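
The unified attention mechanism can be illustrated with scaled dot-product attention, the standard Transformer building block. The following is a minimal NumPy sketch, not the authors' implementation. It shows how one `attention` function yields intra-modal attention when queries, keys and values all come from one modality, and inter-modal attention when word queries attend over image-region keys and values. The feature sizes (36 regions, 12 words, width 64) and all helper names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v, mask=None):
    """Scaled dot-product attention: softmax(q k^T / sqrt(d)) v.

    q: (n_q, d), k: (n_k, d), v: (n_k, d_v).
    mask: optional boolean array (n_q, n_k); False entries are blocked.
    """
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)  # blocked positions get ~zero weight
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
regions = rng.standard_normal((36, 64))  # hypothetical: 36 region features, d=64
words = rng.standard_normal((12, 64))    # hypothetical: 12 word embeddings, d=64

# Intra-modal: queries, keys and values come from the same modality.
obj_ctx, _ = attention(regions, regions, regions)  # object-to-object
word_ctx, _ = attention(words, words, words)       # word-to-word
# Inter-modal: word queries attend over image-region keys/values.
grounded, w = attention(words, regions, regions)   # word-to-object
print(obj_ctx.shape, word_ctx.shape, grounded.shape, w.shape)
# (36, 64) (12, 64) (12, 64) (12, 36)
```

Only the source of the queries, keys and values changes between the three calls; this is what lets a single mechanism cover both kinds of interaction.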

Technical Contributions and Methodology

The MT framework uses self-attention to capture intra-modal relations (e.g., object-to-object and word-to-word) and inter-modal relations (e.g., word-to-object) within the same model. This departs from prior approaches, which focus on co-attention between modalities but largely overlook self-attention within each modality. The proposed architecture dispenses with recurrent layers entirely and relies on a stack of attention blocks to model dependencies within and across modalities. Because the blocks are modular, they can be stacked to greater depth, which enables deeper multimodal reasoning and, in turn, more accurate captions.
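
A stack of attention blocks without any recurrent layer can be sketched as a standard Transformer encoder-decoder. In the sketch below, which is illustrative and not the paper's code, encoder blocks apply self-attention over image regions and decoder blocks apply masked self-attention over words followed by guided (cross) attention to the encoded regions. The single attention head, random untrained weights, post-norm residual layout, width `D = 64`, and the class names `EncoderBlock`/`DecoderBlock` are all assumptions made for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 64  # hypothetical model width

def softmax(x):
    z = x - x.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-6):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

class Attn:
    """Single-head attention with random (untrained) projections."""
    def __init__(self):
        self.wq, self.wk, self.wv, self.wo = (
            rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(4))

    def __call__(self, x, y, mask=None):
        # Queries come from x; keys and values come from y.
        q, k, v = x @ self.wq, y @ self.wk, y @ self.wv
        s = q @ k.T / np.sqrt(D)
        if mask is not None:
            s = np.where(mask, s, -1e9)
        return softmax(s) @ v @ self.wo

class FFN:
    """Position-wise feed-forward layer with a ReLU."""
    def __init__(self, hidden=4 * D):
        self.w1 = rng.standard_normal((D, hidden)) / np.sqrt(D)
        self.w2 = rng.standard_normal((hidden, D)) / np.sqrt(hidden)

    def __call__(self, x):
        return np.maximum(x @ self.w1, 0.0) @ self.w2

class EncoderBlock:
    """Self-attention over image regions (object-to-object), then FFN."""
    def __init__(self):
        self.sa, self.ff = Attn(), FFN()

    def __call__(self, x):
        x = layer_norm(x + self.sa(x, x))
        return layer_norm(x + self.ff(x))

class DecoderBlock:
    """Masked self-attention over words, then guided attention to regions."""
    def __init__(self):
        self.sa, self.ga, self.ff = Attn(), Attn(), FFN()

    def __call__(self, w, x):
        n = w.shape[0]
        causal = np.tril(np.ones((n, n), dtype=bool))  # word t sees words <= t
        w = layer_norm(w + self.sa(w, w, causal))      # word-to-word
        w = layer_norm(w + self.ga(w, x))              # word-to-object
        return layer_norm(w + self.ff(w))

def encode_decode(regions, words, depth=6):
    enc = [EncoderBlock() for _ in range(depth)]
    dec = [DecoderBlock() for _ in range(depth)]
    for blk in enc:
        regions = blk(regions)
    for blk in dec:
        words = blk(words, regions)  # each decoder block reads the final encoder output
    return words

out = encode_decode(rng.standard_normal((36, D)), rng.standard_normal((12, D)), depth=2)
print(out.shape)  # (12, 64)
```

The `depth` argument is where the number of attention blocks enters. The causal mask keeps each word from attending to later words, which is what allows captions to be generated left to right without recurrence.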

Another key element is the use of multi-view visual features, extracted by several object detectors with different backbones, to increase the representation capacity of the image encoder. Two variants are presented. The aligned multi-view encoder uses a single shared set of pre-computed bounding boxes, so every detector describes the same regions. The unaligned multi-view encoder lets each detector keep its own boxes and aligns the resulting feature sets adaptively. The unaligned variant is the more flexible of the two: it preserves the diversity of the regions proposed by the different detectors rather than forcing them onto one set of boxes.
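
The difference between the two encoders shows up in the tensor shapes. In the hedged sketch below, aligned views share the same N boxes, so row i of every view refers to the same region and the projected features can be combined row by row. Unaligned views have different box counts, so some alignment step is needed before fusion. The alignment mechanism used here, cross-attention from an anchor view's regions to each other view, is an assumption chosen for illustration and is not necessarily the paper's module. Summation as the fusion operator, random untrained projections, and the detector feature widths are likewise hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 64  # hypothetical common width after projection

def softmax(x):
    z = x - x.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def project(feats, d_out=D):
    # Random (untrained) linear map from a detector's native width to D.
    w = rng.standard_normal((feats.shape[1], d_out)) / np.sqrt(feats.shape[1])
    return feats @ w

def fuse_aligned(views):
    """Aligned: all views describe the SAME N boxes, so row i of each view
    is the same region and the projected features are summed row by row."""
    n = views[0].shape[0]
    if any(v.shape[0] != n for v in views):
        raise ValueError("aligned views must share the same N boxes")
    return sum(project(v) for v in views)

def fuse_unaligned(views, anchor=0):
    """Unaligned (assumed mechanism): each view keeps its own boxes. Regions
    of the anchor view act as queries and cross-attend to every other view,
    giving one attended row per anchor region that is added to the anchor."""
    proj = [project(v) for v in views]
    q = proj[anchor]
    fused = q.copy()
    for m, kv in enumerate(proj):
        if m == anchor:
            continue
        weights = softmax(q @ kv.T / np.sqrt(D))  # (N_anchor, N_m)
        fused += weights @ kv                     # (N_anchor, D)
    return fused

# Hypothetical detectors with different backbones, box counts and widths.
aligned_views = [rng.standard_normal((36, 2048)), rng.standard_normal((36, 1024))]
unaligned_views = [rng.standard_normal((36, 2048)),
                   rng.standard_normal((50, 1024)),
                   rng.standard_normal((20, 512))]
print(fuse_aligned(aligned_views).shape)      # (36, 64)
print(fuse_unaligned(unaligned_views).shape)  # (36, 64)
```

The aligned path is simpler but requires one detector's boxes to be reused by all others. The unaligned path accepts each view's own proposals, which is what allows it to retain the regions that only some detectors find.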

Empirical Results

The MT model was evaluated extensively on the MSCOCO benchmark, where it outperformed prior methods on standard captioning metrics, including BLEU, METEOR, and CIDEr. An ensemble of MT models ranked first on the online MSCOCO leaderboard at the time of submission. Qualitative analyses support the quantitative results: attention maps show that MT attends to the image regions that correspond to the words being generated, which illustrates its fine-grained captioning capability.

Ablation studies examine how performance depends on the model configuration, including the number of attention blocks and the use of multi-view features. The findings indicate that increasing the depth of the model (i.e., the number of attention blocks) substantially improves results, and that unaligned multi-view features yield measurable gains over single-view inputs.

Implications and Future Directions

The paper has both practical and theoretical implications. Practically, the MT framework's ability to capture the interplay between visual features and linguistic structure could benefit real-world applications such as AI-assisted content creation, image search, and accessibility technologies. Theoretically, the work sets a precedent for transformer-based models in multimodal settings and suggests that more sophisticated attention mechanisms are a promising route to more capable multimodal AI systems.

Looking ahead, extending the MT approach to modalities beyond vision and language, such as audio or haptic data, could broaden its range of applications. Further refinement also holds promise, for example reducing computational cost while maintaining performance, or exploring variations of the transformer architecture. More broadly, the research opens pathways for continued work at the intersection of deep learning, computer vision, and natural language processing.