An Analysis of "Unsupervised Data Augmentation for Consistency Training" by Xie et al.

The paper "Unsupervised Data Augmentation for Consistency Training," authored by Qizhe Xie and colleagues, addresses a significant challenge in deep learning: the need for large amounts of labeled data for training models effectively. The authors propose a novel technique named Unsupervised Data Augmentation (UDA), which leverages advanced data augmentation methods within a consistency training framework, demonstrating substantial improvements in various semi-supervised learning tasks across both language and vision domains.

Key Contributions

The authors make several key contributions to the field of semi-supervised learning:

- Conceptual Advancement in Data Augmentation for SSL: They explore the efficacy of using advanced data augmentation methods, such as RandAugment for vision and back-translation for text, over traditional noising techniques (e.g., Gaussian noise, dropout). This is a marked shift from earlier approaches where simpler forms of noising were prevalent in consistency training frameworks.

- Empirical Validation Across Diverse Tasks: UDA is validated on a suite of language and vision tasks. On the IMDb text classification dataset, with only 20 labeled examples, UDA achieves an error rate of 4.20%, outperforming the previous state-of-the-art model trained on 25,000 labeled examples. On the CIFAR-10 semi-supervised benchmark, UDA achieves an error rate of 5.43% with only 250 labeled examples, outperforming previous methods.

- Robustness and Transfer Learning: UDA combines well with transfer learning, for example when fine-tuning from BERT on text tasks. It also yields improvements when large amounts of labeled data are available. On ImageNet, UDA improves top-1 accuracy from 58.84% to 68.78% with 10% labeled data, and from 78.43% to 79.05% when using the full labeled set alongside 1.3 million unlabeled examples from an external dataset.

Methodological Insights

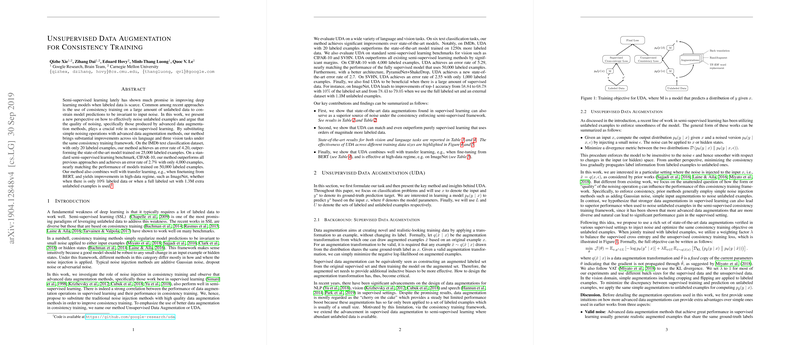

The core of UDA rests on improving the data augmentation process for unlabeled data within the consistency training framework. The idea is to replace traditional noise injections with advanced data augmentation operations validated in supervised settings:

- RandAugment: For image classification, UDA employs RandAugment, which samples image transformations at random from a fixed set of operations. This yields diverse augmented examples without needing a search algorithm to optimize augmentation policies.

- Back-Translation: For text classification, back-translation (translating a sentence into another language and then back into the original) is used to create paraphrases that retain the semantic content while introducing meaningful variability. The augmented sentences are then used to enforce consistency in model predictions.
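To make the consistency training idea concrete, here is a minimal sketch of how the two loss terms might be combined: a supervised cross-entropy on labeled examples plus a weighted KL divergence between the model's prediction on an unlabeled example and its prediction on an augmented version of it. The function names, the weight `lam`, and the use of plain Python lists of logits are illustrative assumptions, not the authors' code. Any additional training techniques the paper uses are omitted.

```python
import math

def log_softmax(logits):
    # Numerically stable log-softmax for one example (a list of floats).
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]

def cross_entropy(logits, label):
    # Negative log-probability assigned to the true class.
    return -log_softmax(logits)[label]

def kl_divergence(target_logits, pred_logits):
    # KL(p_target || p_pred), with both distributions given as logits.
    log_p = log_softmax(target_logits)
    log_q = log_softmax(pred_logits)
    return sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))

def uda_style_loss(sup_logits, sup_labels, orig_logits, aug_logits, lam=1.0):
    # Supervised term: mean cross-entropy over the labeled batch.
    sup = sum(cross_entropy(l, y) for l, y in zip(sup_logits, sup_labels))
    sup /= len(sup_labels)
    # Consistency term: mean KL between the prediction on each unlabeled
    # example and the prediction on its augmented version. In a real
    # framework the prediction on the original example would be treated
    # as a fixed target (no gradient flows through it); that detail is
    # an assumption here and has no effect in this gradient-free sketch.
    cons = sum(kl_divergence(o, a) for o, a in zip(orig_logits, aug_logits))
    cons /= len(orig_logits)
    return sup + lam * cons, sup, cons

# Hypothetical logits: two labeled examples and one unlabeled example
# together with the model's output on its augmented version.
total, sup, cons = uda_style_loss(
    sup_logits=[[2.0, 0.0], [0.0, 1.0]],
    sup_labels=[0, 1],
    orig_logits=[[1.0, 0.0]],
    aug_logits=[[0.2, 0.4]],
    lam=1.0,
)
print(total, sup, cons)
```

The consistency term is zero when the model predicts the same distribution for an example and its augmentation, and grows as the two predictions diverge, which is what pushes the model to be invariant to the augmentation.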

Theoretical Underpinnings

The paper sketches a theoretical justification for why advanced data augmentation helps semi-supervised learning. The argument treats examples as nodes in a graph, with an edge between two examples when one can be reached from the other through augmentation. Better augmentations create a more connected graph, so label information from a few labeled examples can propagate to more of the dataset. This is consistent with their empirical findings, where augmentation diversity correlates strongly with improved performance.
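The connectivity argument can be illustrated with a toy example. The sketch below is not the paper's formal analysis. It assumes a small hypothetical graph in which an edge means one example can be turned into the other by augmentation, and it counts connected components with a union-find structure. If labels propagate only within a component, at least one labeled example is needed per component, so fewer components means fewer labels are required.

```python
def count_components(num_nodes, edges):
    # Union-find over nodes 0..num_nodes-1.
    parent = list(range(num_nodes))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for a, b in edges:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b

    return len({find(i) for i in range(num_nodes)})

# Six hypothetical examples: 0-2 belong to one class, 3-5 to another.
# A weak augmentation links only a few examples to each other.
weak_aug_edges = [(0, 1), (3, 4)]
# A more diverse augmentation links every example within its class.
strong_aug_edges = [(0, 1), (1, 2), (3, 4), (4, 5)]

print(count_components(6, weak_aug_edges))    # 4
print(count_components(6, strong_aug_edges))  # 2
```

In this toy setting the weak augmentation leaves four components, so four labeled examples would be needed to cover every example, while the stronger augmentation needs only two, one per class.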

Numerical Results and Interpretation

The paper reports strong numerical results across multiple benchmarks:

- On CIFAR-10, UDA achieves a 5.43% error rate with 250 labeled examples, outperforming previous semi-supervised methods on this benchmark.

- On IMDb, with only 20 labeled examples, UDA attains a 4.20% error rate, outperforming the previous state-of-the-art model trained on 25,000 labeled examples.

- On ImageNet, UDA improves top-1 accuracy in both regimes: by about 10 points (58.84% to 68.78%) with 10% of the labels, and by about 0.6 points (78.43% to 79.05%) with the full labeled set plus external unlabeled data. The gain is largest when labels are scarce but persists at scale.

Implications and Future Directions

The practical implications are most significant in scenarios where labeled data is scarce. Because UDA can turn large volumes of unlabeled data into performance gains, it can lower the annotation cost of training strong deep learning models, which matters most for groups with limited resources for data annotation.

Theoretically, the findings imply that the benefits of advanced data augmentation in supervised learning extend to semi-supervised contexts. This raises several avenues for future exploration:

- Enhancing augmentation strategies: Investigating other state-of-the-art augmentations like mixup and augmentations tuned specifically for various domains.

- Cross-domain applications: Leveraging UDA for semi-supervised learning in other data-rich but label-scarce environments like medical imaging.

- Combining UDA with unsupervised representation learning techniques: This could further enhance the performance by leveraging the strengths of both paradigms.

In conclusion, “Unsupervised Data Augmentation for Consistency Training” by Xie et al. provides significant insights and methodologies that push the boundaries of semi-supervised learning. By introducing advanced data augmentation techniques into the consistency training framework, the authors lay a robust foundation that can be built upon for future advancements in AI and deep learning.