BioBERT: A Pre-trained Biomedical Language Representation Model for Biomedical Text Mining

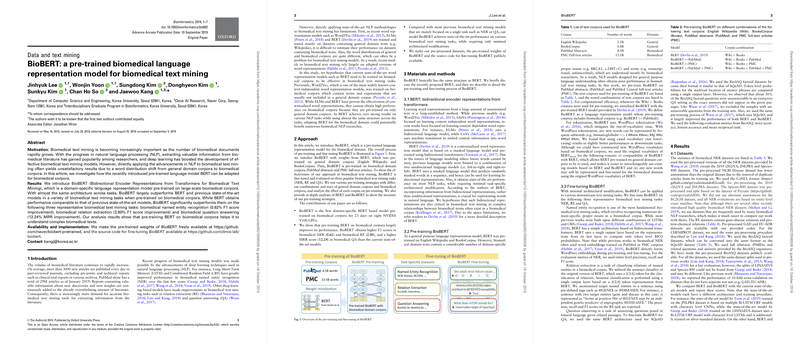

The paper "BioBERT: a pre-trained biomedical language representation model for biomedical text mining" introduces BioBERT, a language representation model pre-trained on biomedical corpora to improve performance on biomedical text mining tasks. General-purpose models such as BERT often perform poorly when applied directly to biomedical text, because biomedical corpora differ from general-domain corpora in both terminology and word distribution. The paper investigates how to close this gap by further pre-training BERT on domain-specific corpora.

Motivation

The rapid growth of the biomedical literature has created demand for text mining tools that can extract useful information from it. NLP models pre-trained on general-domain corpora such as Wikipedia and BooksCorpus degrade considerably on biomedical text, which is dense with domain-specific terms such as gene, protein, and disease names. This shift in word distribution between general and biomedical text is what motivates domain-specific models.

Approach

BioBERT is initialized with the weights of the original BERT model, which was pre-trained on general-domain corpora, and is then further pre-trained on large-scale biomedical corpora: PubMed abstracts and PMC full-text articles. This pre-training took 23 days on eight NVIDIA V100 GPUs. BioBERT is then fine-tuned and evaluated on three representative biomedical text mining tasks: named entity recognition (NER), relation extraction (RE), and question answering (QA).
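To make the continued pre-training step more concrete, the sketch below shows the token corruption used by BERT's masked-language-model objective, assuming BioBERT keeps this objective when it is further trained on PubMed and PMC text. The 15% selection rate and the 80/10/10 split between [MASK], a random token, and the unchanged token are BERT's published defaults. The function name `mask_tokens`, the toy vocabulary, the word-level (rather than WordPiece) tokens, and the independent per-token sampling (a simplification of BERT's fixed-count sampling) are assumptions for illustration.

```python
import random
from typing import List, Optional, Sequence, Tuple

MASK = "[MASK]"
SPECIAL = {"[CLS]", "[SEP]", "[PAD]"}  # special tokens are never selected


def mask_tokens(
    tokens: Sequence[str],
    vocab: Sequence[str],
    mask_prob: float = 0.15,
    seed: Optional[int] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Hypothetical helper: corrupt tokens for a BERT-style masked-LM step.

    Each non-special token is selected independently with probability
    `mask_prob` (a simplification; BERT's data pipeline picks a fixed number
    of positions per sequence). A selected token becomes [MASK] 80% of the
    time, a random vocabulary token 10% of the time, and stays unchanged 10%
    of the time. `labels` holds the original token at selected positions and
    None elsewhere, so a training loss would only cover selected positions.
    """
    rng = random.Random(seed)
    corrupted: List[str] = []
    labels: List[Optional[str]] = []
    for tok in tokens:
        if tok in SPECIAL or rng.random() >= mask_prob:
            corrupted.append(tok)  # not selected: keep as-is, no prediction target
            labels.append(None)
            continue
        labels.append(tok)  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            corrupted.append(MASK)
        elif roll < 0.9:
            corrupted.append(rng.choice(list(vocab)))
        else:
            corrupted.append(tok)
    return corrupted, labels


if __name__ == "__main__":
    sentence = ["[CLS]", "BRCA1", "mutations", "increase", "breast", "cancer", "risk", "[SEP]"]
    toy_vocab = ["protein", "gene", "tumor", "cell"]  # illustrative only
    inputs, targets = mask_tokens(sentence, toy_vocab, seed=42)
    print(inputs)
    print(targets)
```

Under this assumption, moving from BERT to BioBERT changes the text fed into this step, not the objective itself, which is why BioBERT can start from BERT's weights and simply continue training.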

Results

BioBERT outperforms both the original BERT and previous state-of-the-art models on a variety of biomedical text mining tasks. Compared with the previous state of the art, it achieved:

- Biomedical named entity recognition (NER): a 0.62% improvement in F1 score.

- Biomedical relation extraction (RE): a 2.80% improvement in F1 score.

- Biomedical question answering (QA): a 12.24% improvement in mean reciprocal rank (MRR).
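The gains above are measured with F1 (NER and RE) and mean reciprocal rank (QA). As a rough illustration of what those numbers measure, here is a minimal sketch of both metrics, assuming exact-match entity-level scoring for NER over BIO-tagged tokens and a top-k cutoff for ranked QA answers (k = 5 here, an assumed default). The function names, entity type names, and toy inputs are hypothetical; official benchmark scripts may differ in details such as repairing malformed tag sequences or normalizing answer strings.

```python
from typing import Optional, Sequence, Set, Tuple

Span = Tuple[int, int, str]  # (start, end_exclusive, entity_type)


def bio_to_spans(tags: Sequence[str]) -> Set[Span]:
    """Decode BIO tags (e.g. B-Disease, I-Disease, O) into entity spans."""
    spans: Set[Span] = set()
    start: Optional[int] = None
    etype: Optional[str] = None
    for i, tag in enumerate(list(tags) + ["O"]):  # trailing "O" closes the last entity
        if tag.startswith("I-") and tag[2:] == etype:
            continue  # the current entity continues
        if etype is not None:
            spans.add((start, i, etype))
            start, etype = None, None
        if tag.startswith(("B-", "I-")):
            # a stray I- tag without a matching B- is treated as a new entity
            start, etype = i, tag[2:]
    return spans


def precision_recall_f1(gold: Set[Span], pred: Set[Span]) -> Tuple[float, float, float]:
    """Exact-match precision, recall and F1 over predicted items."""
    tp = len(gold & pred)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def mean_reciprocal_rank(
    ranked_predictions: Sequence[Sequence[str]],
    gold_answers: Sequence[Set[str]],
    k: int = 5,
) -> float:
    """Mean over questions of 1/rank of the first correct answer in the top k (0 if absent)."""
    if len(ranked_predictions) != len(gold_answers):
        raise ValueError("need one ranked list per question")
    if not gold_answers:
        return 0.0
    total = 0.0
    for preds, gold in zip(ranked_predictions, gold_answers):
        for rank, answer in enumerate(preds[:k], start=1):
            if answer in gold:
                total += 1.0 / rank
                break
    return total / len(gold_answers)


if __name__ == "__main__":
    gold = bio_to_spans(["B-Disease", "I-Disease", "O", "B-Chemical"])
    pred = bio_to_spans(["B-Disease", "I-Disease", "O", "O"])
    print(precision_recall_f1(gold, pred))  # precision 1.0, recall 0.5, F1 about 0.667
    print(mean_reciprocal_rank([["BRCA1", "TP53"], ["insulin"]], [{"TP53"}, {"insulin"}]))  # 0.75
```

The same `precision_recall_f1` helper could score RE by treating each predicted positive relation instance as an item; MRR rewards a system for ranking the correct answer near the top even when it is not first.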

These results indicate that domain-specific pre-training lets BioBERT handle complex biomedical text considerably better than general-domain models.

Implications

The research has both practical and theoretical implications. In practice, BioBERT can improve the accuracy of biomedical information extraction systems, potentially accelerating research and discovery in the biomedical field. On the theoretical side, the results suggest that domain-specific pre-training is an important factor when adapting pre-trained language models to specialized domains. Furthermore, the public release of BioBERT's pre-trained weights and fine-tuning source code allows the wider scientific community to build on and improve the model.

Future Developments

BioBERT's success points to a promising direction for domain-specific language models. Future developments could include:

- Expansion into other specialized fields (e.g., legal or financial text mining).

- Enhancing the model with additional biomedical corpora to further improve its domain adaptation.

- Investigating the integration of BioBERT with other advanced NLP techniques such as reinforcement learning.

In conclusion, BioBERT is a significant advance in applying deep learning to the biomedical domain, delivering substantial improvements on NER, RE, and QA. Its publicly released pre-trained weights and fine-tuning resources let the biomedical research community continue to improve and apply the model.