BioMegatron: Larger Biomedical Domain Language Model

The paper "BioMegatron: Larger Biomedical Domain Language Model" explores language models tailored to the biomedical domain. Recognizing the particular challenges and opportunities of biomedical NLP, the authors investigate two underexplored aspects: model size and domain-specific pre-training.

Key Contributions

This paper builds on the success of domain-specific transformers such as BioBERT and SciBERT. Whereas previous work mainly applied existing architectures to domain-specific data, this paper examines the interplay between sub-word vocabulary, model size, pre-training corpora, and domain transfer.

Model Variants and Configurations:

- BioMegatron Models:

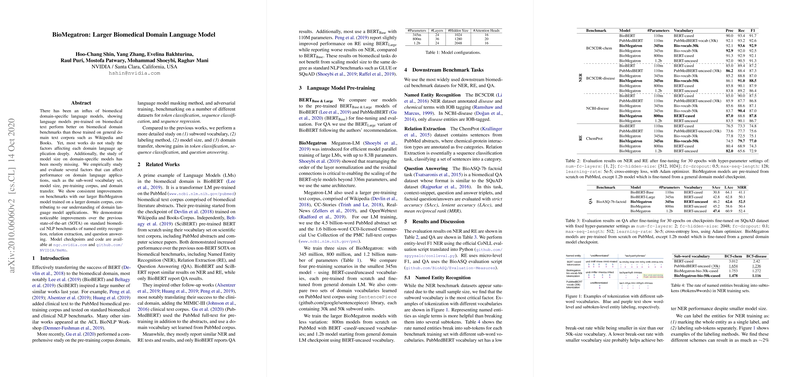

- The authors developed three sizes of the BioMegatron model, with parameter counts of 345 million, 800 million, and 1.2 billion.

- The models were pre-trained using the Megatron-LM framework, known for efficiently training LLMs.

- Pre-training Corpus:

- Utilized a corpus comprising 4.5 billion PubMed abstracts and the 1.6 billion-word PMC full-text corpus.

- Different configurations were tested: cased and uncased BERT vocabularies, and domain-specific vocabularies with 30k and 50k subword units.
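Vocabulary size and model size both feed into the parameter count, which is one reason they are worth studying together. The sketch below estimates the parameters of a BERT-style encoder. The layout (feed-forward width of 4× the hidden size, learned position embeddings, two token types, no pre-training heads) and the 24-layer, 1024-hidden shape are assumptions chosen to resemble a BERT-large-like 345M configuration, not the paper's exact architecture; the helper `encoder_params` is hypothetical.

```python
def encoder_params(num_layers: int, hidden: int, vocab_size: int,
                   max_positions: int = 512, type_vocab: int = 2) -> int:
    """Rough trainable-parameter count for a BERT-style encoder (no pre-training heads)."""
    h = hidden
    # Token, position, and token-type embedding tables, plus one LayerNorm (gain + bias).
    embeddings = (vocab_size + max_positions + type_vocab) * h + 2 * h
    # Self-attention: Q, K, V, and output projections, each an h x h matrix plus bias.
    attention = 4 * (h * h + h)
    # Feed-forward block: h -> 4h -> h, with biases (the 4x width is an assumption).
    ffn = (h * 4 * h + 4 * h) + (4 * h * h + h)
    # Two LayerNorms per layer, each with a gain and a bias vector.
    norms = 2 * (2 * h)
    return embeddings + num_layers * (attention + ffn + norms)


# Assumed BERT-large-like shape (24 layers, hidden size 1024); only the vocabulary changes.
for vocab in (30_000, 50_000):
    n = encoder_params(num_layers=24, hidden=1024, vocab_size=vocab)
    print(f"vocab={vocab}: ~{n / 1e6:.0f}M parameters")
```

Under these assumptions the estimate is about 334M parameters with a 30k vocabulary and about 354M with a 50k vocabulary, so the larger vocabulary alone adds roughly 20M parameters through the token-embedding table. The figures will not match the paper's nominal sizes exactly, since the sketch ignores the MLM head and any vocabulary padding the framework may apply.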

Evaluation and Results

The paper details extensive evaluations on standard biomedical NLP benchmarks, including Named Entity Recognition (NER), Relation Extraction (RE), and Question Answering (QA).

- NER Performance: The results showed that subword vocabulary significantly affects NER tasks. BioMegatron's vocabulary setup provided notable improvements in F1 scores.

- RE Performance: Larger models improved RE performance; together with the NER results, this shows that vocabulary and model size both need to be considered.

- QA Performance: Larger models gave better results, though the gains became marginal beyond a certain size.
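One way to see why the subword vocabulary can matter for NER is to tokenize biomedical entity names with and without domain terms in the vocabulary. The sketch below implements BERT-style greedy longest-match-first WordPiece tokenization; the function name `wordpiece` and both toy vocabularies are hypothetical and far smaller than the vocabularies the paper compares.

```python
def wordpiece(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first WordPiece tokenization of a single word."""
    pieces: list[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking it until it is in the vocabulary.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # word-internal pieces carry the "##" prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # as in BERT, a word that cannot be fully matched becomes one [UNK]
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabularies, for illustration only.
GENERAL_VOCAB = {"ace", "##ta", "##min", "##op", "##hen", "ne", "##ph", "##rop", "##athy"}
DOMAIN_VOCAB = GENERAL_VOCAB | {"acetaminophen", "nephro", "##pathy"}

for word in ("acetaminophen", "nephropathy"):
    print(word, wordpiece(word, GENERAL_VOCAB), wordpiece(word, DOMAIN_VOCAB))
```

With the general toy vocabulary, "acetaminophen" breaks into five fragments, while the domain vocabulary keeps it whole and splits "nephropathy" into two meaningful pieces. Fewer, more coherent pieces per entity mention are one plausible reason vocabulary affects NER; this is an illustration, not an analysis taken from the paper.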

Notably, BioMegatron improved on the previous state of the art in several benchmarks, supporting the benefits of larger models and biomedical-specific vocabularies.

Implications and Future Directions

This research has several implications for AI and NLP and points to avenues for future work:

- Domain-Specific Optimization: The results suggest that domain-specific vocabularies and tailored model configurations are critical for strong performance in specialized areas such as biomedical NLP.

- Scalability Considerations: While larger models generally perform better, the benefit of scale varies by task, so the relationship between model size and performance is not straightforward, especially for domain-specific tasks.

- Cross-Domain Generalization: Findings indicate that domain-specific models might be less versatile across different domains. Future studies could investigate cost-effective ways to enhance cross-domain efficacy without compromising domain-specific performance.

This work advances the understanding of NLP in specialized domains and reinforces the need for language models designed around the characteristics of the target corpus. Its findings point to concrete directions for improving biomedical NLP, with value for both research and practical applications.