Probing Sentence Embeddings for Linguistic Properties

To understand which linguistic properties sentence embeddings encode, Conneau et al., in "What you can cram into a single vector: Probing sentence embeddings for linguistic properties", present an empirical study that examines such embeddings with a set of probing tasks. Each probing task trains a simple classifier on top of fixed sentence embeddings to predict one property of the sentence. The classifier's accuracy then indicates how much syntactic or semantic information of that kind the embedding retains.
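
The probing setup can be sketched as a small classifier fitted on frozen embeddings. This is a minimal sketch, not the authors' code. It assumes pre-computed embeddings given as lists of floats and a binary property, and it uses a logistic-regression probe trained with plain SGD. The paper's own classifiers and hyperparameters may differ, and the names `train_probe` and `probe_accuracy` are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_probe(X: Sequence[Vector], y: Sequence[int], epochs: int = 200,
                lr: float = 0.5, seed: int = 0) -> Tuple[Vector, float]:
    """Fit a binary logistic-regression probe on frozen embeddings (labels in {0, 1}).

    Only the probe's weights are learned; the embeddings X are never modified.
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            z = sum(wj * xj for wj, xj in zip(w, X[i])) + b
            g = sigmoid(z) - y[i]  # derivative of the log loss w.r.t. z
            w = [wj - lr * g * xj for wj, xj in zip(w, X[i])]
            b -= lr * g
    return w, b

def probe_accuracy(w: Vector, b: float, X: Sequence[Vector], y: Sequence[int]) -> float:
    """Fraction of examples where the probe's decision (z > 0) matches the label."""
    hits = sum(
        int((sum(wj * xj for wj, xj in zip(w, xi)) + b > 0) == bool(yi))
        for xi, yi in zip(X, y)
    )
    return hits / len(y)
```

Keeping the probe low-capacity is deliberate: if a simple classifier can read a property off the frozen vector, the property is plausibly encoded in the embedding rather than learned by the probe. In practice, accuracy would be measured on a held-out split.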

Overview of Probing Tasks

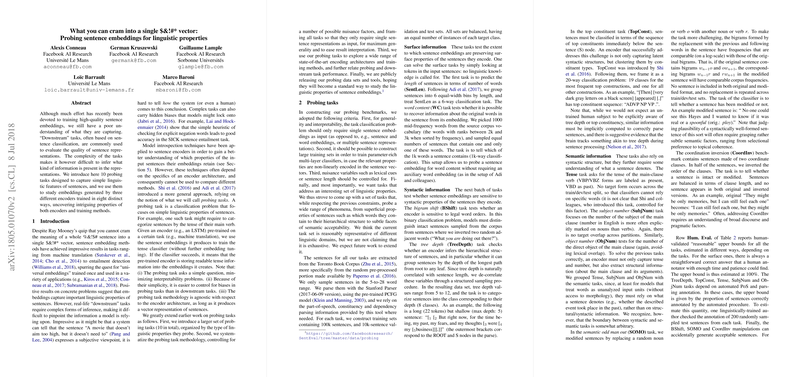

The authors introduce ten probing tasks that target surface, syntactic, and semantic information in sentence embeddings:

- Sentence Length (SentLen): Tests whether the embedding encodes sentence length, with the number of tokens grouped into length bins.

- Word Content (WC): Tests whether the embedding preserves which words a sentence contains; the classifier must identify which of 1,000 target words appears in the sentence.

- Bigram Shift (BShift): Tests sensitivity to word order by detecting sentences in which two adjacent words have been swapped.

- Tree Depth (TreeDepth): Tests whether the embedding captures hierarchical structure by predicting the maximum depth of the sentence's parse tree.

- Top Constituents (TopConst): Classifies sentences by the sequence of top constituents immediately below the sentence node of the parse tree.

- Tense: Identifies the tense (past or present) of the main-clause verb.

- Subject Number (SubjNum): Determines the grammatical number (singular or plural) of the main-clause subject.

- Object Number (ObjNum): Determines the grammatical number of the main-clause direct object.

- Semantic Odd Man Out (SOMO): Detects sentences in which a random noun or verb has been replaced by another noun or verb, producing a semantic anomaly.

- Coordination Inversion (CoordInv): Detects sentences made of two coordinate clauses whose order has been inverted.
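
Two of these tasks, BShift and CoordInv, are built by perturbing natural sentences. The sketch below shows one illustrative way to produce such perturbations. It assumes whitespace tokenization and, for CoordInv, a single coordinating conjunction that splits the sentence into exactly two clauses. `bigram_shift` and `coord_invert` are hypothetical helpers, and the paper's actual generation procedure may involve additional filtering not shown here.

```python
import random
from typing import List

def bigram_shift(tokens: List[str], rng: random.Random) -> List[str]:
    """BShift-style perturbation: swap one randomly chosen pair of adjacent tokens."""
    if len(tokens) < 2:
        raise ValueError("need at least two tokens to swap")
    i = rng.randrange(len(tokens) - 1)  # left index of the adjacent pair
    out = list(tokens)
    out[i], out[i + 1] = out[i + 1], out[i]
    return out

def coord_invert(tokens: List[str], conj: str = "and") -> List[str]:
    """CoordInv-style perturbation: swap the two clauses around `conj`.

    Assumes `conj` occurs exactly once and separates two complete clauses.
    """
    k = tokens.index(conj)
    left, right = tokens[:k], tokens[k + 1:]
    return right + [conj] + left

rng = random.Random(0)
print(bigram_shift("the cat sat on the mat".split(), rng))
print(coord_invert("they were tired and the road was long".split()))
# -> ['the', 'road', 'was', 'long', 'and', 'they', 'were', 'tired']
```

Both perturbations keep the bag of words unchanged, so a classifier can only solve these tasks if the embedding encodes order information.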

All tasks are built from sentences in the Toronto Book Corpus. Each task comes with 100k training, 10k validation, and 10k test sentences. The data sets are balanced across classes and controlled for nuisance factors such as sentence length and lexical content, so that the probes measure the intended linguistic property rather than incidental cues.
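
Class balancing of this kind can be sketched as per-class downsampling followed by fixed-size splits. This is a simplified illustration under stated assumptions: examples are `(sentence, label)` pairs, every class has enough examples, and the per-class split sizes are parameters whose defaults are arbitrary toy values, not the paper's. The helper name `balanced_splits` is hypothetical, and the control for lexical and length confounds is not shown.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Example = Tuple[str, Hashable]

def balanced_splits(
    examples: Sequence[Example],
    sizes: Tuple[int, int, int] = (8, 1, 1),
    seed: int = 0,
) -> Tuple[List[Example], List[Example], List[Example]]:
    """Draw the same number of examples per class and split them into train/dev/test.

    `sizes` gives the per-class counts for (train, dev, test).
    """
    rng = random.Random(seed)
    by_label: Dict[Hashable, List[Example]] = defaultdict(list)
    for ex in examples:
        by_label[ex[1]].append(ex)
    n_train, n_dev, n_test = sizes
    need = n_train + n_dev + n_test
    train, dev, test = [], [], []
    for label, items in by_label.items():
        if len(items) < need:
            raise ValueError(f"class {label!r} has {len(items)} examples, needs {need}")
        chosen = rng.sample(items, need)  # downsample without replacement
        train.extend(chosen[:n_train])
        dev.extend(chosen[n_train:n_train + n_dev])
        test.extend(chosen[n_train + n_dev:])
    for part in (train, dev, test):
        rng.shuffle(part)  # avoid long runs of a single label
    return train, dev, test
```

With balanced classes, majority-class guessing yields chance-level accuracy, which makes probe accuracies easier to interpret.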

Sentence Embedding Models and Training Methods

The paper evaluates three encoder architectures: BiLSTM-last, BiLSTM-max, and Gated ConvNet. BiLSTM-last represents a sentence by the final hidden states of the forward and backward passes, while BiLSTM-max takes an element-wise maximum over the hidden states at all positions. Each architecture is trained on several objectives to produce sentence embeddings: Neural Machine Translation (NMT) for three language pairs (En-Fr, En-De, En-Fi), autoencoding, Seq2Tree (predicting a linearized parse tree), SkipThought, and Natural Language Inference (NLI). Together, these objectives span both supervised and unsupervised training signals.
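
The two BiLSTM variants differ only in how per-position hidden states are pooled into one vector. The sketch below assumes the forward and backward hidden states have already been computed and are given as lists of per-position vectors; it does not implement an LSTM, the numbers are made up, and the function names are hypothetical. The Gated ConvNet encoder is not covered.

```python
from typing import List

Vector = List[float]

def bilstm_last(fwd: List[Vector], bwd: List[Vector]) -> Vector:
    """Concatenate the forward state at the last position with the backward
    state at the first position (where the right-to-left pass ends)."""
    return fwd[-1] + bwd[0]

def bilstm_max(fwd: List[Vector], bwd: List[Vector]) -> Vector:
    """Element-wise max over positions of the concatenated states [fwd_t; bwd_t]."""
    per_position = [f + b for f, b in zip(fwd, bwd)]
    return [max(column) for column in zip(*per_position)]

# Toy hidden states for a 3-token sentence, 2 units per direction.
fwd = [[0.1, 0.5], [0.3, -0.2], [0.2, 0.0]]
bwd = [[0.4, 0.1], [-0.1, 0.9], [0.0, 0.2]]
print(bilstm_last(fwd, bwd))  # -> [0.2, 0.0, 0.4, 0.1]
print(bilstm_max(fwd, bwd))   # -> [0.3, 0.5, 0.4, 0.9]
```

Max pooling lets every position contribute directly to the sentence vector, whereas the last-state variant depends on what the recurrent cells carry to the two ends of the sequence.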

Results and Analysis

The results show substantial variation in the linguistic properties captured by different models and training objectives. Notably, BiLSTM-max already captures a good deal of linguistic information when untrained, which suggests that its architecture provides a strong inductive bias. Models trained on NMT capture complex linguistic features better than those trained on NLI, even though NLI-trained models perform better on downstream NLP tasks.

The probing results are compared against several strong baselines, including Naive Bayes classifiers on tf-idf features and a Bag-of-Vectors (BoV) model that averages fastText word embeddings, which puts the performance of the trained encoders in perspective. One notable observation is that simple word-content and word-order information, although easy to capture, is highly useful in downstream NLP applications. This may reflect a tendency of current embeddings to over-rely on superficial sentence properties.
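
The Bag-of-Vectors baseline is simple enough to sketch directly: the sentence embedding is the mean of its word vectors. In the sketch below, a toy dictionary stands in for pre-trained fastText vectors (an assumption made to keep the example self-contained), out-of-vocabulary words are skipped, and `bag_of_vectors` is a hypothetical name.

```python
from typing import Dict, List, Sequence

def bag_of_vectors(tokens: Sequence[str], table: Dict[str, List[float]], dim: int) -> List[float]:
    """Average the vectors of in-vocabulary tokens; return zeros if none are known."""
    vecs = [table[t] for t in tokens if t in table]
    if not vecs:
        return [0.0] * dim
    return [sum(col) / len(vecs) for col in zip(*vecs)]

toy_table = {"cat": [1.0, 0.0], "sat": [0.0, 1.0]}  # stand-in for fastText vectors
print(bag_of_vectors("the cat sat".split(), toy_table, dim=2))  # -> [0.5, 0.5]
```

Because averaging ignores order, a BoV representation cannot distinguish a sentence from its BShift-perturbed version, since both contain the same words.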

Correlations with Downstream Tasks

The paper also evaluates the embedding methods on established downstream tasks (e.g., sentiment analysis, question classification, paraphrase detection). A correlation analysis between probing-task and downstream-task performance reveals an interesting pattern: word content correlates positively with downstream performance, and more complex syntactic and semantic tasks such as SOMO and CoordInv also correlate positively, albeit less strongly.

The correlation matrix suggests that, although deeper syntactic properties are valuable, success on downstream tasks may still depend heavily on shallower lexical features.
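
A probing-versus-downstream correlation matrix of this kind can be computed from per-model scores. The sketch below assumes each task's scores are listed in the same model order and uses Spearman rank correlation (Pearson correlation on average ranks, so ties are handled). Treat the choice of coefficient as an assumption of this sketch rather than a statement of the paper's exact procedure; all function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length score lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(va * vb)

def spearman(a: Sequence[float], b: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(a), average_ranks(b))

def correlation_matrix(probing: Dict[str, List[float]],
                       downstream: Dict[str, List[float]]) -> Dict[Tuple[str, str], float]:
    """Spearman correlation for every (probing task, downstream task) pair.

    Each list holds one score per model, in the same model order everywhere.
    """
    return {
        (p, d): spearman(p_scores, d_scores)
        for p, p_scores in probing.items()
        for d, d_scores in downstream.items()
    }
```

A rank-based coefficient only asks whether models that do better on a probing task also tend to do better downstream, which makes it insensitive to the different score scales of the tasks.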

Implications and Future Developments

By systematically probing sentence embeddings, the paper serves two purposes: it provides a diagnostic toolkit for assessing linguistic capabilities, and it points toward more robust, linguistically informed embedding techniques. Future work could extend the probing tasks to other languages and refine multi-task training so that embeddings improve on both probing and downstream tasks.

Conclusion

The paper offers valuable insight into the linguistic properties encoded by sentence embeddings through a methodical set of probing tasks. By comparing encoder architectures and training objectives, it gives a fine-grained picture of what these models capture, paving the way for more linguistically sophisticated embedding strategies.