- The paper introduces CheXNet, a 121-layer DenseNet whose F1 score for pneumonia detection on chest X-rays exceeds the average of the radiologists it was compared against.

- It uses dense connections to improve gradient flow and starts from ImageNet-pretrained weights, reaching an F1 score of 0.435 against a radiologist average of 0.387.

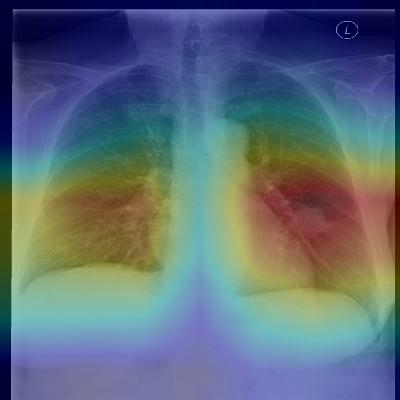

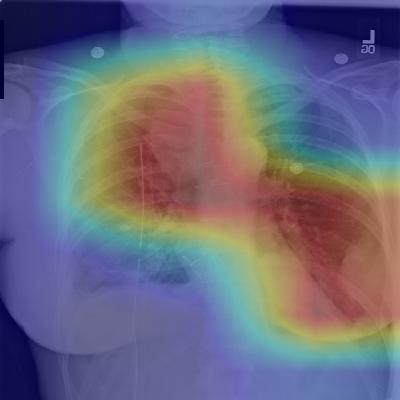

- The model is also extended to detect all 14 thoracic diseases in ChestX-ray14, and Class Activation Maps show which image regions drive each prediction.

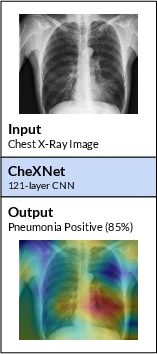

CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning

Overview

The paper "CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning" addresses the need for accurate, automated pneumonia detection from chest X-rays, a widely used diagnostic tool in clinical practice. Pneumonia carries significant morbidity and mortality, so timely diagnosis matters, especially in resource-limited settings where access to skilled radiologists is scarce. The paper introduces CheXNet, a 121-layer convolutional neural network that classifies pneumonia and other thoracic diseases from X-ray images, and benchmarks its performance against practicing radiologists.

Model Architecture and Training

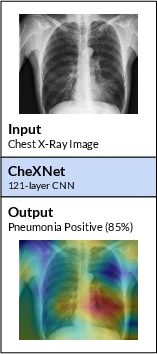

CheXNet is a 121-layer DenseNet. Dense connections feed each layer the feature maps of all preceding layers in its block, which improves the flow of information and gradients and makes very deep networks trainable. The network was trained on the ChestX-ray14 dataset, which contains over 100,000 frontal-view X-ray images labeled with up to 14 diseases. For pneumonia detection, the final fully connected layer has a single output followed by a sigmoid nonlinearity, which yields the probability of pneumonia. The weights are initialized from a model pretrained on ImageNet, and the network is trained with the Adam optimizer. The network is built entirely from standard convolutional operations.
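To make the dense connections and the sigmoid output concrete, here is a minimal pure-Python sketch. It only does bookkeeping, not training. The block configuration `(6, 12, 24, 16)`, growth rate `32`, and initial width `64` are the standard DenseNet-121 settings from the DenseNet paper, not values stated in this summary. The helper names (`densenet_channels`, `count_layers`, `pneumonia_probability`) are hypothetical.

```python
import math

# Assumed DenseNet-121 hyperparameters (from the DenseNet paper, not this summary).
GROWTH_RATE = 32                 # feature maps each dense layer adds
BLOCK_CONFIG = (6, 12, 24, 16)   # dense layers in each of the four dense blocks
INIT_FEATURES = 64               # channels after the initial convolution


def densenet_channels(init=INIT_FEATURES, growth=GROWTH_RATE, blocks=BLOCK_CONFIG):
    """Return the channel count at the end of each dense block.

    Within a block, each layer's output is concatenated onto its input, so the
    channel count grows by `growth` per layer. A transition layer between
    blocks halves the channel count.
    """
    channels = init
    per_block = []
    for i, num_layers in enumerate(blocks):
        channels += num_layers * growth
        per_block.append(channels)
        if i < len(blocks) - 1:   # no transition after the last block
            channels //= 2
    return per_block


def count_layers(blocks=BLOCK_CONFIG):
    """Stem conv + two convs per dense layer + one conv per transition + classifier."""
    return 1 + 2 * sum(blocks) + (len(blocks) - 1) + 1


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def pneumonia_probability(features, weights, bias):
    """Single-output fully connected layer followed by a sigmoid."""
    logit = sum(f * w for f, w in zip(features, weights)) + bias
    return sigmoid(logit)


print(densenet_channels())  # [256, 512, 1024, 1024]
print(count_layers())       # 121
```

Under these assumptions the final feature vector has 1024 channels, so the pneumonia head would be a 1024-to-1 linear layer whose logit is squashed to a probability by the sigmoid.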

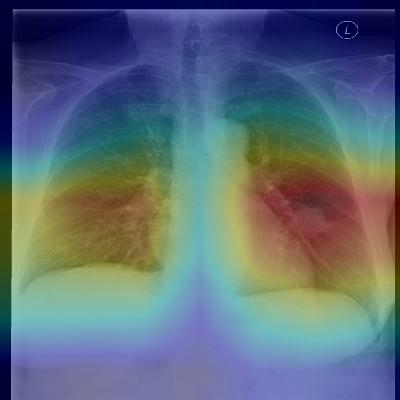

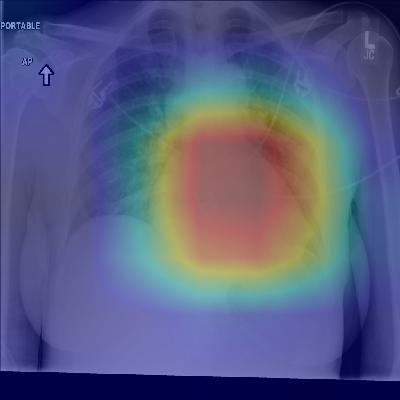

Figure 1: CheXNet is a 121-layer convolutional neural network that takes a chest X-ray image as input, and outputs the probability of a pathology.

CheXNet exceeds the average performance of the evaluated radiologists on the test set. It achieves an F1 score of 0.435 compared with the radiologist average of 0.387, a difference the paper reports as statistically significant. On this metric, the model discriminates between pneumonia and non-pneumonia cases at a level comparable to practicing radiologists.
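The comparison above rests on the F1 score, the harmonic mean of precision and recall. The following sketch shows how it is computed for binary pneumonia labels. The function name `f1_score` and the toy labels are illustrative only and are not data from the paper.

```python
def f1_score(y_true, y_pred):
    """F1 = 2*TP / (2*TP + FP + FN) for binary labels (1 = pneumonia)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    # With no positives in either list, F1 is undefined; return 0.0 by convention.
    return 2 * tp / denom if denom else 0.0


# Toy example (hypothetical labels): 2 true positives, 1 false positive, 1 false negative.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(round(f1_score(y_true, y_pred), 3))  # 0.667
```

Because F1 ignores true negatives, it suits tasks like this one where positive cases are relatively rare and plain accuracy would be dominated by the negative class.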

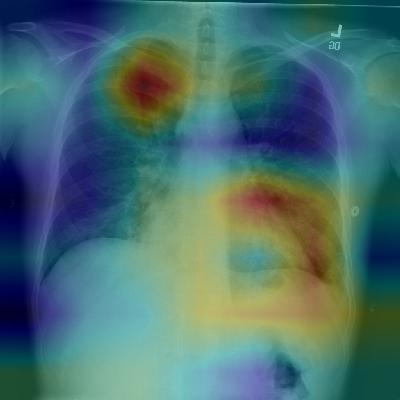

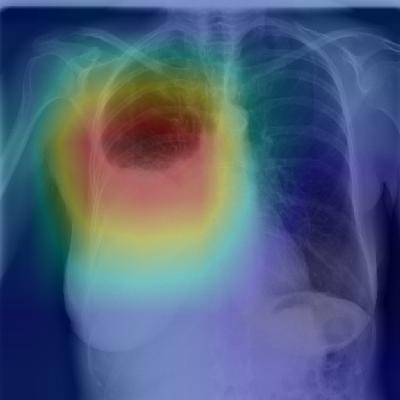

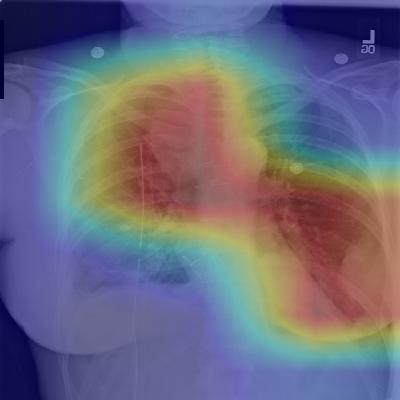

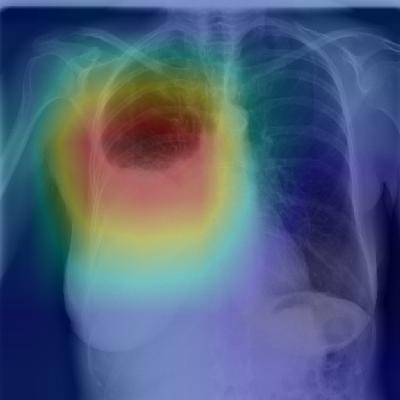

Figure 2: Patient with multifocal community-acquired pneumonia. The model correctly detects the airspace disease in the left lower and right upper lobes to arrive at the pneumonia diagnosis.

Multi-Pathology Detection

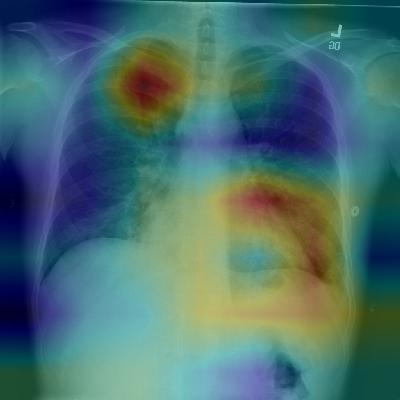

Beyond pneumonia, CheXNet can detect multiple pathologies simultaneously. Framed as multi-label classification, with one output per pathology, the model is reported to achieve state-of-the-art results on all 14 pathologies in the ChestX-ray14 dataset. This makes it applicable to diagnostic workflows that screen for several conditions at once.
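In a multi-label setup, each pathology gets its own independent sigmoid output rather than sharing a softmax, so several findings can be positive on the same image. The sketch below illustrates this. The label names follow the ChestX-ray14 dataset, while the `0.5` threshold, the function name `predict_pathologies`, and the example logits are assumptions made for illustration.

```python
import math

# The 14 ChestX-ray14 labels (order here is illustrative).
PATHOLOGIES = [
    "Atelectasis", "Cardiomegaly", "Effusion", "Infiltration", "Mass",
    "Nodule", "Pneumonia", "Pneumothorax", "Consolidation", "Edema",
    "Emphysema", "Fibrosis", "Pleural_Thickening", "Hernia",
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_pathologies(logits, threshold=0.5):
    """Map 14 logits to per-label probabilities and the labels above a threshold.

    Each label is scored independently, so the probabilities need not sum to 1.
    The threshold is an assumed value, not one taken from the paper.
    """
    if len(logits) != len(PATHOLOGIES):
        raise ValueError("expected one logit per pathology")
    probs = {name: sigmoid(z) for name, z in zip(PATHOLOGIES, logits)}
    positives = [name for name, p in probs.items() if p >= threshold]
    return probs, positives


# Hypothetical logits: strongly negative everywhere except two labels.
logits = [-2.0] * len(PATHOLOGIES)
logits[PATHOLOGIES.index("Effusion")] = 0.3
logits[PATHOLOGIES.index("Pneumonia")] = 1.5
probs, positives = predict_pathologies(logits)
print(positives)  # ['Effusion', 'Pneumonia']
```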

Model Interpretation and Clinical Implications

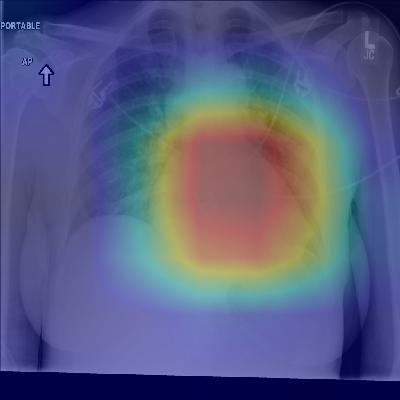

Class Activation Maps (CAMs) make CheXNet's predictions interpretable by highlighting the image regions most indicative of a given pathology. These visual explanations can help clinicians judge whether a prediction rests on the relevant part of the image, which supports use in point-of-care settings. The potential benefit is greatest in underserved regions that lack diagnostic expertise.
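A class activation map is a weighted sum of the final convolutional feature maps, with the weights taken from the output layer for the class of interest: M_c(x, y) = sum over k of w_k^c * f_k(x, y). The sketch below computes this on tiny nested lists. The function names and toy values are hypothetical, and a real pipeline would also upsample the map to the input resolution before overlaying it on the X-ray.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps: cam[y][x] = sum_k w_k * f_k[y][x].

    feature_maps: list of K maps, each a height x width nested list.
    class_weights: list of K output-layer weights for the chosen class.
    """
    if len(feature_maps) != len(class_weights):
        raise ValueError("need one weight per feature map")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, w in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += w * fmap[y][x]
    return cam


def normalize(cam):
    """Rescale the map to [0, 1] so it can be rendered as a heatmap."""
    flat = [v for row in cam for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in cam]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]


# Toy example: two 2x2 feature maps (hypothetical values).
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
class_weights = [0.5, 1.0]
print(normalize(class_activation_map(feature_maps, class_weights)))
# [[0.25, 0.0], [0.0, 1.0]]
```

In this toy case the bottom-right location dominates the map, which is the region a heatmap overlay would highlight as most influential for the class.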

Conclusion

CheXNet illustrates the potential of deep learning to augment diagnostic radiology, detecting pneumonia on the paper's test set at a level comparable to practicing radiologists. Its deployment could improve access to diagnosis and patient outcomes in settings with limited radiology resources. Future iterations may explore additional modalities and integration with patient history for further performance gains.