Analysis of the Need for Novel Evaluation Metrics in NLG

In "Why We Need New Evaluation Metrics for NLG," Novikova et al. analyze how well current automatic evaluation metrics for Natural Language Generation (NLG) systems work, with particular criticism of the widely used word-overlap-based metrics. They contend that these metrics, including BLEU, TER, ROUGE, and others, correlate only weakly with human judgment, particularly at the sentence level.

Core Investigation and Findings

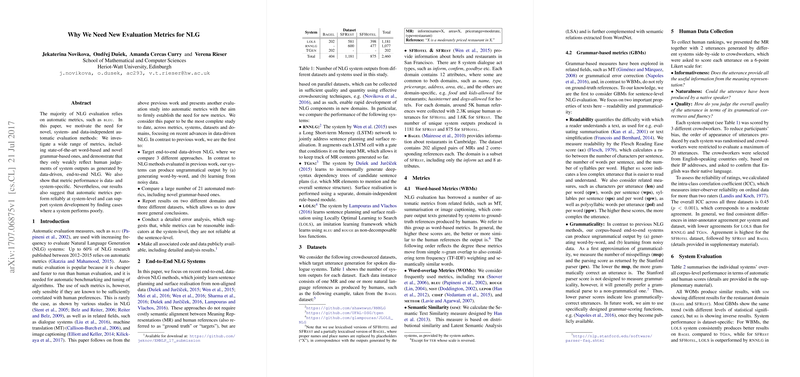

The authors conduct a comprehensive study comparing 21 automated metrics across multiple NLG systems, datasets, and domains. The study concludes that:

- Word-based Metrics (WBMs) such as BLEU are particularly ineffective at mirroring human ratings for sentence-level evaluations. While they achieve some level of reliability at the system level, they falter significantly when it comes to capturing human ratings on criteria such as informativeness, naturalness, and quality.

- Grammar-based Metrics (GBMs), encompassing readability and grammaticality measures, present an alternative by focusing on intrinsic properties of the generated text rather than direct comparisons with reference texts. However, they have shortcomings of their own: they can be gamed by output that is manipulated to boost grammar scores without becoming more relevant or informative.

- The correlation between automatic metrics and human judgment is system- and dataset-specific, emphasizing the necessity of context-aware evaluation methods.
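The gap between sentence-level and system-level agreement can be illustrated with a minimal sketch. It assumes clipped unigram precision as a simplified stand-in for a word-overlap metric (it is not BLEU, TER, or ROUGE) and uses Spearman rank correlation as one common way to compare metric scores with human ratings. The function names and the record layout `(system_name, metric_score, human_rating)` are hypothetical and chosen only for illustration.

```python
from collections import Counter
from statistics import mean


def unigram_precision(candidate: str, reference: str) -> float:
    """Clipped unigram precision: a toy word-overlap score, not a full BLEU."""
    cand_tokens = candidate.lower().split()
    if not cand_tokens:
        return 0.0
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(cand_tokens)
    # Each candidate word is credited at most as often as it occurs in the reference.
    overlap = sum(min(n, ref_counts[w]) for w, n in cand_counts.items())
    return overlap / len(cand_tokens)


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = shared
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; returns NaN if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return float("nan")
    return cov / (vx * vy) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def sentence_and_system_correlation(records):
    """records: iterable of (system_name, metric_score, human_rating).

    Returns (sentence-level rho, system-level rho), where the system level
    correlates per-system mean scores instead of individual outputs.
    """
    records = list(records)
    sentence_rho = spearman([m for _, m, _ in records],
                            [h for _, _, h in records])
    by_system = {}
    for name, metric, human in records:
        by_system.setdefault(name, []).append((metric, human))
    sys_metric = [mean(m for m, _ in rows) for rows in by_system.values()]
    sys_human = [mean(h for _, h in rows) for rows in by_system.values()]
    return sentence_rho, spearman(sys_metric, sys_human)


# Made-up toy data (not results from the paper): the metric orders the three
# systems correctly on average but misorders outputs within each system.
toy_records = [
    ("A", 0.1, 2), ("A", 0.3, 1),
    ("B", 0.4, 4), ("B", 0.6, 3),
    ("C", 0.7, 6), ("C", 0.9, 5),
]
sentence_rho, system_rho = sentence_and_system_correlation(toy_records)
print(f"sentence-level rho = {sentence_rho:.3f}, system-level rho = {system_rho:.3f}")
```

Averaging per system smooths out the per-output disagreements, so in this toy setup the system-level correlation is higher than the sentence-level one. A real comparison would need actual metric implementations and many more rated outputs than this sketch uses.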

Implications and Future Directions

The research demonstrates a pressing need for more sophisticated evaluation methodologies in NLG. Current metrics can produce evaluations that do not reflect the true effectiveness of NLG systems, so developing metrics that align better with human quality judgments is crucial for both practical and theoretical progress in the field. Future research directions may include the exploration of:

- Context-sensitive evaluation, where metrics assess generated text within its conversational or narrative context rather than as standalone sentences, which could provide a deeper understanding of content relevance.

- Extrinsic evaluation metrics that focus on task success, that is, on how effective the outputs are in real-world scenarios.

- Reference-less metrics that assess semantic content fidelity and overall quality without relying on predefined human-written examples. These could be based on sophisticated machine learning techniques, such as neural networks, to estimate the quality of outputs from the semantic representation directly.

Conclusion

This paper highlights crucial limitations in existing evaluation practices for NLG and calls for innovative approaches that better reflect human judgment. While current automatic metrics offer convenience and speed, they lack the robustness needed for accurate and reliable assessment of NLG systems. More nuanced and multi-faceted evaluation strategies are needed to advance both the evaluation and the development of NLG technologies.