An Analysis of EAST: An Efficient and Accurate Scene Text Detector

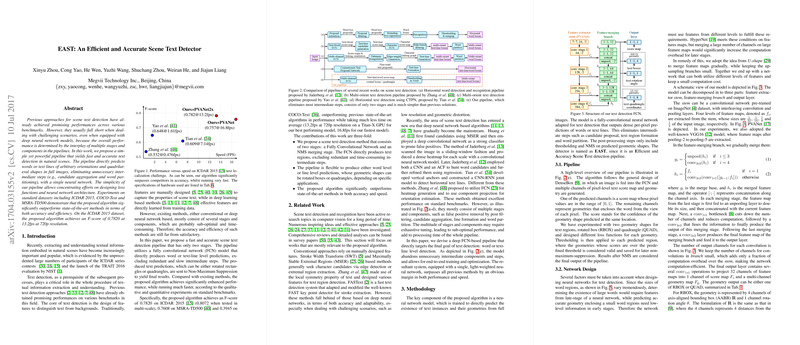

The paper "EAST: An Efficient and Accurate Scene Text Detector" presents a streamlined yet robust approach to detecting text in natural scenes. Earlier approaches, even those built on deep neural networks, often struggle in challenging real-world scenes because their pipelines are composed of multiple stages and components, each of which can be suboptimal and slow. The EAST (Efficient and Accurate Scene Text) detector simplifies this workflow by using a single neural network to directly predict words or text lines of arbitrary orientation in full images. Eliminating the intermediate steps improves both the speed and the accuracy of text detection.

Methodology

The proposed model follows a two-stage pipeline: a fully convolutional network (FCN) that produces dense per-pixel predictions, followed by a non-maximum suppression (NMS) step that merges them into final detections. The network is trained to output text regions either as rotated rectangles (RBOX) or as quadrangles (QUAD); the choice depends on the application. RBOX geometry consists of four channels describing an axis-aligned bounding box (the distances from a pixel to the four box edges) plus one rotation-angle channel, while QUAD geometry uses eight channels that hold the coordinate shifts between the pixel and the four vertices of the quadrangle.
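To make the two geometry formats concrete, the following minimal sketch decodes a single pixel's prediction into four corner points. The function names, the (top, right, bottom, left) ordering of the distances, the sign convention of the angle, and the "vertex = pixel + offset" convention are all assumptions chosen for illustration; they are not taken from the paper's implementation.

```python
import math

def rbox_to_quad(x, y, dists, theta):
    """Decode one pixel's RBOX prediction into four (x, y) corners.

    x, y  : pixel location in image coordinates (y grows downward)
    dists : (top, right, bottom, left) distances from the pixel to the
            box edges, measured in the box's own frame (assumed order)
    theta : rotation angle in radians (sign convention is an assumption)
    """
    d_top, d_right, d_bottom, d_left = dists
    # Corners relative to the pixel before rotation.
    local = [
        (-d_left, -d_top),      # top-left
        (d_right, -d_top),      # top-right
        (d_right, d_bottom),    # bottom-right
        (-d_left, d_bottom),    # bottom-left
    ]
    c, s = math.cos(theta), math.sin(theta)
    # Rotate each corner around the pixel, then shift to image coordinates.
    return [(x + c * dx - s * dy, y + s * dx + c * dy) for dx, dy in local]

def quad_from_offsets(x, y, offsets):
    """Decode one pixel's QUAD prediction: eight numbers read as
    (dx1, dy1, ..., dx4, dy4) shifts from the pixel to each vertex."""
    return [(x + offsets[2 * i], y + offsets[2 * i + 1]) for i in range(4)]
```

With `theta = 0`, the call `rbox_to_quad(10.0, 10.0, (2.0, 3.0, 4.0, 5.0), 0.0)` yields the axis-aligned box with corners (5, 8), (13, 8), (13, 14), and (5, 14); a nonzero angle rotates the same 8-by-6 box around the pixel.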

Pipeline and Network Design:

- Feature Extraction and Merging: The model uses a feature extraction stem based on an architecture such as VGG16 or PVANet. It merges feature maps from different scales so that both small and large text instances can be detected.

- Output Generation: The final layers project merged feature maps into a dense per-pixel text score map and geometric descriptors, representing either RBOX or QUAD outputs.

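- Loss Functions: A scale-invariant IoU loss (combined with a rotation-angle term) for RBOX and a scale-normalized smoothed-L1 loss for QUAD keep the geometry loss from being dominated by large text regions, so learning remains robust across text instances of various scales.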
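The geometry losses named above can be sketched per pixel as follows. This is an illustrative reading of the loss descriptions, not the authors' code: the function names are hypothetical, the default angle weight `lambda_theta=10.0` is an assumption, and the QUAD loss here assumes predicted and ground-truth vertices are already listed in the same order.

```python
import math

def aabb_iou_loss(pred, gt):
    """-log(IoU) of two boxes that share the same reference pixel.
    pred, gt: (top, right, bottom, left) distances, all positive."""
    p_top, p_right, p_bottom, p_left = pred
    g_top, g_right, g_bottom, g_left = gt
    area_pred = (p_top + p_bottom) * (p_right + p_left)
    area_gt = (g_top + g_bottom) * (g_right + g_left)
    # Both boxes contain the pixel, so the overlap is built side by side.
    w = min(p_right, g_right) + min(p_left, g_left)
    h = min(p_top, g_top) + min(p_bottom, g_bottom)
    inter = w * h
    union = area_pred + area_gt - inter
    return -math.log(inter / union)

def angle_loss(theta_pred, theta_gt):
    """Rotation term: zero when the angles agree."""
    return 1.0 - math.cos(theta_pred - theta_gt)

def rbox_geometry_loss(pred, gt, theta_pred, theta_gt, lambda_theta=10.0):
    # lambda_theta is an assumed weighting between the two terms.
    return aabb_iou_loss(pred, gt) + lambda_theta * angle_loss(theta_pred, theta_gt)

def smoothed_l1(x):
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5

def quad_loss(pred, gt):
    """Smoothed-L1 over the eight coordinates, normalized by the
    shortest side of the ground-truth quadrangle.
    pred, gt: four (x, y) vertices each, in matching order (assumption)."""
    shortest = min(
        math.hypot(gt[i][0] - gt[(i + 1) % 4][0], gt[i][1] - gt[(i + 1) % 4][1])
        for i in range(4)
    )
    total = sum(
        smoothed_l1(p - g) for pv, gv in zip(pred, gt) for p, g in zip(pv, gv)
    )
    return total / (8.0 * shortest)
```

Because IoU is a ratio of areas, multiplying every distance in `pred` and `gt` by the same factor leaves `aabb_iou_loss` unchanged, which is the scale invariance the bullet refers to. The QUAD loss is only normalized by the shortest side rather than being exactly invariant.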

Experimental Results

The proposed model was benchmarked on three datasets: ICDAR 2015, COCO-Text, and MSRA-TD500. The results indicate improvements over prior state-of-the-art methods on all three:

- ICDAR 2015: An F-score of 0.7820 with single-scale testing at 720p resolution and 0.8072 with multi-scale testing; the latter exceeds the closest competitor by nearly 0.16 in absolute F-score.

- COCO-Text: An F-score of 0.3945, ahead of previous methods, indicating that the approach also holds up on a large-scale dataset.

- MSRA-TD500: An F-score of 0.7608, marginally surpassing the best prior result on a benchmark that requires line-level detection of text in varying orientations.

Computational Efficiency:

The EAST detector stands out for its computational efficiency. On a Titan X GPU, it reaches a frame rate of up to 16.8 FPS, which brings it close to real-time operation. This contrasts sharply with the slower, more complex multi-stage models in previous literature.

Implications and Future Directions

The simplicity of the EAST pipeline enables direct and efficient detection of text, making it a strong candidate for applications ranging from autonomous driving to augmented reality. Predicting text regions in a single pass avoids the intermediate stages of earlier pipelines, which can be suboptimal and time-consuming. In practice, this opens avenues for deploying real-time text detection systems on edge and mobile devices.

On the research side, the work suggests several future directions:

- Curved Text Detection: Developing a geometry formulation that can handle curved text could expand the model's versatility.

- Integration with Recognizers: Combining the EAST detector with optical character recognition (OCR) systems could streamline the pipeline for end-to-end text processing.

- General Object Detection: Extending similar simplifications to general object detection tasks might yield analogous gains in efficiency and accuracy.

In summary, the EAST detector shows how simplifying a complex workflow can yield substantial gains in both accuracy and speed on standard benchmarks. The paper's contribution is a significant step toward bridging the gap between research advances and practical deployment in scene text detection.