Overview of FAST: Faster Arbitrarily-Shaped Text Detector

The paper "FAST: Faster Arbitrarily-Shaped Text Detector with Minimalist Kernel Representation" presents a framework for efficient and accurate scene text detection. Its two main contributions are a minimalist kernel representation (MKR) and a family of backbones designed specifically for text detection through neural architecture search (NAS). FAST targets the central difficulty of detecting arbitrarily-shaped text quickly without sacrificing accuracy, which makes it suitable for real-time applications.

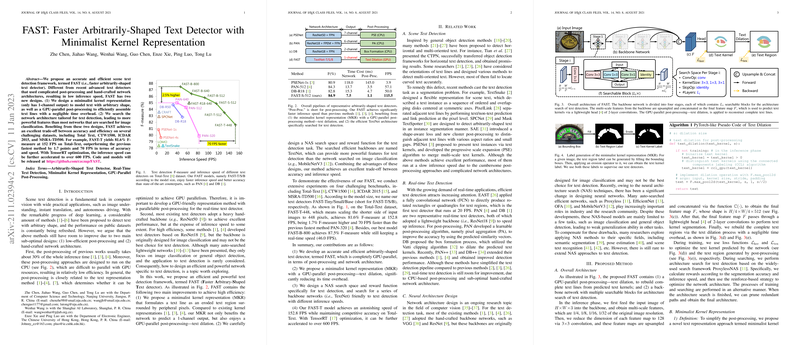

FAST is motivated by two weaknesses of prior text detection methods: slow CPU-based post-processing and hand-crafted network architectures. By replacing both with an integrated, GPU-efficient pipeline, FAST improves the speed of text detection while remaining accurate across datasets and text orientations.

Key Innovations and Methodology

- Minimalist Kernel Representation (MKR):

  - MKR reduces the text representation to a single-channel output of eroded text kernels, in contrast to the multi-channel outputs used by existing methods. This lowers the computational load and speeds up inference.

  - Post-processing uses a GPU-accelerated text dilation step that reconstructs full text lines from the eroded kernels. This avoids the latency of traditional CPU-bound post-processing.

- Architecture Search via NAS:

  - FAST uses NAS to derive a family of efficient backbones, called TextNet, that are optimized for text detection rather than generic image classification. These backbones are built from reparameterizable convolutions, whose training-time branches can be merged into a single convolution for inference.

  - The search uses a custom reward function that balances segmentation accuracy against inference speed, so it can produce architectures for different computational budgets and application needs.

- Performance and Benchmarking:

  - FAST improves on the previous state of the art in both accuracy and processing speed on several datasets, including Total-Text, CTW1500, ICDAR 2015, and MSRA-TD500.

  - For example, the paper reports an F-measure of 81.6% at 152 FPS on the Total-Text dataset. With TensorRT, inference can be accelerated further to over 600 FPS.
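
The erode, label, dilate idea behind MKR can be illustrated with a small sketch. This is not the authors' implementation: it runs on plain Python lists rather than on the GPU, the helper names (`max_pool`, `erode`, `label_components`) and the 3x3 window are assumptions chosen for illustration, and it assumes that overlapping dilated regions are resolved by keeping the larger instance id.

```python
from collections import deque


def max_pool(grid, k):
    """Stride-1 max over each k x k window, clipped at the border (a dilation)."""
    h, w, r = len(grid), len(grid[0]), k // 2
    return [
        [
            max(
                grid[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def erode(mask, k):
    """Erosion of a 0/1 mask, written as a max-pool on the inverted mask."""
    inverted = [[1 - v for v in row] for row in mask]
    return [[1 - v for v in row] for row in max_pool(inverted, k)]


def label_components(binary):
    """4-connected component labelling; returns (label map, instance count)."""
    h, w = len(binary), len(binary[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if not binary[y][x] or labels[y][x]:
                continue
            count += 1
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:  # breadth-first flood fill of one kernel
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not labels[ny][nx]:
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


# Toy input: two 5x5 text regions separated by a one-pixel gap.
mask = [
    [1 if 1 <= y <= 5 and (1 <= x <= 5 or 7 <= x <= 11) else 0 for x in range(13)]
    for y in range(7)
]
kernels = erode(mask, 3)                   # shrunken single-channel kernel map
labels, count = label_components(kernels)  # one id per text instance
restored = max_pool(labels, 3)             # "text dilation": grow the ids back out
print(count)
```

Because both erosion and dilation are expressed as pooling operations, the same steps map naturally onto GPU tensor primitives, which is the property the summary attributes to MKR. Eroding first is what keeps the two adjacent regions from merging into a single instance.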
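
The speed-aware reward used in the architecture search can be sketched in the same hedged spirit. The summary does not give the formula, so the multiplicative form below, the exponent `w`, the `target_fps` parameter, and the candidate numbers are all assumptions made for illustration; they are not taken from the paper.

```python
def reward(accuracy, fps, target_fps, w):
    """Hypothetical reward: accuracy scaled by a soft speed term.

    w = 0 ignores speed entirely; larger w favours faster candidates.
    """
    return accuracy * (fps / target_fps) ** w


def pick_best(candidates, target_fps, w):
    """Return the name of the candidate with the highest reward."""
    best = max(
        candidates,
        key=lambda c: reward(c["accuracy"], c["fps"], target_fps, w),
    )
    return best["name"]


# Made-up candidates for illustration only; these are not results from the paper.
candidates = [
    {"name": "small", "accuracy": 0.78, "fps": 150.0},
    {"name": "base", "accuracy": 0.82, "fps": 100.0},
    {"name": "large", "accuracy": 0.83, "fps": 50.0},
]

for w in (0.0, 0.1, 0.5):
    print(w, pick_best(candidates, target_fps=100.0, w=w))
```

Varying `w` shifts the winner from the most accurate candidate toward the fastest one, which is how a single search procedure can yield backbones for different computational budgets.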

Implications and Future Directions

A minimalist kernel representation is more than an efficient design choice: it makes real-time text detection more practical in domains such as augmented reality, autonomous driving, and instant translation. Because MKR aligns the text representation with the GPU's strengths, it opens the way to further work on video stream processing and other low-latency settings.

Moreover, using NAS to derive task-specific architectures points toward more customized solutions in computer vision. The same approach could apply to other domains where specialized architectures are needed for optimal performance.

Looking forward, the principles in this paper could be extended to other domains that require real-time processing of complex visual information. Two promising directions are integrating MKR into more general object detection frameworks and extending the NAS approach to generate models optimized for diverse hardware platforms.

In summary, FAST addresses the constraints of real-time text detection by narrowing the gap between accuracy and efficiency. The framework sets a strong benchmark for text detection and offers a template for other detection tasks in computer vision.