Overview of "A Simple, Fast Diverse Decoding Algorithm for Neural Generation"

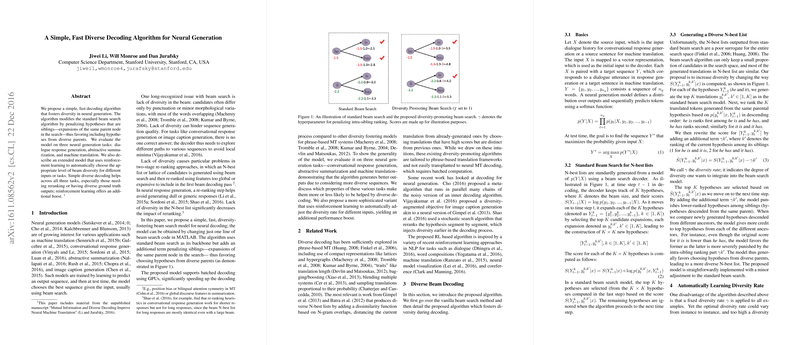

This paper presents a decoding algorithm designed to increase diversity in neural generation tasks. The algorithm is a simple, efficient modification of standard beam search: it penalizes sibling hypotheses (expansions of the same parent node), which encourages the beam to retain hypotheses that descend from different parent nodes. The authors evaluate the method on several neural generation tasks, including dialogue response generation, abstractive summarization, and machine translation. They also introduce an extended model that uses reinforcement learning to adjust the level of diversity for different tasks and inputs.
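The sibling penalty can be illustrated with a single beam-search step. The sketch below is a minimal illustration, not the authors' implementation. It assumes the penalty is the diversity rate `gamma` multiplied by a candidate's rank among its siblings, and that the penalty is used only to select candidates while the unpenalized log-probability is carried forward. The function name `diverse_beam_step`, the callback `next_logprobs`, and the toy numbers are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Hypothesis = Tuple[List[str], float]  # (tokens so far, cumulative log-probability)


def diverse_beam_step(
    beam: List[Hypothesis],
    next_logprobs: Callable[[List[str]], Dict[str, float]],
    beam_size: int,
    gamma: float,
) -> List[Hypothesis]:
    """Expand every hypothesis by one token and keep the best `beam_size` candidates."""
    candidates = []
    for tokens, score in beam:
        # Rank this parent's expansions (the siblings) from best to worst.
        siblings = sorted(
            next_logprobs(tokens).items(), key=lambda kv: kv[1], reverse=True
        )
        for rank, (token, logprob) in enumerate(siblings[:beam_size], start=1):
            raw = score + logprob
            # Assumed penalty: gamma times the sibling rank (1 = best sibling).
            candidates.append((raw - gamma * rank, raw, tokens + [token]))
    # Select by penalized score, but carry the raw score forward (an assumption).
    candidates.sort(key=lambda c: c[0], reverse=True)
    return [(tokens, raw) for _, raw, tokens in candidates[:beam_size]]


# Toy next-token model with made-up log-probabilities, for illustration only.
TOY_MODEL = {
    "he": {"is": -0.1, "has": -0.5, "was": -3.0},
    "it": {"is": -0.6, "has": -2.0},
}


def toy_next_logprobs(tokens: List[str]) -> Dict[str, float]:
    return TOY_MODEL[tokens[-1]]


start_beam = [(["he"], -1.0), (["it"], -1.2)]
plain = diverse_beam_step(start_beam, toy_next_logprobs, beam_size=2, gamma=0.0)
diverse = diverse_beam_step(start_beam, toy_next_logprobs, beam_size=2, gamma=1.0)
```

With `gamma=0.0` the step reduces to ordinary beam search and, in this toy setup, both survivors ("he is", "he has") come from the same parent. With `gamma=1.0` the second-ranked sibling is penalized enough that "it is" replaces "he has", so the beam holds one hypothesis from each parent.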

Core Contributions and Experimental Setup

- Diverse Decoding Model: The primary contribution is a diversity-promoting variant of beam search. It adds an intra-sibling ranking penalty: candidates that expand the same parent are ranked against each other, and lower-ranked siblings are penalized, so the beam tends to keep hypotheses from different parents. The change to the original beam search algorithm is small, which makes it easy to implement and integrate into existing systems.

- Task Evaluations: The paper evaluates the proposed model on three distinct neural generation tasks:

  - Dialogue Response Generation: Evaluated on the OpenSubtitles dataset, the algorithm yields notable gains, particularly when combined with reranking and when generating longer responses.

  - Abstractive Summarization: Both single-sentence and multi-sentence summarization were tested. The model produced summaries with improved ROUGE scores, especially in settings that incorporate global document-level features through reranking.

  - Machine Translation: Evaluated on the WMT'14 English-to-German data, the diversity-promoting algorithm showed modest improvements, with more pronounced benefits in reranking settings.

- Reinforcement Learning (RL) Extension: A reinforcement learning model, termed diverseRL, adjusts the diversity rate (γ) dynamically based on the characteristics of each input. The diversity rate is optimized directly against the evaluation metric, which can yield better performance than a single fixed diversity rate.
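To make the idea of learning an input-dependent diversity rate concrete, the following is a minimal sketch of a REINFORCE-style policy over a small set of candidate γ values. It is an illustration under stated assumptions, not the diverseRL model itself: the overview does not specify the policy architecture, input features, or candidate values, so the linear softmax policy, the `GAMMAS` list, and the class name `GammaPolicy` are all hypothetical. The reward stands in for whatever evaluation metric the task uses (for example, ROUGE or BLEU of the decoded output).

```python
import math
import random
from typing import List, Sequence

GAMMAS = [0.0, 0.5, 1.0, 2.0]  # hypothetical candidate diversity rates


def softmax(logits: Sequence[float]) -> List[float]:
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class GammaPolicy:
    """Linear softmax policy that picks a diversity rate from input features."""

    def __init__(self, n_features: int, gammas: Sequence[float],
                 lr: float = 0.1, seed: int = 0) -> None:
        self.gammas = list(gammas)
        self.weights = [[0.0] * n_features for _ in self.gammas]
        self.lr = lr
        self.rng = random.Random(seed)

    def probs(self, features: Sequence[float]) -> List[float]:
        logits = [sum(w * f for w, f in zip(row, features)) for row in self.weights]
        return softmax(logits)

    def sample(self, features: Sequence[float]) -> int:
        """Return the index into `self.gammas` of the sampled diversity rate."""
        p = self.probs(features)
        return self.rng.choices(range(len(p)), weights=p)[0]

    def update(self, features: Sequence[float], action: int,
               reward: float, baseline: float = 0.0) -> None:
        """One REINFORCE step: move along (reward - baseline) * grad log pi(action)."""
        p = self.probs(features)
        advantage = reward - baseline
        for a, row in enumerate(self.weights):
            # Gradient of log-softmax with respect to logit a.
            grad_logit = (1.0 if a == action else 0.0) - p[a]
            for j, f in enumerate(features):
                row[j] += self.lr * advantage * grad_logit * f
```

In a training loop under these assumptions, one would sample an index with `policy.sample(features)`, decode with `policy.gammas[index]`, score the output with the task metric, and pass that score to `policy.update` as the reward.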

Implications and Future Outlook

The implications of this paper are both practical and theoretical. Practically, the approach improves the production of varied and contextually appropriate outputs across a range of neural generation tasks. It is particularly useful in settings where conventional decoding struggles to produce outputs that differ in non-trivial ways. Theoretically, the paper highlights the importance of calibrating diversity in beam search, suggesting that the best level of diversity depends on both the task and the individual input.

Future work could explore further customization and application of the RL framework in other contexts, potentially incorporating more sophisticated diversity metrics or domain-specific constraints. Extending the model to other neural generation domains, such as image captioning, may also reveal further uses for diverse decoding strategies.

In conclusion, this paper describes a computationally efficient way to increase the diversity of outputs in neural generation. By combining a fixed diversity rate with an adaptive RL approach, it offers a methodology that other researchers can build on, refine, and apply to other generation tasks.