Debiasing Word Embeddings: A Formal Overview

The paper "Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings" by Bolukbasi et al. addresses the gender biases that word embeddings absorb from their training data. Word embeddings such as word2vec are a core component of many NLP tasks. Because they are trained on large text corpora, however, they capture, and can amplify, the biases present in that text. The paper makes two main contributions: metrics that quantify gender bias in an embedding, and algorithms that reduce this bias while preserving the embedding's utility.

Metrics for Quantifying Bias

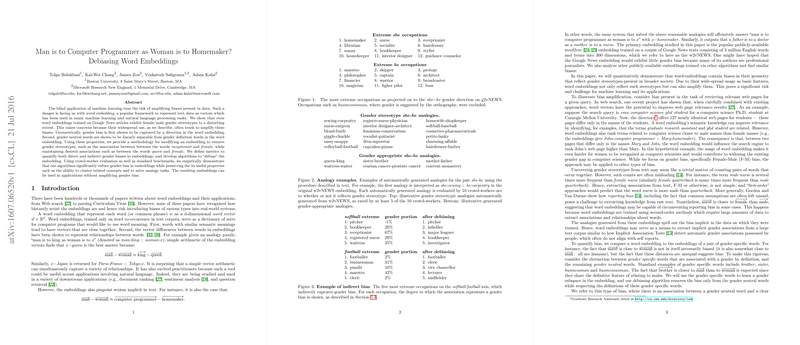

The authors first establish that gender bias is present in word embeddings, using word2vec trained on Google News as their primary example. They show that the embedding reflects common gender stereotypes, for instance by associating "man" with "computer programmer" and "woman" with "homemaker". They quantify this bias with two metrics: direct bias and indirect bias.

- Direct Bias: The authors first identify a gender direction by applying principal component analysis (PCA) to gender-definitional word pairs such as she/he and woman/man. Direct bias then measures how strongly gender-neutral words project onto this direction. For example, "nurse" lies much closer to the female end of the gender direction than to the male end.

- Indirect Bias: This measures how much of the similarity between two gender-neutral words comes from their shared gender component. For instance, "receptionist" is more similar to "softball" than to "football", plausibly because "receptionist" and "softball" share female associations.
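Both metrics can be sketched on tiny hypothetical 3-dimensional vectors standing in for real word2vec embeddings. The gender direction `g` is taken as the top principal component of the pair-centered definitional vectors, computed here with power iteration so that only the standard library is needed. Direct bias averages |cos(w, g)|^c over gender-neutral words. Indirect bias follows our reading of the paper's definition, (w·v − cos(w⊥, v⊥)) / (w·v) for unit vectors w and v, where w⊥ is w with its component along `g` removed. All function names and vectors below are illustrative assumptions.

```python
import math

# Minimal list-based vector helpers so the sketch needs only the standard library.
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def scale(u, s):
    return [a * s for a in u]

def cos(u, v):
    return dot(u, v) / (norm(u) * norm(v))

def gender_direction(pairs, iters=100):
    """Top principal component of the pair-centered vectors, via power iteration."""
    centered = []
    for a, b in pairs:
        mu = scale([x + y for x, y in zip(a, b)], 0.5)  # midpoint of the pair
        centered += [sub(a, mu), sub(b, mu)]
    dim = len(centered[0])
    # Second-moment matrix C = sum of outer products c c^T of the centered vectors.
    C = [[sum(c[i] * c[j] for c in centered) for j in range(dim)] for i in range(dim)]
    g = [1.0] * dim  # arbitrary start; the sign of the resulting direction is arbitrary
    for _ in range(iters):
        g = [dot(row, g) for row in C]
        g = scale(g, 1.0 / norm(g))
    return g

def direct_bias(neutral_vecs, g, c=1.0):
    """Mean of |cos(w, g)|**c over gender-neutral word vectors."""
    return sum(abs(cos(w, g)) ** c for w in neutral_vecs) / len(neutral_vecs)

def indirect_bias(w, v, g):
    """Share of the similarity w.v attributable to the unit gender direction g."""
    w, v = scale(w, 1.0 / norm(w)), scale(v, 1.0 / norm(v))
    w_perp = sub(w, scale(g, dot(w, g)))  # remove the gender component
    v_perp = sub(v, scale(g, dot(v, g)))
    return (dot(w, v) - cos(w_perp, v_perp)) / dot(w, v)

# Hypothetical 3-d toy vectors; axis 0 plays the role of gender.
pairs = [([1.0, 0.2, 0.1], [-1.0, 0.2, 0.1]),   # he / she
         ([0.9, 0.1, 0.3], [-0.9, 0.1, 0.3])]   # man / woman
nurse, programmer = [-0.6, 0.5, 0.6], [0.5, 0.7, 0.5]
receptionist = [-0.5, 0.6, 0.6]
softball, football = [-0.6, 0.8, 0.4], [0.6, 0.8, 0.4]

g = gender_direction(pairs)
print(direct_bias([nurse, programmer, receptionist], g))
print(cos(receptionist, softball), cos(receptionist, football))
print(indirect_bias(receptionist, softball, g), indirect_bias(receptionist, football, g))
```

In this toy data "softball" and "football" differ only along the gender axis. Once that axis is removed, "receptionist" is equally similar to both, so the positive indirect bias for softball and the negative one for football show that the gender component alone separates them in the raw space.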

Debiasing Algorithms

To mitigate these biases, the authors propose two algorithms: Hard Debiasing and Soft Debiasing.

- Hard Debiasing: This method has two steps, neutralize and equalize. Neutralize removes the projection of every gender-neutral word onto the gender direction and re-normalizes the vector, so these words have zero gender component. Equalize then adjusts pairs of gender-specific words so that every gender-neutral word is equidistant from both words in a pair (e.g., "programmer" ends up equally distant from "man" and "woman"). Crowd-worker experiments and benchmark tasks show a substantial reduction in gender stereotypes.

- Soft Debiasing: This algorithm learns a linear transformation of the embedding that balances two goals: preserving the original inner products between word vectors and reducing the projection of gender-neutral words onto the gender subspace. The problem is solved as a semi-definite program. A tuning parameter controls the trade-off, so the degree of debiasing can be adapted to the application.
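The neutralize and equalize steps of hard debiasing can be sketched for a one-dimensional gender subspace as follows. This is our reading of the equations, assuming unit-length embeddings and an already-known unit gender direction `g`. Equalize keeps the gender-free part `nu` shared by the pair and places the two words at equal and opposite offsets along `g`, scaled so both stay unit length. The function names and toy vectors are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def scale(u, s):
    return [a * s for a in u]

def unit(u):
    return scale(u, 1.0 / norm(u))

def project(u, g):
    """Component of u along the unit gender direction g."""
    return scale(g, dot(u, g))

def neutralize(w, g):
    """Zero the gender component of a gender-neutral word, then re-normalize."""
    return unit(sub(w, project(w, g)))

def equalize(a, b, g):
    """Place a gender-specific pair symmetrically about the gender-neutral subspace."""
    a, b = unit(a), unit(b)
    mu = scale(add(a, b), 0.5)
    mu_g = project(mu, g)
    nu = sub(mu, mu_g)  # gender-free part shared by both words
    r = math.sqrt(max(0.0, 1.0 - dot(nu, nu)))  # offset length that keeps outputs unit length
    # Assumes a and b differ along g; otherwise unit() below divides by zero.
    return [add(nu, scale(unit(sub(project(w, g), mu_g)), r)) for w in (a, b)]

def dist(u, v):
    return norm(sub(u, v))

# Hypothetical toy vectors; axis 0 is the gender direction.
g = [1.0, 0.0, 0.0]
programmer = neutralize([0.5, 0.7, 0.5], g)
man, woman = equalize([0.9, 0.3, 0.1], [-0.7, 0.5, 0.2], g)

print(dot(programmer, g))                              # 0.0: no gender component left
print(dist(programmer, man), dist(programmer, woman))  # equal up to floating-point rounding
```

Because `man` and `woman` share the same gender-free part and differ only by a sign along `g`, any vector with zero gender component is automatically equidistant from both.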

Evaluation and Implications

The debiased embeddings were evaluated using:

- Analogy Tasks: Testing the embeddings on gender analogy tasks. For example, a biased embedding may complete "he is to doctor as she is to ___" with "nurse"; after debiasing, the expected completion is a gender-neutral word such as "physician".

- Standard Benchmarks: The word-similarity benchmarks RG (Rubenstein-Goodenough) and WS (WordSim-353), together with the analogy tests of Mikolov et al., showed no significant performance degradation after debiasing.
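The effect on the "he is to doctor as she is to ___" example can be illustrated with toy vectors. This sketch uses the standard vector-offset completion (3CosAdd, as in Mikolov et al.), which returns the word x maximizing cos(x, doctor − he + she); it differs from the procedure the paper uses to generate analogies for crowd-worker rating. For brevity only the neutralize step is applied, to the hypothetical gender-neutral words; "he" and "she" are left unchanged. All vectors and names are illustrative assumptions.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def unit(u):
    n = norm(u)
    return [a / n for a in u]

def neutralize(w, g):
    """Remove the component along the unit gender direction g and re-normalize."""
    k = dot(w, g)
    return unit([a - k * b for a, b in zip(w, g)])

def analogy(a, b, c, vocab):
    """3CosAdd: return x maximizing cos(x, b - a + c), i.e. 'a is to b as c is to x'."""
    target = [vb - va + vc for va, vb, vc in zip(vocab[a], vocab[b], vocab[c])]
    candidates = [w for w in vocab if w not in (a, b, c)]
    # All vocab vectors are unit length, so ranking by dot product equals ranking by cosine.
    return max(candidates, key=lambda w: dot(vocab[w], target))

# Hypothetical toy vectors; axis 0 is the gender direction g.
g = [1.0, 0.0, 0.0]
raw = {
    "he": [1.0, 0.1, 0.1], "she": [-1.0, 0.1, 0.1],
    "doctor": [0.4, 0.8, 0.3], "physician": [0.3, 0.85, 0.25],
    "nurse": [-0.7, 0.5, 0.6],
}
vocab = {w: unit(v) for w, v in raw.items()}
neutral = {"doctor", "physician", "nurse"}
debiased = {w: neutralize(v, g) if w in neutral else v for w, v in vocab.items()}

print(analogy("he", "doctor", "she", vocab))     # nurse
print(analogy("he", "doctor", "she", debiased))  # physician
```

After neutralizing, the she − he offset lies entirely along `g`, which the neutral candidates no longer have, so the answer is simply the candidate closest to "doctor" in the gender-free subspace.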

The algorithms effectively reduce gender bias while maintaining useful properties of word embeddings, like clustering related concepts and solving analogy tasks.

Practical and Theoretical Implications

This work has significant implications for both practical applications and theoretical research:

- Practical Use: Embeddings are widely used in web search, recommendation systems, and various NLP applications. Debiased embeddings can help prevent the amplification of gender stereotypes in these systems, thus promoting fairness.

- Theoretical Research: This research sets a precedent for addressing other forms of bias, such as racial or ethnic bias, in word embeddings. The authors suggest that similar principles could be applied to reduce biases beyond gender.

Future Directions

Future research could focus on:

- Extending the Algorithms: Adapting the debiasing framework to other types of biases, such as those based on race or culture.

- Cross-Linguistic Analysis: Evaluating and mitigating biases in word embeddings across different languages, especially those with grammatical gender.

- Real-World Evaluation: Testing debiased embeddings in real-world applications to measure their impact on reducing bias in deployed systems.

In conclusion, Bolukbasi et al.'s paper presents a principled approach to identifying and mitigating gender bias in word embeddings, helping to keep machine learning systems from inadvertently perpetuating societal stereotypes. The proposed methods are a significant step toward fairer AI systems.