- The paper introduces DWGF, a training-free framework that leverages pretrained latent diffusion models to solve inverse problems using a gradient flow approach.

- It formulates posterior sampling as a regularized Wasserstein gradient flow of the KL divergence in latent space, effectively balancing fidelity and diversity.

- Experimental results show competitive PSNR and LPIPS metrics, though the method produces diverse yet blurry reconstructions and scores a higher (worse) FID than the baselines.

Gradient Flow Methods for Inverse Problems with Latent Diffusion Priors

Introduction

This paper introduces Diffusion-regularized Wasserstein Gradient Flow (DWGF), a training-free framework for solving ill-posed inverse problems by leveraging pretrained latent diffusion models (LDMs) as priors. The approach is motivated by the limitations of hand-crafted priors in high-dimensional settings and the computational inefficiency of pixel-space diffusion models. DWGF formulates posterior sampling as a regularized Wasserstein gradient flow (WGF) of the Kullback-Leibler (KL) divergence in the latent space, providing a principled method for Bayesian inference in inverse problems.

Methodology

Given an observation $y = A(x_0) + \epsilon$ with known forward operator $A$ and Gaussian noise $\epsilon$, the goal is to sample from the posterior $p(x_0 \mid y) \propto p(y \mid x_0)\, p_{\text{data}}(x_0)$. In the context of LDMs, the prior is defined on a latent variable $z_0$, and the posterior is approximated as $q_\mu(x_0 \mid y) = \int p_{\phi^-}(x_0 \mid z_0)\, \mu(z_0 \mid y)\, dz_0$.
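As a concrete illustration of the measurement model, here is a minimal sketch of two forward operators matching the tasks evaluated later (box inpainting and 8× super-resolution), applied to a tiny grayscale image stored as nested lists. The function names, the average-pooling choice for downsampling, and the noise level are illustrative assumptions and are not taken from the paper.

```python
import random

def box_inpaint(x, top, left, height, width):
    """Forward operator A: zero out a rectangular box of pixels."""
    return [
        [0.0 if top <= i < top + height and left <= j < left + width else v
         for j, v in enumerate(row)]
        for i, row in enumerate(x)
    ]

def downsample(x, factor):
    """Forward operator A: average-pool by `factor` (an assumed kernel).
    Image height and width must be divisible by `factor`."""
    h, w = len(x), len(x[0])
    out = []
    for i in range(0, h, factor):
        row = []
        for j in range(0, w, factor):
            block = [x[a][b] for a in range(i, i + factor)
                     for b in range(j, j + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

def observe(x, forward, sigma, rng):
    """y = A(x) + eps, with eps ~ N(0, sigma^2 I)."""
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in forward(x)]

rng = random.Random(0)
# Hypothetical 16x16 test image with intensities in [0, 1].
x0 = [[(i * 16 + j) / 255.0 for j in range(16)] for i in range(16)]
y_inpaint = observe(x0, lambda x: box_inpaint(x, 4, 4, 8, 8), sigma=0.01, rng=rng)
y_sr = observe(x0, lambda x: downsample(x, 8), sigma=0.01, rng=rng)
```

Both operators discard information (the masked box, the high-frequency detail), which is what makes the inverse problem ill-posed and the prior necessary.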

DWGF seeks the optimal latent posterior $\mu^\star(z_0 \mid y)$ by minimizing a regularized functional:

$$\mu^\star(z_0 \mid y) \in \operatorname*{arg\,min}_{\mu} \; F[\mu(z_0 \mid y)] + \gamma R[\mu(z_0 \mid y)]$$

where F is the KL divergence between the approximate and true posteriors, and R is a regularization term based on the diffusion prior.

Wasserstein Gradient Flow

The optimization is performed via WGF in the space of probability measures:

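$$\frac{\partial \mu_t}{\partial t} = \nabla_{z_0} \cdot \left( \mu_t \nabla_{z_0} \frac{\delta L[\mu]}{\delta \mu} \right)$$

where $L[\mu] = F[\mu] + \gamma R[\mu]$. The corresponding ODE for particles is:

$$\frac{d z_{0,t}}{dt} = -\nabla_{z_0} \frac{\delta L[\mu]}{\delta \mu}$$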
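To make the particle ODE concrete, the following sketch integrates it with explicit Euler steps for a toy one-dimensional functional. The functional (a quadratic potential plus a quadratic interaction energy) is an assumption chosen because its first variation is available in closed form; it is not the DWGF objective. The measure-dependent term is estimated by averaging over the current particles.

```python
import random

def grad_first_variation(z, particle_mean, m):
    """Gradient of the first variation at z for the toy functional (assumed):
        L[mu] = E_mu[(z - m)^2 / 2] + 0.5 * E_{mu x mu}[(z - z')^2 / 2]
    The first variation is (z - m)^2 / 2 + E_{z'~mu}[(z - z')^2 / 2], so its
    gradient is (z - m) + (z - E_mu[z']). The expectation over mu is replaced
    by the empirical mean of the particles."""
    return (z - m) + (z - particle_mean)

def euler_flow(particles, m, step, n_steps):
    """Explicit Euler discretization of dz/dt = -grad(dL/dmu)(z)."""
    for _ in range(n_steps):
        mean = sum(particles) / len(particles)
        particles = [z - step * grad_first_variation(z, mean, m)
                     for z in particles]
    return particles

rng = random.Random(0)
init = [rng.gauss(0.0, 1.0) for _ in range(32)]
final = euler_flow(init, m=2.0, step=0.1, n_steps=200)
```

For this toy functional every particle contracts toward `m`. In DWGF the gradient additionally involves the likelihood and the diffusion prior, so the particles are not expected to collapse to a single point.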

Functional Derivatives

- The gradient of F is derived using the reparameterization trick and chain rule, with the intractable data score $\nabla_{x_0} \log p(x_0)$ approximated via the VAE encoder.

- The regularization R is a weighted KL divergence along the diffusion process, with gradients computed via Monte Carlo over particles.
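For orientation, the textbook first variation of a KL functional against a fixed target density $\pi$ is reproduced below. It is stated for a generic target; identifying $\pi$ with the paper's latent posterior, and ignoring the decoder and the regularizer, is a simplifying assumption made here for illustration.

```latex
\begin{aligned}
F[\mu] &= \mathrm{KL}(\mu \,\|\, \pi) = \int \mu(z)\,\log\frac{\mu(z)}{\pi(z)}\,dz, \\
\frac{\delta F}{\delta \mu}(z) &= \log\mu(z) - \log\pi(z) + 1, \\
\nabla_z \frac{\delta F}{\delta \mu}(z) &= \nabla_z \log\mu(z) - \nabla_z \log\pi(z).
\end{aligned}
```

If $\pi(z) \propto p(y \mid z)\,p(z)$, then $\nabla_z \log \pi(z) = \nabla_z \log p(y \mid z) + \nabla_z \log p(z)$, so the particle velocity combines a likelihood gradient, a prior score, and a term depending on the current particle density.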

Algorithmic Implementation

- The ODE is simulated with a particle-based approach: the flow's gradients are passed to the Adam optimizer as if they were gradients of a loss, which improves convergence over plain Euler steps.

- Deterministic encoding is used because the encoder's posterior variance is negligible, which simplifies the data score computation.

- The method is agnostic to the specific LDM architecture and can be extended to conditional settings.
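The idea of handing the flow's gradient field to Adam can be sketched as follows. This is a minimal pure-Python Adam update applied per particle and per coordinate, run on an assumed ill-conditioned quadratic potential. The names `adam_particle_flow`, `toy_grad`, `CURVATURE`, and `CENTER` are hypothetical, and the hyperparameters are illustrative rather than the paper's settings.

```python
import math
import random

def adam_particle_flow(particles, grad_fn, lr=0.05, beta1=0.9, beta2=0.999,
                       eps=1e-8, n_steps=1000):
    """Feed the flow's gradient field to Adam instead of taking Euler steps.
    `particles` is a list of coordinate lists; `grad_fn(z, particles)` returns
    the gradient of the first variation evaluated at particle z."""
    n, d = len(particles), len(particles[0])
    m = [[0.0] * d for _ in range(n)]  # first-moment estimates
    v = [[0.0] * d for _ in range(n)]  # second-moment estimates
    for t in range(1, n_steps + 1):
        grads = [grad_fn(z, particles) for z in particles]
        new_particles = []
        for i, (z, g) in enumerate(zip(particles, grads)):
            new_z = []
            for k in range(d):
                m[i][k] = beta1 * m[i][k] + (1 - beta1) * g[k]
                v[i][k] = beta2 * v[i][k] + (1 - beta2) * g[k] ** 2
                m_hat = m[i][k] / (1 - beta1 ** t)  # bias correction
                v_hat = v[i][k] / (1 - beta2 ** t)
                new_z.append(z[k] - lr * m_hat / (math.sqrt(v_hat) + eps))
            new_particles.append(new_z)
        particles = new_particles
    return particles

# Toy ill-conditioned potential (assumption, not the DWGF objective):
# V(z) = 0.5 * sum_k a_k * (z_k - c_k)^2 with curvatures 1 and 100.
CURVATURE = [1.0, 100.0]
CENTER = [1.0, -2.0]

def toy_grad(z, particles):
    """Gradient of V at z; `particles` is unused for this toy potential."""
    return [a * (zk - ck) for a, zk, ck in zip(CURVATURE, z, CENTER)]

rng = random.Random(0)
init = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(8)]
final = adam_particle_flow(init, toy_grad)
```

On this potential a plain Euler step of size 0.05 would diverge along the stiff coordinate, because its update multiplier is 1 - 0.05 × 100 = -4. Adam's per-coordinate normalization removes that sensitivity to curvature, at the cost of no longer being a faithful discretization of the ODE.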

Experimental Results

DWGF is evaluated on FFHQ-512 for box inpainting and 8× super-resolution, compared against PSLD and RLSD baselines. Metrics include FID, PSNR, and LPIPS.
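Of the three metrics, PSNR is the only one with a closed-form definition; FID and LPIPS both depend on pretrained networks and are not sketched here. The function below implements the standard formula, 10 log10(MAX² / MSE), for images stored as nested lists. The intensity range and the handling of color channels in the paper's evaluation are not stated in this summary, so `max_value=1.0` is an assumption.

```python
import math

def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images,
    given as nested lists of pixel intensities in [0, max_value]."""
    ref = [v for row in reference for v in row]
    est = [v for row in estimate for v in row]
    if len(ref) != len(est):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(ref, est)) / len(ref)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because PSNR rewards low mean squared error, a blurry reconstruction close to the posterior mean can score well on it while still scoring poorly on FID.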

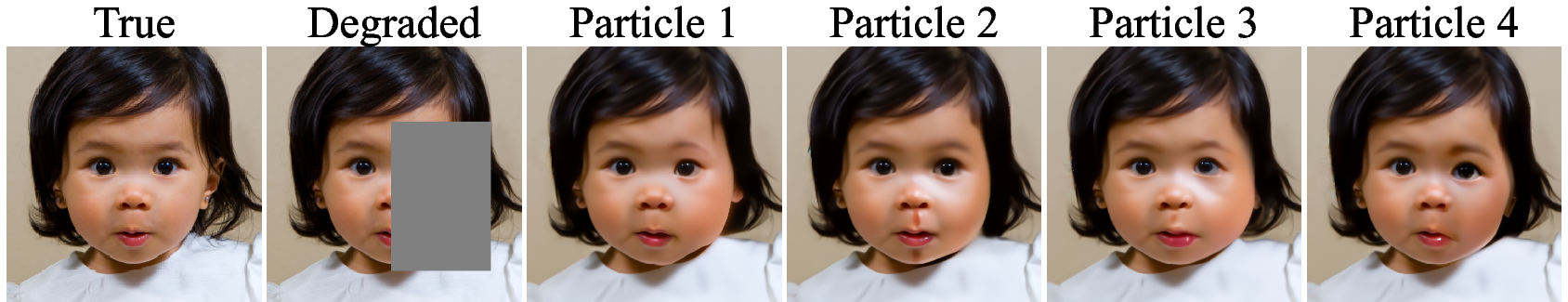

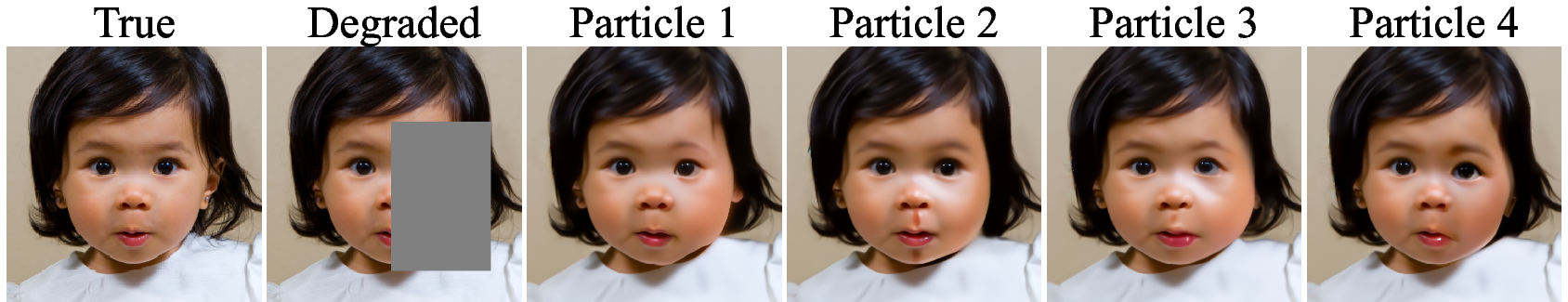

Figure 1: Diversity of the particles x0 produced by DWGF on large box inpainting.

DWGF achieves competitive PSNR and LPIPS but exhibits significantly higher FID, indicating less realistic sample quality. Qualitative analysis reveals that DWGF produces diverse but blurry reconstructions, attributed to the mode-seeking nature of the KL divergence. The particle cloud generated by DWGF demonstrates substantial diversity, but the lack of sharpness and realism is a notable limitation.

Implementation Considerations

- Computational Requirements: The particle-based Monte Carlo approximation incurs substantial memory and compute overhead, especially for large N and high-dimensional latent spaces.

- Optimizer Choice: Adam is used for ODE discretization, introducing momentum and adaptive preconditioning, which is unconventional but empirically beneficial for ill-conditioned landscapes.

- Regularization: The choice of regularization weight γ and data consistency parameter λ is critical for balancing fidelity and diversity.

- Scalability: The method is compatible with few-step and consistency models, suggesting potential for acceleration and reduced sampling steps.

Limitations and Future Directions

DWGF does not match state-of-the-art FID scores, likely because of insufficient regularization and limited hyperparameter tuning. The framework can be extended with entropic regularization or repulsive potentials to mitigate mode collapse and improve sample realism. Integrating consistency models and using control variates for variance reduction are promising avenues for future research. The particle-based approach may also be optimized further for memory efficiency.

Theoretical Implications

DWGF provides a rigorous gradient flow perspective for posterior sampling in inverse problems, bridging optimal transport theory and generative modeling. The use of WGF in latent space enables principled regularization and efficient sampling, with potential applications in broader Bayesian inference tasks.

Conclusion

DWGF offers a principled, training-free approach for solving inverse problems with latent diffusion priors, leveraging Wasserstein gradient flows of KL divergence. While competitive in perceptual and reconstruction metrics, the method currently underperforms in sample realism. Future work should focus on enhanced regularization, integration with fast generative models, and improved computational efficiency. The gradient flow framework opens new directions for Bayesian inference and generative modeling in high-dimensional inverse problems.