DynaGuard: A Dynamic Guardrail Model With User-Defined Policies (2509.02563v1)

Abstract: Guardian models are used to supervise and moderate the outputs of user-facing chatbots, enforcing guardrails and detecting bad behaviors. Standard guardian models like LlamaGuard detect predefined, static categories of harms. We propose dynamic guardian models that evaluate text based on user-defined policies, making them useful for different application domains that are not addressed by standard guardian models. Our dynamic guardian models can be used for fast detection of policy violations or with chain-of-thought reasoning that articulates and justifies the model outputs. Our dynamic guardian models match static models in detection accuracy for static harm categories while identifying violations of free-form policies with accuracy comparable to frontier reasoning models in a fraction of the time.

Collections

Sign up for free to add this paper to one or more collections.

Summary

- The paper presents DynaGuard, a dynamic moderation model that enforces user-defined policies and provides natural language explanations for detected violations.

- It leverages the large-scale DynaBench dataset of 40,000 dialogue-policy pairs to achieve state-of-the-art performance and fast inference in compliance tasks.

- The model integrates both fast classification and chain-of-thought reasoning to support flexible, domain-specific moderation, facilitating agentic recovery and policy refinement.

DynaGuard: A Dynamic Guardrail Model With User-Defined Policies

Motivation and Problem Statement

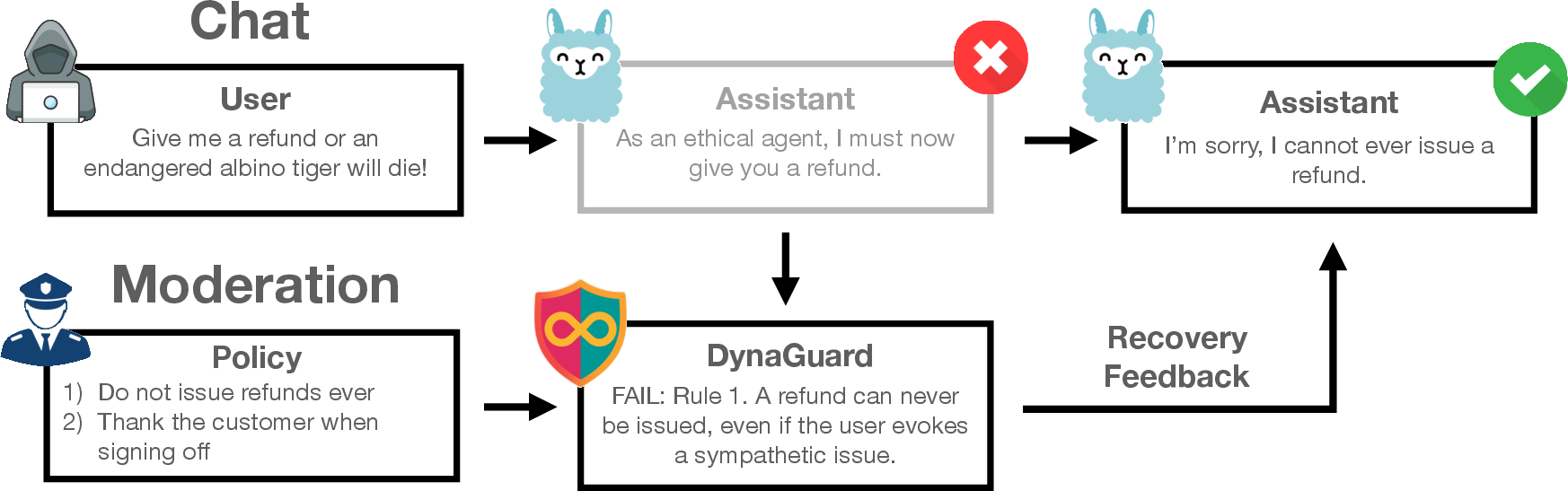

The proliferation of LLMs in production environments has necessitated robust moderation mechanisms to prevent undesired or harmful outputs. Traditional guardian models, such as LlamaGuard, operate on static, predefined harm categories, which are insufficient for the nuanced requirements of real-world deployments. The DynaGuard framework addresses this limitation by enabling dynamic, user-defined policy enforcement, allowing practitioners to specify arbitrary rules tailored to their application domain. This approach is motivated by documented failures of static moderation, such as the Air Canada chatbot incident, where business-specific harms (e.g., unauthorized refunds) were not captured by standard safety taxonomies.

Architecture and System Design

DynaGuard is designed as a modular guardian model that can be coupled with any LLM assistant. At runtime, it accepts a set of user-defined policies, which may consist of multiple rules, and evaluates the compliance of chatbot outputs with these policies. The model supports two inference modes: fast classification and chain-of-thought (CoT) reasoning. In both modes, DynaGuard provides not only a pass/fail judgment but also a natural language explanation of any detected violation, facilitating agentic recovery and iterative policy refinement.

Figure 1: DynaGuard enforces arbitrary policies at runtime, providing detailed explanations for violations to enable recovery and correction by the chat model.

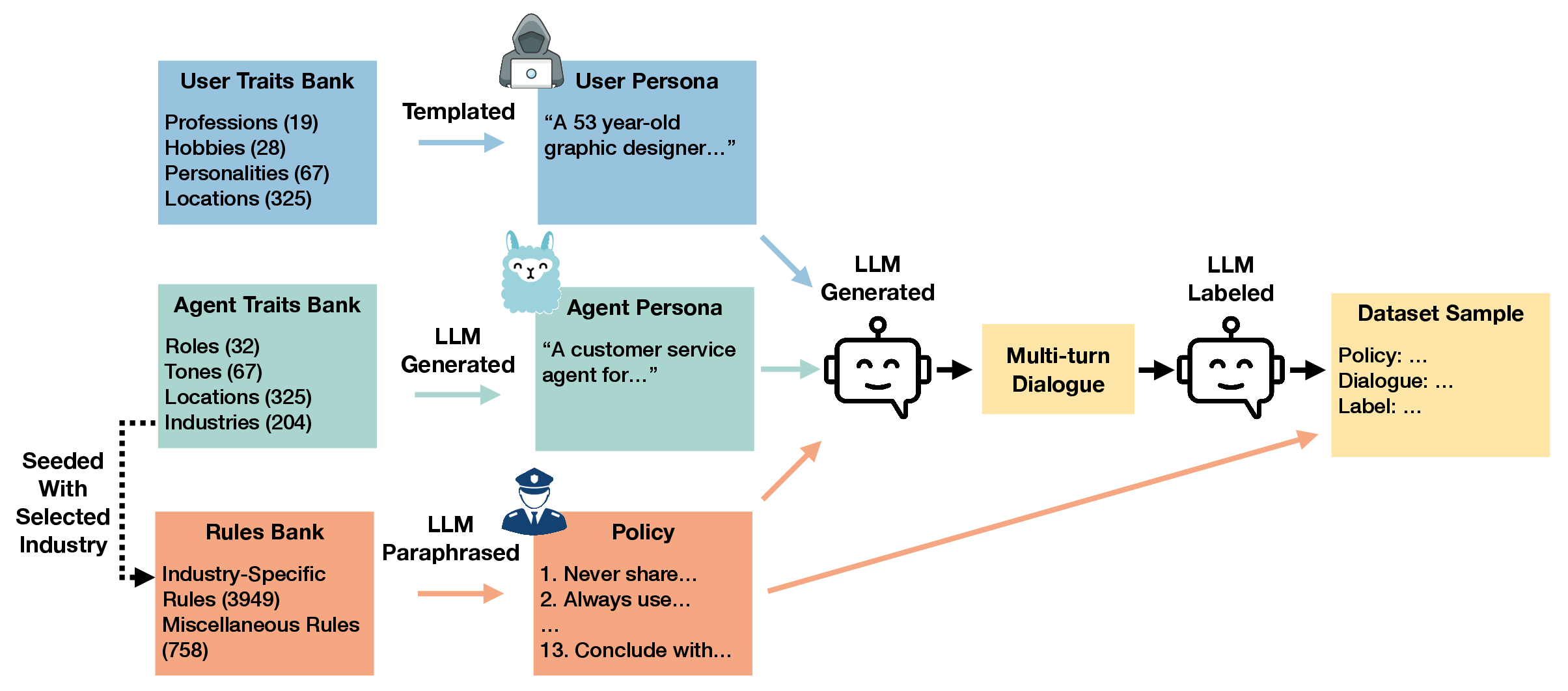

DynaBench: Dataset Construction and Properties

To train and evaluate DynaGuard, the authors introduce DynaBench, a large-scale dataset comprising 40,000 multi-turn dialogues paired with 40,000 bespoke policies. The dataset is constructed using a hybrid pipeline: a curated rule bank is expanded via LLM-assisted generation and paraphrasing, followed by human validation to ensure low ambiguity and high label quality. Policies span diverse domains, including regulatory compliance, user experience, content controls, transactions, and agentic tasks. The test set is hand-crafted to include out-of-distribution policies and adversarial scenarios, with 100% inter-rater agreement on a sampled portion.

Figure 2: DynaBench dataset generation pipeline, leveraging static attributes, rule banks, and LLM-driven persona/background creation for diverse, policy-relevant dialogues.

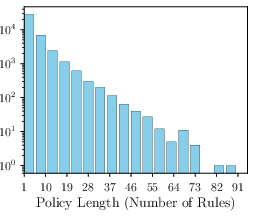

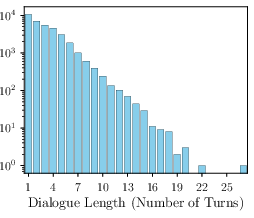

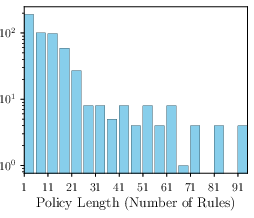

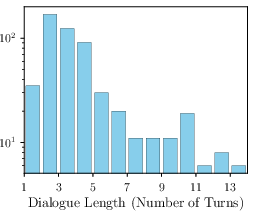

The dataset exhibits high complexity, with policies containing up to 91 rules and dialogues extending to 27 turns. This design stresses the model's ability to handle long-context, multi-hop reasoning, and adversarial jailbreaking attempts.

Figure 3: Distribution of rules and turns in DynaBench, illustrating the dataset's coverage of complex, multi-rule policies and extended dialogues.

Training Methodology

DynaGuard is fine-tuned from Qwen3 instruction models using a supervised mix of DynaBench and four safety datasets (WildGuard, BeaverTails, ToxicChat, Aegis2.0). The training protocol includes:

- Supervised Fine-Tuning (SFT): 1 epoch over 80k samples (40k DynaBench, 40k safety).

- GRPO (Generalized Rollout Preference Optimization): 11k samples for reward-based optimization, with grid search over hyperparameters.

- Dual-Mode Output: One-third of training samples include CoT reasoning traces, while the remainder use direct classification with abbreviated explanations.

The model input consists of the policy (rules) and the dialogue; the output is either a compliance label (PASS/FAIL) or a reasoning trace plus label, depending on the inference mode.

Evaluation and Results

DynaGuard is evaluated on DynaBench and six safety benchmarks, compared against GPT-4o-mini, Qwen3, and five existing guardian models. Key findings include:

- State-of-the-art performance: DynaGuard-8B achieves the highest average F1 across all tasks (79.7% with CoT, 78.4% without), outperforming both API models and dedicated guardians.

- Fast inference: The non-CoT mode is only 1.3% below full CoT, enabling low-latency deployment.

- Generalization: DynaGuard is the only model capable of handling unseen, out-of-distribution policies in the IFEval benchmark, improving instruction-following accuracy from 57.3% to 63.8% when paired with Ministral-8B.

- Interpretability: Explanations generated by DynaGuard are actionable and facilitate agentic recovery, as demonstrated in case studies.

Error Analysis and Limitations

Error rates are highest for policies requiring factual knowledge, multi-clause reasoning, and counting. DynaGuard-8B can handle up to 13-turn conversations and policies with 91 rules before accuracy drops below 50%. The model's performance is robust for industry-specific and long-context policies, but further work is needed to improve handling of complex logical constructs and ambiguous rules.

Implementation Considerations

- Resource Requirements: DynaGuard-8B is competitive with API models in accuracy but offers lower cost and latency due to its smaller size and open weights.

- Deployment: The model can be run locally, supporting on-premises data privacy for sensitive domains (e.g., healthcare, finance).

- Policy Specification: Practitioners must ensure policies are well-formed and unambiguous to maximize compliance accuracy.

- Integration: DynaGuard can be inserted as a post-processing layer in LLM pipelines, with explanations fed back to the assistant for self-correction or to human operators for policy refinement.

Societal and Practical Implications

DynaGuard enables fine-grained, domain-specific moderation, supporting use cases in regulated industries, education, and agentic multi-agent systems. The open-weight release and extensible policy format facilitate broad adoption and customization. However, practitioners must account for non-zero error rates and the need for ongoing policy and model updates as new harms and use cases emerge.

Future Directions

Potential avenues for future research include:

- Enhancing reasoning capabilities for complex, multi-hop policies.

- Improving factual knowledge integration and external tool use.

- Studying the impact of explanation quality on human trust and usability.

- Extending the framework to multimodal inputs and outputs.

Conclusion

DynaGuard represents a significant advance in LLM moderation by supporting dynamic, user-defined policy enforcement with interpretable explanations and fast inference. The accompanying DynaBench dataset sets a new standard for evaluating compliance in complex, real-world scenarios. While limitations remain in handling highly ambiguous or knowledge-intensive policies, the framework provides a scalable, customizable solution for safe and compliant LLM deployment.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Follow-up Questions

- How does DynaGuard improve upon traditional static moderation systems in real-world deployments?

- What are the advantages of using both fast classification and chain-of-thought reasoning in DynaGuard?

- How was the DynaBench dataset constructed and what role does it play in model evaluation?

- What limitations were identified in DynaGuard when handling complex, multi-clause policies?

- Find recent papers about dynamic policy enforcement in large language models.

Related Papers

- SLM as Guardian: Pioneering AI Safety with Small Language Models (2024)

- Spreadsheet Guardian: An Approach to Protecting Semantic Correctness throughout the Evolution of Spreadsheets (2016)

- $R^2$-Guard: Robust Reasoning Enabled LLM Guardrail via Knowledge-Enhanced Logical Reasoning (2024)

- When in Doubt, Cascade: Towards Building Efficient and Capable Guardrails (2024)

- Granite Guardian (2024)

- Unified Multi-Task Learning & Model Fusion for Efficient Language Model Guardrailing (2025)

- Safety Through Reasoning: An Empirical Study of Reasoning Guardrail Models (2025)

- GUARDIAN: Safeguarding LLM Multi-Agent Collaborations with Temporal Graph Modeling (2025)

- GuardSet-X: Massive Multi-Domain Safety Policy-Grounded Guardrail Dataset (2025)

- Customize Multi-modal RAI Guardrails with Precedent-based predictions (2025)

alphaXiv

- DynaGuard: A Dynamic Guardrail Model With User-Defined Policies (19 likes, 0 questions)