- The paper presents a multi-level framework that repositions anthropomorphism as a strategic design parameter in LLMs.

- It categorizes cues into perceptual, linguistic, behavioral, and cognitive dimensions to align system capabilities with user expectations.

- It offers actionable recommendations for calibrating human-like features to boost engagement while mitigating ethical risks.

Multi-Level Framework for Anthropomorphism in LLM Design

Introduction

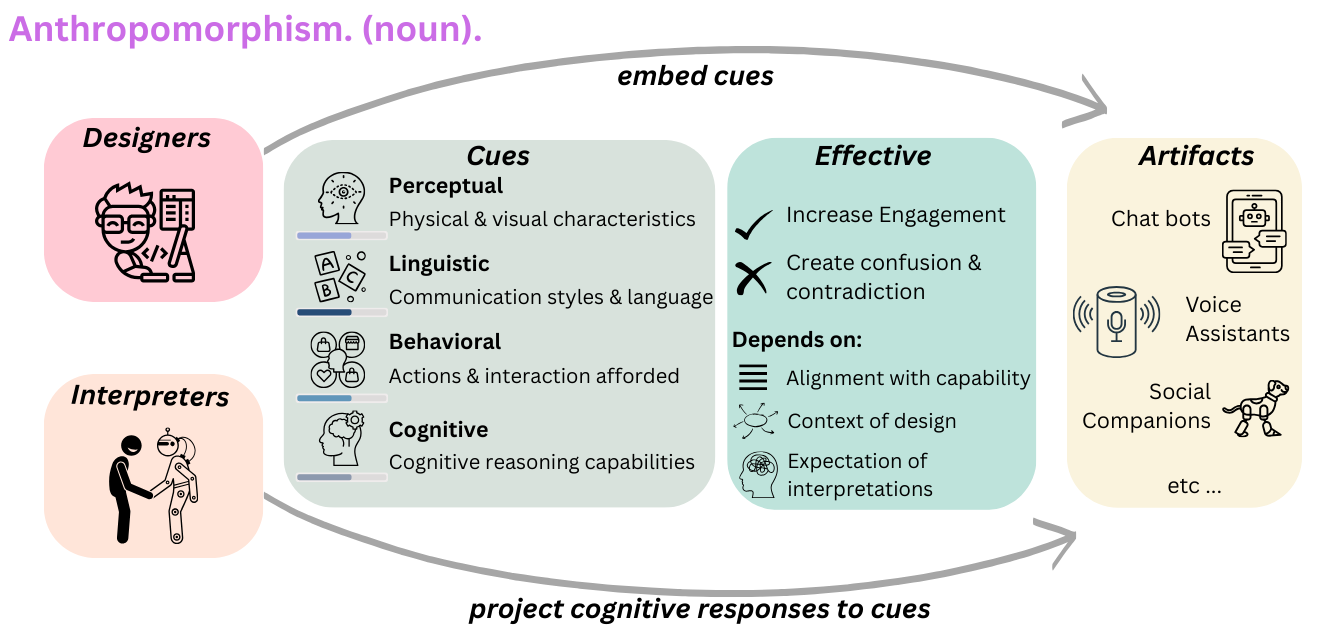

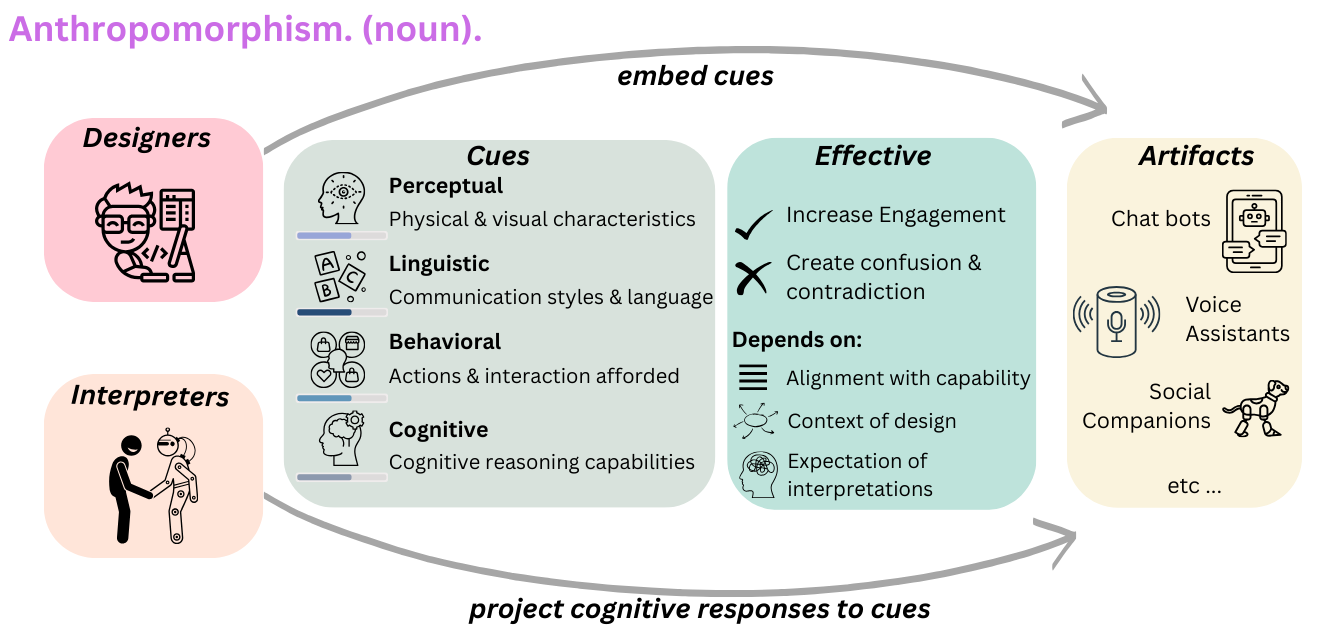

The paper "Humanizing Machines: Rethinking LLM Anthropomorphism Through a Multi-Level Framework of Design" (2508.17573) reconceptualizes anthropomorphism in the context of LLMs. Rather than treating anthropomorphism solely as a risk factor or a linguistic artifact, the authors propose a multi-dimensional, reciprocal framework that positions it as a design parameter, intentionally embedded by system designers and interpreted by users. The approach synthesizes perspectives from NLP, HCI, robotics, and information science, and introduces a taxonomy of cues (perceptual, linguistic, behavioral, and cognitive) that can be calibrated to align with user goals, system capabilities, and contextual requirements.

Figure 1: Illustrative definition of Anthropomorphism, showing the reciprocal interaction between designer-embedded cues and interpreter projections.

Historical and Conceptual Foundations

Anthropomorphism has been a central concept in human-machine interaction since Turing's Imitation Game and the ELIZA effect observed with Weizenbaum's ELIZA program. The paper traces the evolution of anthropomorphism definitions across three eras: foundational (pre-2000), embodied agents and HRI emergence (2000–2015), and the LLM/dynamic interaction era (2016–present). This diachronic synthesis shows that anthropomorphism is not a static set of traits but a context-sensitive interaction shaped by design intentions, interpreter expectations, and social norms.

The authors argue that the prevailing risk-centric discourse, with its focus on over-trust, deception, and bias, has limited the exploration of anthropomorphism's functional benefits. They advocate a shift toward effectiveness-oriented evaluation, which requires actionable design levers and context-sensitive calibration.

Multi-Dimensional Taxonomy of Anthropomorphic Cues

The framework categorizes anthropomorphic cues into four dimensions, each operating on a continuum from low to high intensity:

- Perceptual Cues: Physical or visual features (e.g., avatars, icons) that convey human-likeness. High realism increases social presence but risks cognitive dissonance if not matched by system competence.

- Linguistic Cues: Language choices (pronouns, tone, affective words) that signal humanness. Metrics such as HUMT and AnthroScore quantify cue intensity, but cultural and contextual calibration is essential.

- Behavioral Cues: Actions and interaction patterns (contingent responses, adaptive behaviors) that evoke agency and intentionality. Embodied agents afford richer behavioral cues than purely digital artifacts.

- Cognitive Cues: Reasoning-level signals (reflection, uncertainty, empathy) that suggest internal deliberation or intelligence. High-level cues enhance engagement in relationship-oriented domains but can mislead in high-stakes or transactional contexts.

Each cue dimension is mapped to components of Theory of Mind (ToM), supporting the attribution of mental states and social agency to artifacts.
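To make the idea of four dimensions on a low-to-high continuum concrete, the following is a minimal sketch of how a cue profile might be represented. The `CueProfile` class, the 0.0 to 1.0 scale, and the example values are illustrative assumptions; the paper describes the continuum conceptually and does not prescribe a numeric encoding.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CueProfile:
    """Hypothetical container: one intensity per cue dimension.

    Intensities are assumed to lie in [0.0, 1.0], from low to high.
    """
    perceptual: float = 0.0
    linguistic: float = 0.0
    behavioral: float = 0.0
    cognitive: float = 0.0

    def __post_init__(self):
        # Reject values outside the assumed continuum.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{f.name} must be in [0, 1], got {value}")

    def dominant(self) -> str:
        """Return the name of the dimension with the highest intensity."""
        return max(fields(self), key=lambda f: getattr(self, f.name)).name


# Illustrative values only; they are not taken from the paper.
companion_bot = CueProfile(perceptual=0.3, linguistic=0.8, behavioral=0.5, cognitive=0.7)
billing_bot = CueProfile(linguistic=0.3)
```

Keeping the four dimensions as separate fields reflects the taxonomy's claim that each can be tuned independently, although real deployments may couple them.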

Effectiveness and Risks of Anthropomorphic Design

The effectiveness of anthropomorphic cues is contingent on three alignment factors:

- Capability-Expectation Alignment: Cues must not overpromise system competence.

- Context-Purpose Alignment: Cue intensity should match the stakes and requirements of the interaction.

- Cultural-Norm Alignment: Cues must respect diverse expectations and avoid normative bias.
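The first two alignment factors can be sketched as a simple capping rule: a requested cue intensity is never allowed to exceed a ceiling derived from system reliability and task stakes. The `calibrate` function, the ceiling formula, and the `reliability` and `stakes` inputs are assumptions made for illustration and are not a method proposed in the paper. Cultural-norm alignment is not modeled here.

```python
DIMENSIONS = ("perceptual", "linguistic", "behavioral", "cognitive")


def calibrate(requested, reliability, stakes):
    """Cap each requested cue intensity by an assumed ceiling.

    requested:   dict mapping dimension name -> desired intensity in [0, 1]
    reliability: assumed estimate of system competence in [0, 1]
    stakes:      assumed estimate of task criticality in [0, 1]
    """
    if not (0.0 <= reliability <= 1.0 and 0.0 <= stakes <= 1.0):
        raise ValueError("reliability and stakes must be in [0, 1]")
    # Assumed rule: the ceiling grows with reliability and shrinks as stakes rise.
    ceiling = reliability * (1.0 - 0.5 * stakes)
    # Dimensions missing from the request default to 0.0 (no cue).
    return {dim: min(requested.get(dim, 0.0), ceiling) for dim in DIMENSIONS}


# Hypothetical request for a high-stakes deployment.
requested = {"perceptual": 0.2, "linguistic": 0.9, "cognitive": 0.8}
calibrated = calibrate(requested, reliability=0.8, stakes=1.0)
```

In this example the ceiling is 0.4, so the linguistic and cognitive cues are reduced while the already modest perceptual cue is left unchanged.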

The paper cites empirical evidence that well-calibrated cues can improve trust, usability, and engagement. For example, realistic avatars and emotive language increase perceived credibility, while adaptive behavioral cues enhance rapport and task performance. Misaligned cues, such as excessive realism or unsolicited autonomy, can instead erode trust, amplify bias, and produce negative affective responses.

Design Recommendations

The paper provides actionable recommendations for practitioners:

- Align cues with artifact capabilities: Perceptual, behavioral, and cognitive cues should be proportionate to system reliability and task criticality. Overly human-like features risk disappointment and miscalibrated trust.

- Participatory implementation techniques: Enable adaptive, user-controlled anthropomorphism through adjustable parameters and feedback mechanisms. Artifacts should learn and update cue profiles based on interaction data.

- Context-sensitive implementation: Calibrate cue intensity to deployment context, monitor interpreter-artifact dynamics, and adapt to cultural variation.

- Future-oriented evaluations: Develop reliable metrics for all cue dimensions, correlate them with task outcomes, and engage in interdisciplinary collaboration for metric validation.
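The recommendation on adaptive, user-controlled anthropomorphism could be sketched as a feedback-driven update that stays inside bounds the user has chosen. The `update_intensity` function, the three-valued feedback signal, and the step size `rate` are hypothetical choices for illustration; the paper does not specify an update rule.

```python
def update_intensity(current, feedback, user_min=0.0, user_max=1.0, rate=0.2):
    """Nudge one cue intensity toward user feedback, inside user-chosen bounds.

    feedback: -1 (too human-like), 0 (about right), +1 (not human-like enough)
    rate:     assumed step size applied per feedback signal
    """
    if feedback not in (-1, 0, 1):
        raise ValueError("feedback must be -1, 0, or 1")
    if not 0.0 <= user_min <= user_max <= 1.0:
        raise ValueError("bounds must satisfy 0 <= user_min <= user_max <= 1")
    proposed = current + rate * feedback
    # Clamp so the system never leaves the range the user allowed.
    return max(user_min, min(user_max, proposed))


# A user who caps linguistic cues at 0.6 and asks for more warmth twice.
level = 0.4
for _ in range(2):
    level = update_intensity(level, feedback=1, user_max=0.6)
```

After the second request the level stays at the user's cap of 0.6, which illustrates the design choice: learned adaptation operates within limits that remain under user control.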

Limitations and Ethical Considerations

The framework is primarily theoretical and idealizes cue dimensions for analytical clarity. Real-world deployments often feature overlapping modalities and limited designer control, especially in commercial LLMs. Ethical risks include miscalibrated trust, affective misinterpretation, and cultural bias. The taxonomy could be misused for manipulative or persuasive systems, necessitating mitigation strategies such as interpretability indicators and participatory co-design.

Implications and Future Directions

The multi-level framework advances the theoretical understanding of anthropomorphism in LLMs and provides a foundation for systematic, context-sensitive design. Practically, it enables the intentional tuning of human-like features to support user goals, enhance engagement, and maintain trust. Theoretically, it invites further research into cross-cue dynamics, participatory adaptation, and empirical validation across diverse domains and cultures.

Future work should focus on developing robust metrics for behavioral and cognitive cues, modeling interdependencies among cue dimensions, and empirically testing the framework in real-world deployments. Interdisciplinary collaboration will be essential to ensure that anthropomorphic design remains transparent, socially aligned, and ethically responsible.

Conclusion

This paper reframes anthropomorphism in LLMs as a calibrated, multi-dimensional design parameter, embedded by designers and projected by interpreters. By categorizing cues into perceptual, linguistic, behavioral, and cognitive dimensions, and advocating for effectiveness-oriented evaluation, the framework enables more nuanced, context-sensitive, and user-aware design of human-like AI systems. The approach balances technical considerations with social and ethical imperatives, supporting the development of LLMs that function both as utilitarian tools and as social partners. Future research should empirically validate and refine the framework, with particular attention to cross-cue interactions and participatory adaptation.