NextStep-1: Toward Autoregressive Image Generation with Continuous Tokens at Scale (2508.10711v2)

Abstract: Prevailing autoregressive (AR) models for text-to-image generation either rely on heavy, computationally-intensive diffusion models to process continuous image tokens, or employ vector quantization (VQ) to obtain discrete tokens with quantization loss. In this paper, we push the autoregressive paradigm forward with NextStep-1, a 14B autoregressive model paired with a 157M flow matching head, trained on discrete text tokens and continuous image tokens with next-token prediction objectives. NextStep-1 achieves state-of-the-art performance for autoregressive models in text-to-image generation tasks, exhibiting strong capabilities in high-fidelity image synthesis. Furthermore, our method shows strong performance in image editing, highlighting the power and versatility of our unified approach. To facilitate open research, we will release our code and models to the community.


Summary

- The paper presents a 14B-parameter AR model that directly predicts continuous image tokens, achieving state-of-the-art results among AR models in text-to-image synthesis along with strong image editing performance.

- It unifies text and image tokens in a single Transformer sequence, pairing the backbone with a lightweight flow matching head and a regularized tokenizer for high-fidelity outputs.

- Empirical benchmarks show performance competitive with diffusion models, with notable gains in compositional reasoning, while ablations highlight the importance of latent space regularization.

NextStep-1: Autoregressive Image Generation with Continuous Tokens at Scale

Introduction and Motivation

NextStep-1 addresses the limitations of prior autoregressive (AR) text-to-image models, which have historically relied either on computationally intensive diffusion models to process continuous image tokens or on vector quantization (VQ) to obtain discrete tokens. Both choices carry trade-offs in image quality, training stability, and scalability: the former adds a heavy diffusion component, while the latter incurs quantization loss. The core contribution of NextStep-1 is to show that a large-scale AR model operating directly on continuous image tokens and trained with a next-token prediction (NTP) objective can achieve state-of-the-art performance among AR models in text-to-image generation, compositional reasoning, and image editing, rivaling or surpassing diffusion-based approaches on several benchmarks.

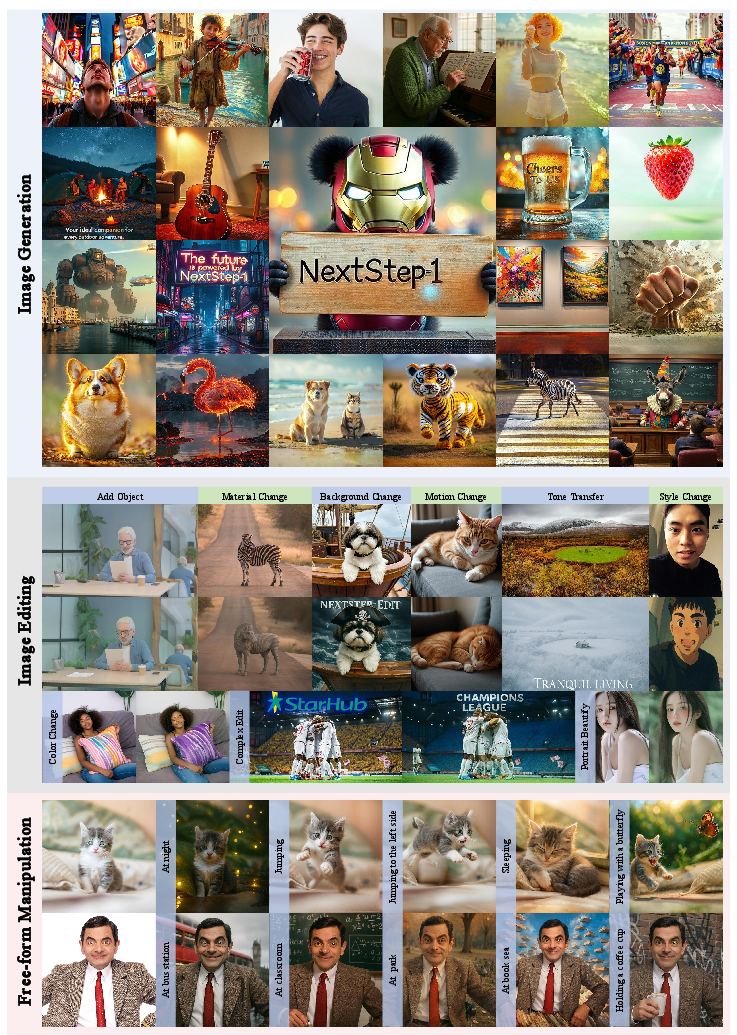

Figure 1: NextStep-1 enables high-fidelity image generation, diverse image editing, and complex free-form manipulation.

Model Architecture and Training Paradigm

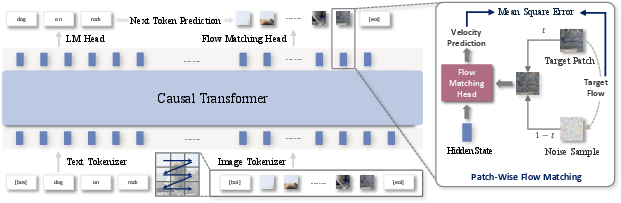

NextStep-1 is a 14B-parameter causal Transformer, initialized from Qwen2.5-14B and augmented with two modality-specific heads: a standard language modeling (LM) head for discrete text tokens and a lightweight 157M-parameter flow matching (FM) head for continuous image tokens. The image tokenizer, fine-tuned from the Flux VAE, encodes images into 16-channel latents with 8× spatial downsampling, followed by channel-wise normalization and stochastic noise perturbation to regularize the latent space.

Figure 2: The NextStep-1 framework unifies text and image tokens in a single sequence, with the FM head predicting the flow from noise to target image patch during training and guiding iterative patch generation at inference.
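The latent shapes and the two tokenizer regularization steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the NextStep-1 tokenizer: per-channel statistics are computed over a single latent, and the noise scale `sigma` is a hypothetical value.

```python
import math
import random


def latent_shape(height, width, channels=16, downsample=8):
    """Shape (C, H, W) of the latent grid for an 8x-downsampling, 16-channel encoder."""
    assert height % downsample == 0 and width % downsample == 0
    return channels, height // downsample, width // downsample


def normalize_channels(latent, eps=1e-6):
    """Standardize each channel to zero mean and unit variance.

    `latent` is a list of channels, each a flat list of spatial values.
    Statistics are computed per latent here (an assumption for illustration).
    """
    normalized = []
    for values in latent:
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        std = math.sqrt(var + eps)
        normalized.append([(v - mean) / std for v in values])
    return normalized


def perturb(latent, sigma, rng):
    """Add i.i.d. Gaussian noise with a hypothetical standard deviation `sigma`."""
    return [[v + rng.gauss(0.0, sigma) for v in values] for values in latent]


print(latent_shape(256, 256))  # (16, 32, 32)
print(latent_shape(512, 512))  # (16, 64, 64)

rng = random.Random(0)
c, h, w = latent_shape(256, 256)
latent = [[rng.uniform(-3.0, 5.0) for _ in range(h * w)] for _ in range(c)]
normed = normalize_channels(latent)
noisy = perturb(normed, sigma=0.5, rng=rng)
```

Normalizing before perturbing keeps the noise scale meaningful relative to each channel's spread; without normalization, a fixed `sigma` would affect high-variance and low-variance channels very differently.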

The unified multimodal sequence is modeled autoregressively as $p(x) = \prod_{i=1}^{n} p(x_i \mid x_{<i})$, where $x_i$ is either a discrete text token or a continuous image token. The training objective is a weighted sum of the cross-entropy loss for text tokens and the flow matching loss (mean squared error between predicted and target velocity vectors) for image tokens. The image tokens are pixel-shuffled into a compact 1D sequence via a space-to-depth transformation, facilitating efficient Transformer processing.
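The space-to-depth step and the two loss terms can be sketched as follows. Several details are assumptions for illustration: a 2×2 patch size, channel-major ordering inside each token, a rectified-flow-style linear path from Gaussian noise `x0` to the target token `x1` (target velocity `x1 - x0`), and hypothetical loss weights `w_text` and `w_image`. In the real model the FM head is conditioned on the Transformer's hidden state; `predict_velocity` is a stand-in for that conditioned head.

```python
import math
import random


def space_to_depth(latent, patch=2):
    """Fold each patch x patch block of a C x H x W latent into one token.

    Returns (H / patch) * (W / patch) tokens in raster order, each of
    dimension C * patch * patch (channel-major ordering is an assumption).
    """
    channels = len(latent)
    height, width = len(latent[0]), len(latent[0][0])
    assert height % patch == 0 and width % patch == 0
    tokens = []
    for row in range(0, height, patch):
        for col in range(0, width, patch):
            token = []
            for c in range(channels):
                for dy in range(patch):
                    for dx in range(patch):
                        token.append(latent[c][row + dy][col + dx])
            tokens.append(token)
    return tokens


def flow_matching_loss(predict_velocity, x1, rng):
    """MSE between predicted and target velocity on a linear noise-to-data path."""
    x0 = [rng.gauss(0.0, 1.0) for _ in x1]
    t = rng.random()
    xt = [(1 - t) * a + t * b for a, b in zip(x0, x1)]
    target = [b - a for a, b in zip(x0, x1)]
    pred = predict_velocity(xt, t)
    return sum((p - g) ** 2 for p, g in zip(pred, target)) / len(x1)


def cross_entropy(logits, target_index):
    """Cross-entropy of one discrete text token, computed stably from raw logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[target_index]


def total_loss(text_ce, image_fm, w_text=1.0, w_image=1.0):
    """Weighted sum of the text and image objectives (weights are hypothetical)."""
    return w_text * text_ce + w_image * image_fm


def zero_head(xt, t):
    """Trivial stand-in for the FM head that always predicts zero velocity."""
    return [0.0] * len(xt)


rng = random.Random(0)
latent = [[[c * 100 + y * 10 + x for x in range(4)] for y in range(4)] for c in range(16)]
tokens = space_to_depth(latent)
print(len(tokens), len(tokens[0]))  # 4 64
print(tokens[0][:4])  # [0, 1, 10, 11]

fm = flow_matching_loss(zero_head, tokens[0], rng)
ce = cross_entropy([0.0, 0.0, 0.0, 0.0], target_index=2)  # equals log(4)
loss = total_loss(ce, fm)
```

Folding 2×2 blocks into the channel dimension cuts the number of image tokens by a factor of four, which is why the transformation shortens the sequence the Transformer must process.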

Data Curation and Multimodal Coverage

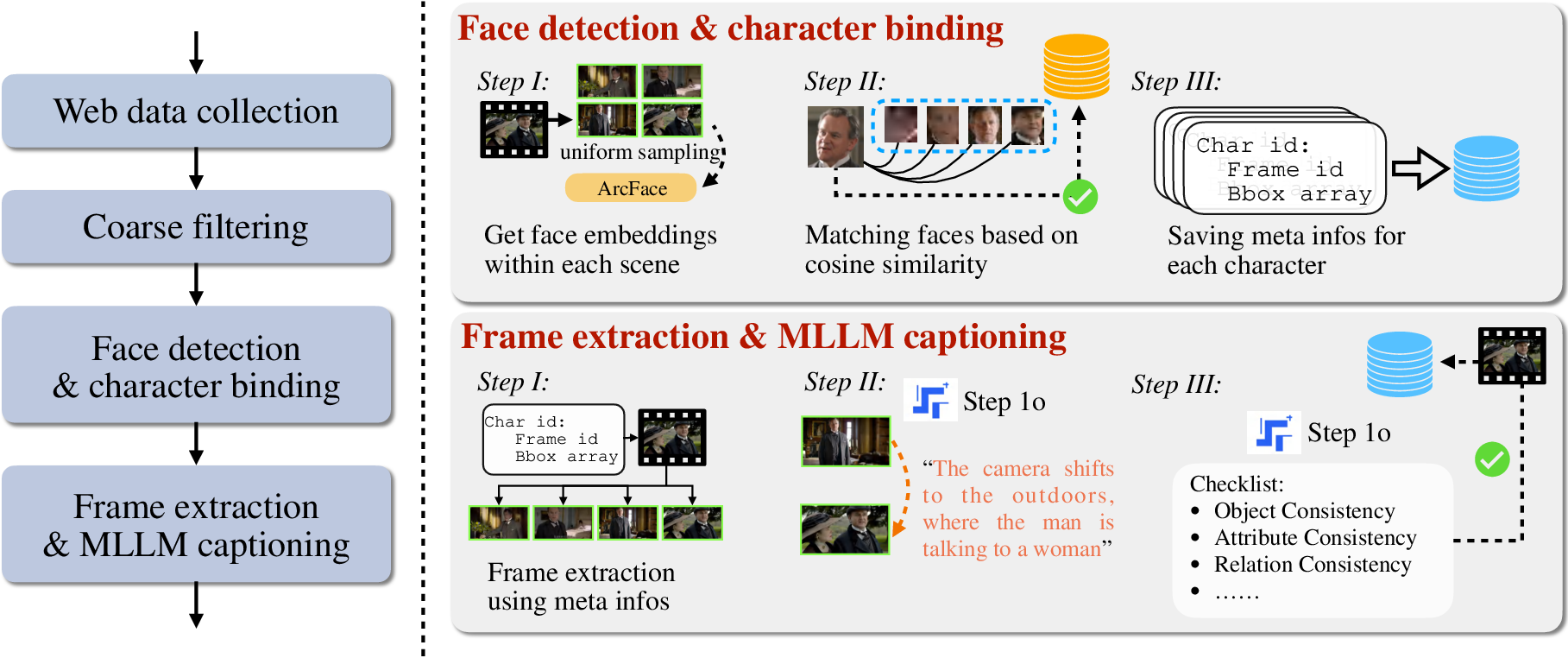

The training corpus comprises four primary data categories: 400B text-only tokens, 550M high-quality image-text pairs, 1M instruction-guided image-to-image samples, and 80M interleaved video-text samples. The image-text pairs are curated via aesthetic, watermark, and semantic alignment filtering, with recaptioning for linguistic diversity. The interleaved data includes character-centric, tutorial, and multi-view samples, enhancing the model's world knowledge and compositional reasoning.

Figure 3: Character-centric data processing pipeline for constructing interleaved video-text datasets.
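The image-text filtering step can be pictured as a conjunction of per-sample checks. The sketch below is hypothetical throughout: the score fields (`aesthetic_score`, `watermark_prob`, `alignment_score`) and the thresholds are placeholders, not the models or values used to curate the NextStep-1 corpus.

```python
from dataclasses import dataclass


@dataclass
class ImageTextPair:
    caption: str
    aesthetic_score: float  # hypothetical aesthetic-predictor output
    watermark_prob: float  # hypothetical watermark-detector probability
    alignment_score: float  # hypothetical image-text similarity score


def keep(pair, min_aesthetic=5.0, max_watermark=0.5, min_alignment=0.25):
    """Return True only if the pair passes all three (hypothetical) filters."""
    return (
        pair.aesthetic_score >= min_aesthetic
        and pair.watermark_prob <= max_watermark
        and pair.alignment_score >= min_alignment
    )


pool = [
    ImageTextPair("a red bicycle", 6.1, 0.05, 0.31),
    ImageTextPair("stock photo", 5.8, 0.92, 0.29),
    ImageTextPair("blurry snapshot", 3.2, 0.10, 0.30),
]
kept = [p for p in pool if keep(p)]
print([p.caption for p in kept])  # ['a red bicycle']
```

Recaptioning would then run only on the surviving pairs, so the cheaper filters reduce the cost of the more expensive captioning pass.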

Training Regimen and Post-Training Alignment

The training follows a three-stage curriculum: (1) foundational learning at 256×256 resolution, (2) dynamic resolution scaling to 512×512, and (3) annealing on a high-quality subset for final refinement. Post-training involves supervised fine-tuning (SFT) on 5M high-quality samples, including chain-of-thought (CoT) data, and Direct Preference Optimization (DPO) using ImageReward-based preference pairs, both with and without explicit CoT reasoning.
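For reference, the standard DPO objective for one preference pair fits in a short function, shown here with one plausible way of turning reward-model scores into a pair. Both pieces are assumptions for illustration: the best-versus-worst selection rule is hypothetical, the scores stand in for ImageReward outputs, and how NextStep-1 computes log-likelihoods for continuous image tokens is not shown.

```python
import math


def make_preference_pair(samples, scores):
    """Pick the highest- and lowest-scoring samples as (chosen, rejected).

    `scores` stand in for reward-model scores such as ImageReward; the
    best-versus-worst rule is a hypothetical choice.
    """
    ranked = sorted(zip(scores, samples), key=lambda pair: pair[0])
    return ranked[-1][1], ranked[0][1]


def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss, -log(sigmoid(beta * margin)), for one pair.

    Inputs are sequence log-probabilities under the policy and the frozen
    reference model.
    """
    margin = (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    # -log(sigmoid(x)) equals softplus(-x); this form avoids overflow.
    z = -beta * margin
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


chosen, rejected = make_preference_pair(["img_a", "img_b", "img_c"], [0.2, 1.3, -0.4])
print(chosen, rejected)  # img_b img_c
print(round(dpo_loss(0.0, 0.0, 0.0, 0.0), 4))  # 0.6931
```

When the policy and reference agree (zero margin) the loss is log 2; it falls as the policy raises the chosen sample's likelihood relative to the rejected one, with `beta` controlling how sharply.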

Empirical Results and Benchmarking

Text-to-Image Generation

NextStep-1 achieves strong results across multiple benchmarks:

- GenEval: 0.63 (0.73 with Self-CoT), demonstrating robust compositional and spatial alignment.

- GenAI-Bench: 0.88/0.67 (basic/advanced), competitive with leading diffusion models.

- DPG-Bench: 85.28, confirming compositional fidelity in complex scenes.

- OneIG-Bench: 0.417 overall, outperforming all prior AR models by a significant margin.

- WISE: 0.54 (0.67 with Self-CoT), the highest among AR models for world knowledge grounding.

Image Editing

NextStep-1-Edit, fine-tuned on 1M edit-only samples, achieves 6.58 on GEdit-Bench-EN and 3.71 on ImgEdit-Bench, matching or exceeding open-source diffusion-based editors.

Architectural Analysis and Ablations

Flow Matching Head: Transformer Dominance

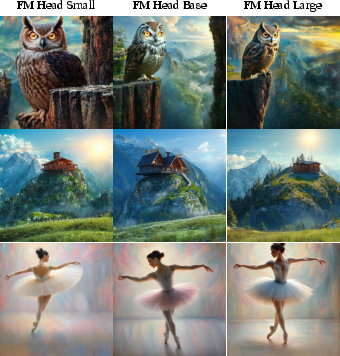

Ablation studies reveal that the size of the FM head has minimal impact on generation quality, indicating that the causal Transformer backbone is responsible for the core generative modeling, while the FM head acts as a lightweight sampler.

Figure 4: Images generated with different FM head sizes show negligible qualitative differences, confirming the Transformer’s dominant role.
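The "lightweight sampler" role can be illustrated with an Euler integrator that turns Gaussian noise into one image token by following a velocity field. The velocity function here is a toy assumption, not the trained FM head: it treats the conditioning vector as the target itself, so the integrator always lands on whatever the "backbone" supplied, mirroring the finding that the backbone, not the head, determines the content.

```python
import random


def euler_sample(velocity, condition, dim, steps, rng):
    """Integrate dx/dt = velocity(x, t, condition) from t = 0 (noise) to t = 1."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    dt = 1.0 / steps
    for k in range(steps):
        t = k * dt
        v = velocity(x, t, condition)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def toy_velocity(x, t, condition):
    """Toy field that transports any point to `condition` by t = 1.

    Stand-in for the FM head: the "hidden state" is simply the target itself.
    """
    return [(c - xi) / (1.0 - t) for xi, c in zip(x, condition)]


rng = random.Random(0)
hidden = [0.5, -1.0, 2.0]
for steps in (1, 4, 32):
    sample = euler_sample(toy_velocity, hidden, dim=3, steps=steps, rng=rng)
    print(steps, [round(v, 6) for v in sample])
```

With this toy field the result is independent of the step count; a learned head only approximates the field, so in practice the number of sampling steps trades speed against accuracy.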

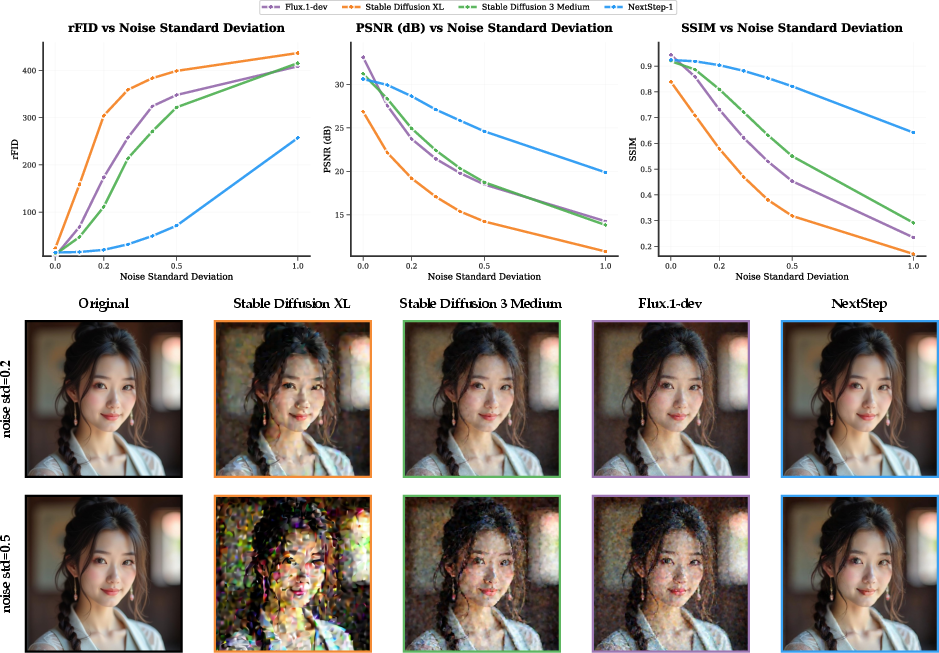

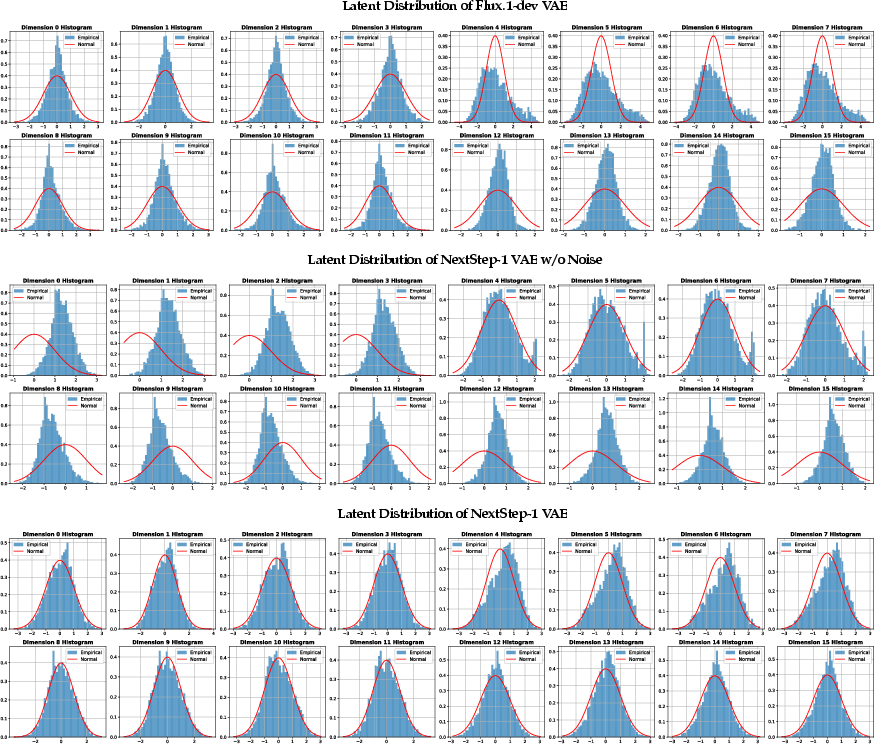

Tokenizer Design: Regularization and Robustness

The image tokenizer’s channel-wise normalization and noise perturbation are critical for stable training and high-fidelity generation. Empirically, stronger noise regularization during tokenizer training increases the generation loss yet improves synthesis quality, which the authors attribute to a more robust and more dispersed latent space.

Figure 5: Noise perturbation improves tokenizer robustness, as shown by quantitative metrics and qualitative reconstructions at varying noise levels.

Figure 6: Latent space dispersion increases with noise regularization, supporting better generative performance.

Practical Considerations and Limitations

Inference Latency

Sequential decoding remains a bottleneck, with per-token latency dominated by the Transformer and multi-step FM head sampling. Acceleration strategies such as few-step samplers, speculative decoding, and multi-token prediction are suggested for future work.
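A back-of-the-envelope count shows why sequential decoding dominates latency. The sketch assumes 8× VAE downsampling followed by a 2×2 space-to-depth patch, one KV-cached backbone step per image token, and a hypothetical `fm_steps` FM-head evaluations per token; prompt prefill is ignored.

```python
def sequential_evaluations(height, width, downsample=8, patch=2, fm_steps=50):
    """Rough count of sequential network calls needed to generate one image."""
    stride = downsample * patch
    tokens = (height // stride) * (width // stride)
    return {
        "image_tokens": tokens,
        "backbone_steps": tokens,
        "fm_head_calls": tokens * fm_steps,
    }


print(sequential_evaluations(256, 256))
# {'image_tokens': 256, 'backbone_steps': 256, 'fm_head_calls': 12800}
print(sequential_evaluations(512, 512))
# {'image_tokens': 1024, 'backbone_steps': 1024, 'fm_head_calls': 51200}
```

Doubling the resolution quadruples both counts, which is why few-step samplers (smaller `fm_steps`) and multi-token prediction (fewer sequential backbone steps) target different factors of the same product.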

High-Resolution Scaling

Autoregressive models require substantially more training steps than diffusion models to reach high-resolution generation, and the lack of a 2D spatial inductive bias in 1D positional encoding can introduce grid artifacts. Adapting high-resolution techniques from diffusion models to AR models remains an open challenge.
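The missing 2D inductive bias can be made concrete by flattening a token grid in raster order. In a 1D index, a token's lower neighbor sits a full row width away, while the last token of one row and the first token of the next (spatially far apart) are adjacent. This is a sketch of one plausible mechanism behind grid artifacts, not an analysis from the paper; the widths 16, 32, and 64 correspond to 256, 512, and 1024 pixel images only under the assumed 8× downsampling and 2×2 patching.

```python
def raster_index(row, col, width):
    """1D position of the token at (row, col) when a grid is flattened row by row."""
    return row * width + col


def neighbor_gaps(width):
    """1D index distance to the right neighbor, the lower neighbor, and across a row wrap."""
    right = raster_index(0, 1, width) - raster_index(0, 0, width)
    below = raster_index(1, 0, width) - raster_index(0, 0, width)
    wrap = raster_index(1, 0, width) - raster_index(0, width - 1, width)
    return right, below, wrap


for width in (16, 32, 64):
    print(width, neighbor_gaps(width))
# 16 (1, 16, 1)
# 32 (1, 32, 1)
# 64 (1, 64, 1)
```

The vertical gap grows with resolution while the misleading row-wrap gap stays at 1, so a purely 1D encoding becomes a poorer proxy for 2D adjacency exactly as images get larger.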

SFT Instability

SFT on small datasets is unstable, with improvements only manifesting at million-sample scale. The model is prone to overfitting or negligible gains, complicating checkpoint selection for optimal alignment.

Artifact Analysis

Transitioning to higher-dimensional latent spaces introduces new failure modes, including local and global noise artifacts and grid patterns, likely due to numerical instability, under-convergence, or positional encoding limitations.

Implications and Future Directions

NextStep-1 demonstrates that large-scale AR models with continuous tokens can be competitive with, and on some benchmarks exceed, diffusion models in text-to-image generation and image editing, provided that the tokenizer is robust and the latent space is well regularized. These findings challenge the prevailing assumption that diffusion is necessary for high-fidelity image synthesis at scale, and highlight the importance of architectural simplicity, data diversity, and latent space conditioning.

Theoretical implications include the decoupling of generative modeling from the sampling head, and the critical role of latent space geometry in AR generation. Practically, the work suggests that further improvements in inference efficiency, high-resolution scaling, and SFT stability are required for AR models to become the default paradigm for multimodal generation.

Conclusion

NextStep-1 establishes a new state-of-the-art for AR image generation with continuous tokens, achieving strong performance across compositional, world knowledge, and editing benchmarks. The work provides compelling evidence that, with appropriate architectural and tokenizer design, AR models can rival diffusion models in both quality and versatility. Future research should focus on inference acceleration, high-resolution strategies, and further analysis of latent space regularization to fully realize the potential of AR generative modeling at scale.


Authors (50)
