- The paper presents a structured governance framework that systematically assesses and mitigates biases throughout the LLM lifecycle.

- It integrates fairness-aware algorithms and continuous real-time monitoring to ensure ethical and transparent AI deployment.

- The framework addresses challenges like dynamic regulatory environments and data biases to promote equitable GenAI applications.

Introduction to AI Governance Frameworks

The paper "Data and AI governance: Promoting equity, ethics, and fairness in LLMs" argues that comprehensive governance frameworks are urgently needed to manage bias and ethical risk in LLMs. As adoption of Generative AI and LLMs grows rapidly, regulators such as the European Union have begun introducing rules, yet these do not fully address the complexities specific to GenAI systems. Biases in LLMs appear along several dimensions, including gender, race, and socioeconomic status, which calls for a governance framework that ensures fairness and ethical compliance.

Need for a Governance Framework

Building on their previous work on the Bias Evaluation and Assessment Test Suite (BEATS), the authors propose a structured data and AI governance framework. The framework aims to govern, assess, and quantify bias systematically across the entire lifecycle of machine learning models, from model development to production monitoring. The authors present this structured approach as a way to improve the safety, responsibility, and fairness of AI systems.

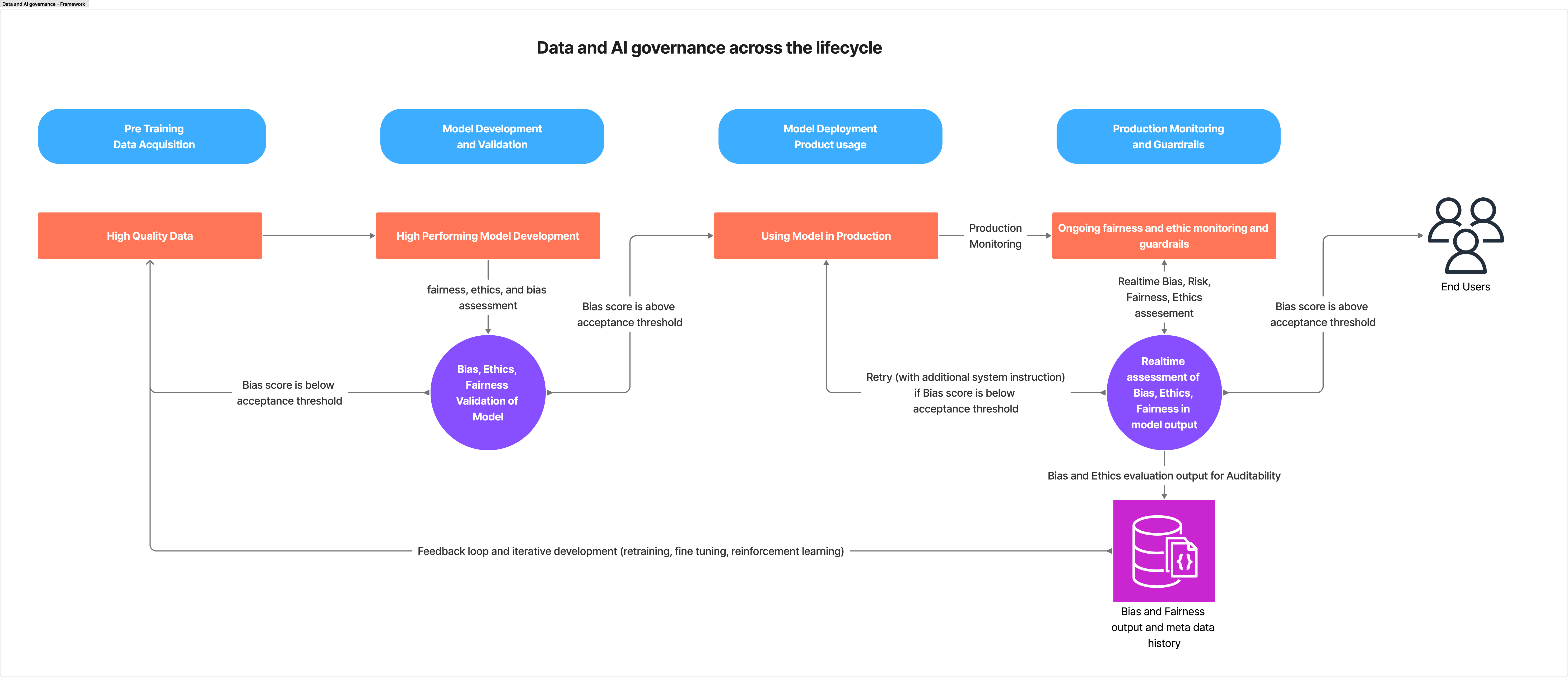

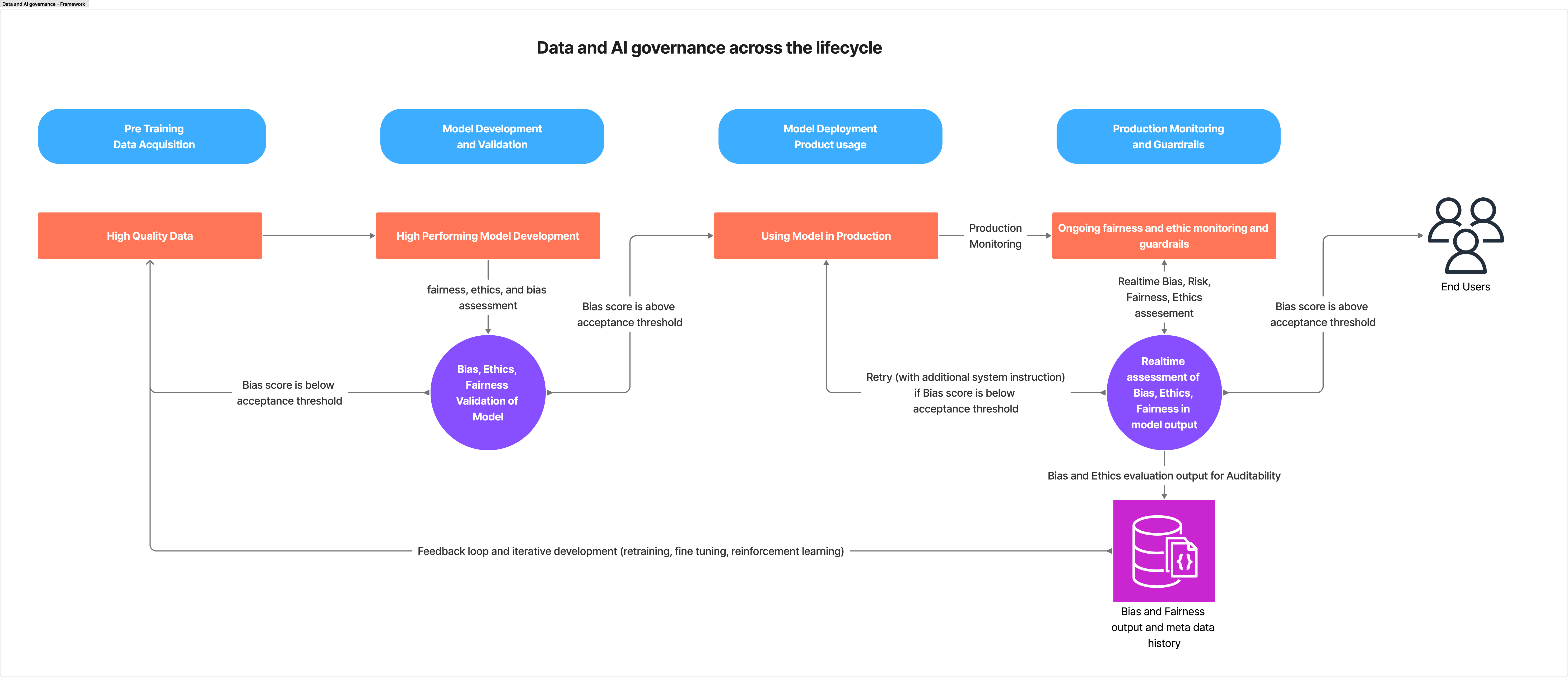

Figure 1: System design of data and AI governance across the AI life cycle. Bias evaluation runs as part of overall model evaluation before a model is deployed in products, and as an ongoing guardrail on model responses at inference time in production.
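The two evaluation points named in the caption, a pre-deployment gate and an inference-time guardrail, can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `evaluate_before_deploy`, `guarded_response`, `toy_scorer`, and the threshold `MAX_BIAS_SCORE` are hypothetical names, and the toy scorer is only a stand-in for a real bias evaluator such as BEATS, whose interface is not described here.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical threshold; the summarized paper does not state a value.
MAX_BIAS_SCORE = 0.2


@dataclass
class EvalResult:
    mean_bias: float
    passed: bool


def evaluate_before_deploy(
    responses: list[str],
    scorer: Callable[[str], float],
    threshold: float = MAX_BIAS_SCORE,
) -> EvalResult:
    """Offline gate: average the bias score over an evaluation set."""
    if not responses:
        raise ValueError("evaluation set is empty")
    mean_bias = sum(scorer(r) for r in responses) / len(responses)
    return EvalResult(mean_bias=mean_bias, passed=mean_bias <= threshold)


def guarded_response(
    response: str,
    scorer: Callable[[str], float],
    threshold: float = MAX_BIAS_SCORE,
    fallback: str = "[response withheld for review]",
) -> str:
    """Online guardrail: return the response only if its score is acceptable."""
    return response if scorer(response) <= threshold else fallback


def toy_scorer(text: str) -> float:
    """Placeholder scorer (assumption): share of words on a tiny flagged list."""
    flagged = {"always", "never"}
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(w in flagged for w in words) / len(words)
```

The same scorer is used in both places on purpose: sharing one scoring function keeps the deployment gate and the production guardrail consistent, so a model that passes evaluation is judged by the same standard once it is live.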

AI Lifecycle and Governance Integration

Effective governance must span the entire AI lifecycle, from data acquisition to model retirement. At each stage, specific strategies are deployed:

- Data Collection: Emphasis on source verification, demographic diversity audits, and compliance with privacy standards like GDPR and CCPA.

- Data Preprocessing and Labeling: Use of bias detection techniques and transparent labeling protocols to minimize subjective bias.

- Model Development and Training: Incorporation of fairness-aware algorithms, ethics review boards, and explainability techniques such as SHAP and LIME to enhance model transparency.

- Model Deployment and Monitoring: Implementation of continuous fairness observability through real-time dashboards and ethical feedback mechanisms.

By integrating governance practices holistically across the lifecycle, AI systems can better mitigate risks and align with ethical standards.
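As a rough illustration of what continuous fairness observability in the monitoring stage might involve, the sketch below tracks a rolling demographic parity gap over recent model outcomes. The class name `FairnessMonitor`, the window size, and the alert threshold are assumptions made for this example; the paper summary does not specify which fairness metric or thresholds its dashboards use.

```python
from collections import defaultdict, deque


class FairnessMonitor:
    """Rolling demographic parity check over the most recent outcomes."""

    def __init__(self, window: int = 1000, alert_gap: float = 0.1) -> None:
        # Only the last `window` events are kept; older ones are dropped.
        self.events: deque[tuple[str, bool]] = deque(maxlen=window)
        self.alert_gap = alert_gap  # hypothetical alerting threshold

    def record(self, group: str, favorable: bool) -> None:
        """Log one model outcome for a member of `group`."""
        self.events.append((group, favorable))

    def rates(self) -> dict[str, float]:
        """Favorable-outcome rate per group within the current window."""
        totals: dict[str, int] = defaultdict(int)
        favorable: dict[str, int] = defaultdict(int)
        for group, is_favorable in self.events:
            totals[group] += 1
            favorable[group] += int(is_favorable)
        return {g: favorable[g] / totals[g] for g in totals}

    def parity_gap(self) -> float:
        """Difference between the highest and lowest group rates."""
        rates = self.rates()
        if len(rates) < 2:
            return 0.0
        return max(rates.values()) - min(rates.values())

    def should_alert(self) -> bool:
        return self.parity_gap() > self.alert_gap


monitor = FairnessMonitor(window=4, alert_gap=0.1)
for group, outcome in [("a", True), ("a", True), ("b", True), ("b", False)]:
    monitor.record(group, outcome)
```

A dashboard could poll `parity_gap()` periodically and route `should_alert()` into a review queue. Demographic parity is only one of several possible fairness metrics and is used here for simplicity.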

Limitations and Challenges

While the governance framework is designed to mitigate biases, it faces several limitations:

- Dynamic Regulatory Landscapes: The evolving nature of global regulatory standards requires adaptive governance approaches.

- Framework Generalizability: Because the framework is designed for GenAI and LLM contexts, it may need adaptation for other AI domains, particularly those using structured or multimodal data.

- Bias Measurement Limitations: The predominance of English- and Western-centric training data in LLMs may leave bias measurements insensitive to non-dominant global viewpoints.

Conclusion

The proposed data and AI governance framework offers a comprehensive approach to managing bias and ethical issues in LLMs, emphasizing fairness and ethical alignment throughout the AI lifecycle. As organizations increasingly integrate GenAI into critical applications, the governance model gives them a tool for navigating complex ethical and regulatory landscapes. Its adaptive, feedback-driven structure addresses immediate risks and also supports ongoing improvement and compliance with evolving global standards. The framework is aimed at organizations that want to deploy GenAI technologies transparently and responsibly, minimizing societal biases and promoting equity across diverse applications.