- The paper introduces InstABoost, a method that boosts LLM attention on instructions to improve control and task performance.

- The method manipulates internal attention distributions to enhance in-context rule following with minimal computational cost.

- Experimental results show InstABoost outperforms standard prompting and other latent steering techniques across diverse tasks.

Instruction Following via Attention Boosting in LLMs

Controlling the behavior of LLMs is crucial for their safe and reliable deployment. While prompt engineering and fine-tuning are common strategies, latent steering offers a lightweight alternative by manipulating internal activations. This paper introduces Instruction Attention Boosting (InstABoost), a novel latent steering method that amplifies the effect of instruction prompting by modulating the model's attention mechanism during generation. InstABoost leverages theoretical insights suggesting that attention on instructions governs in-context rule following in transformer models. Empirical results demonstrate that InstABoost achieves superior control compared to traditional prompting and existing latent steering techniques.

Background on Steering Methods

Steering methods aim to guide the behavior of generative models by encouraging desirable outputs and suppressing undesirable ones. These methods broadly fall into two categories: prompt-based steering and latent space steering. Prompt-based steering uses natural language instructions within the input prompt, while latent space steering directly intervenes on the model's internal representations during generation. Despite advancements in both categories, challenges remain in understanding their efficacy and limitations. The paper argues that the effectiveness of steering methods is closely tied to the task itself, and that simple prompt-based methods can be remarkably effective, especially when augmented with targeted adjustments to the model's internal processing.

Instruction Attention Boosting (InstABoost)

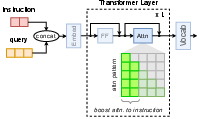

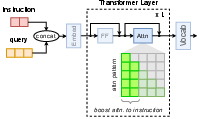

Figure 1: Illustration of InstABoost which steers LLM behavior by increasing the attention mass onto the tokens corresponding to a prepended instruction.

InstABoost is motivated by the observation that in-context rule following in transformer-based models can be controlled by manipulating attention on instructions. The approach treats instructions as in-context rules and boosts the LLM's attention to these rules to steer generations towards a target behavior. Given a tokenized instruction prompt p=(p1,…,pK) of length K and an input query x=(x1,…,xL) of length L, the method first forms a combined input sequence x′=p⊕x=(p1,…,pK,x1,…,xL) of length N=K+L. Within each Transformer layer ℓ, InstABoost modifies the attention distribution α to increase the weights assigned to the prompt tokens. It does so by scaling the attention on the first K key positions by a multiplier M>1, which yields boosted but unnormalized attention scores:

$$
\beta_{ij} =
\begin{cases}
\alpha_{ij} \cdot M & \text{if } 0 \le j < K \\
\alpha_{ij} & \text{if } K \le j < N
\end{cases}
$$

These scores are then re-normalized to ensure a valid probability distribution, resulting in the final steered attention distribution β′. The output of the attention mechanism aℓ is computed using these re-normalized, steered attention weights β′ and the unmodified value vectors V: aℓ=β′V. This approach amplifies the attention of a prepended prompt, effectively steering the model's output.
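The boost-and-renormalize step can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: the function name `instaboost_attention`, its parameter names, and the toy numbers are assumptions chosen for illustration, and the sketch assumes that re-normalization divides each row of boosted scores by its sum so that every query position again has attention weights summing to one.

```python
import numpy as np


def instaboost_attention(alpha, num_prompt_tokens, multiplier):
    """Boost attention on the first `num_prompt_tokens` key positions.

    alpha: post-softmax attention weights, shape (..., num_queries, num_keys),
           with each row summing to 1.
    Returns the re-normalized, steered weights (beta' in the text).
    """
    beta = np.array(alpha, dtype=float)          # copy so the input is untouched
    beta[..., :num_prompt_tokens] *= multiplier  # beta_ij = alpha_ij * M for 0 <= j < K
    # Assumed normalization: divide each row by its sum.
    return beta / beta.sum(axis=-1, keepdims=True)


# Toy example: one query position, K = 2 instruction tokens, L = 2 query tokens.
alpha = np.array([[0.1, 0.1, 0.4, 0.4]])
V = np.array([[1.0, 0.0],
              [1.0, 0.0],
              [0.0, 1.0],
              [0.0, 1.0]])  # value vectors are left unmodified

beta_prime = instaboost_attention(alpha, num_prompt_tokens=2, multiplier=4.0)
baseline_output = alpha @ V      # attention output without steering
steered_output = beta_prime @ V  # a = beta' V

print(beta_prime)       # [[0.25 0.25 0.25 0.25]]
print(baseline_output)  # [[0.2 0.8]]
print(steered_output)   # [[0.5 0.5]]
```

In this toy case the instruction tokens hold 20% of the attention mass before boosting and 50% afterwards, so the output moves toward the value vectors of the instruction. Positions with zero attention weight (for example, those removed by a causal mask) stay at zero, because the operation only rescales existing weights.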

Experimental Results

The paper presents a systematic evaluation of InstABoost, comparing it against instruction-only prompting and various latent steering methods across a suite of diverse tasks. The tasks range from generating less toxic completions to changing the sentiment of open-ended generations. The experiments were conducted using the Meta-Llama-3-8B-Instruct model, with hyperparameters selected via held-out validation to maximize task accuracy while maintaining high generation fluency.

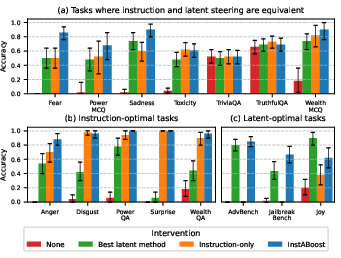

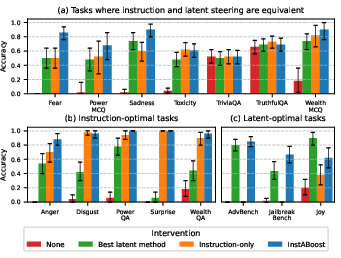

Figure 2: InstABoost outperforms or matches all competing interventions. For each task, we show the accuracy of the model without intervention (red), the best-performing latent steering method (green), the instruction-only intervention (orange), and InstABoost (blue). Error bars show a standard deviation above and below the mean, computed by bootstrapping.

The results demonstrate that InstABoost either outperforms or matches the strongest competing method across all tasks. In tasks where instruction and latent steering had similar performance, InstABoost consistently performed well. In tasks where instruction prompting was superior to latent steering, InstABoost preserved and often enhanced this performance. Notably, in jailbreaking tasks, where the default model and instruction-only baseline had nearly zero accuracy, InstABoost achieved significantly higher accuracy than standard latent steering methods.

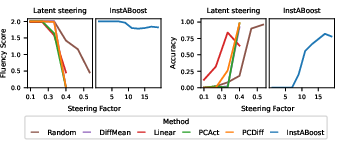

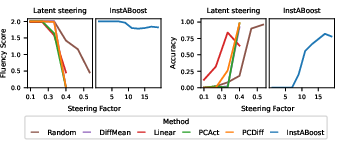

Figure 3: Unlike other latent steering methods, InstABoost maintains high generation fluency while increasing task accuracy. The figure shows the fluency score (left) and accuracy (right) versus varying steering factors for the latent steering methods on AdvBench. For the latent steering methods, we show the effect of varying the steering factor in the best-performing layer.

Comparison to Existing Steering Methods

The paper highlights a significant drawback of latent-only methods: their performance fluctuates considerably by task. In contrast, InstABoost consistently achieves strong performance across all task types, offering a more robust and reliable approach to model steering. The results also indicate that InstABoost maintains high generation fluency, unlike other latent steering methods where increasing the steering factor to enhance task accuracy often results in a sharp decline in generation fluency. This is because InstABoost intervenes on attention mechanisms, offering a more constrained re-weighting of information flow that better preserves the model's generative capabilities.

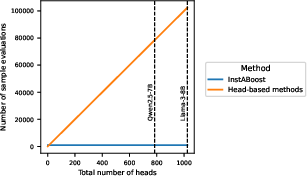

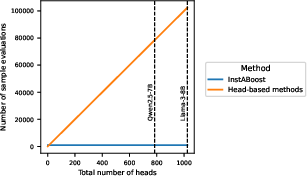

The paper discusses prior work on latent steering, including methods that apply a derived steering vector to model activations. It also addresses attention steering methods, such as those of Todd et al. (2024) and Zhang et al. (2024), which leverage attention mechanisms to steer model behavior. The paper notes that these approaches often involve a grid search over all attention heads across all layers, incurring substantial computational costs. In contrast, InstABoost's hyperparameter tuning cost is minimal and constant, regardless of model size.

Figure 4: Unlike head-based attention steering methods, InstABoost maintains a minimal and constant cost for hyperparameter tuning, regardless of model size. The plot displays the number of sample evaluations (y-axis) required for hyperparameter selection versus the total number of attention heads in a model (x-axis), assuming 100 validation samples.
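The scaling argument behind Figure 4 can be sketched with a simple cost model. The model below is an assumption made for illustration, not the paper's exact accounting: it supposes that a head-based grid search tries each attention head once on every validation sample, while tuning the boost multiplier tries a fixed number of candidate values on every validation sample. The function names, the head counts, and the choice of five candidate multipliers are all hypothetical.

```python
VALIDATION_SAMPLES = 100  # the assumption stated in the Figure 4 caption


def head_grid_search_cost(total_heads, samples=VALIDATION_SAMPLES):
    """Assumed cost model: one validation pass per candidate attention head."""
    return total_heads * samples


def multiplier_search_cost(num_candidates, samples=VALIDATION_SAMPLES):
    """Assumed cost model: one validation pass per candidate multiplier value."""
    return num_candidates * samples


# Hypothetical model sizes, expressed as total attention heads across all layers.
for total_heads in (256, 1024, 4096):
    print(
        total_heads,
        head_grid_search_cost(total_heads),
        multiplier_search_cost(num_candidates=5),  # hypothetical candidate count
    )
```

Under these assumptions the head-based cost grows linearly with the number of heads, whereas the multiplier search stays at the same number of sample evaluations for every model size.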

Conclusion

The paper introduces InstABoost, a novel attention-based latent steering method that boosts attention on task instructions. The method is evaluated on a diverse benchmark with 6 tasks and is shown to outperform or match other latent steering methods and prompting on the tasks considered. InstABoost offers improved consistency across diverse task types and maintains high generation fluency. These findings suggest that guiding a model's attention can be an effective and efficient method for achieving more predictable LLM behavior, offering a promising direction for developing safer and more controllable AI systems.